First Google gradually improved its WaveNet text-to-speech neural network to the point where it sounds almost perfectly human. Then they introduced Smart Reply which suggests possible replies to your emails. So it’s no surprise that they’ve announced an enhancement for Google Assistant called Duplex which can have phone conversations for you.

What is surprising is how well it works, as you can hear below. The first is Duplex calling to book an appointment at a hair salon, and the second is it making reservation’s with a restaurant.

Note that this reverses the roles when talking to a computer on the phone. The computer is the customer who calls the business, and the human is on the business side. The goal of the computer is to book a hair appointment or reserve a table at a restaurant. The computer has to know how to carry out a conversation with the human without the human knowing that they’re talking to a computer. It’s for communicating with all those businesses which don’t have online booking systems but instead use human operators on the phone.

Not knowing that they’re talking to a computer, the human will therefore speak as it would with another human, with all the pauses, “hmm”s and “ah”s, speed, leaving words out, and even changing the context in mid-sentence. There’s also the problem of multiple meanings for a phrase. The “four” in “Ok for four” can mean 4 pm or four people.

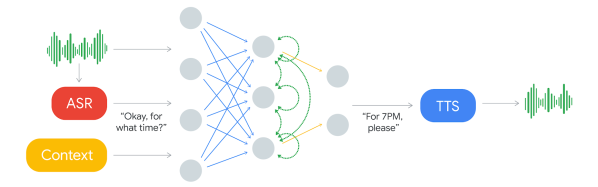

The component which decides what to say is a recurrent neural network (RNN) trained on many anonymized phone calls. The input is: the audio, the output from Google’s automatic speech recognition (ASR) software, and context such as the conversation’s history and the parameters of the conversation (e.g. book places at a restaurant, for how many, when), and more.

Producing the speech is done using Google’s text-to-speech technologies, Wavenet and Tacotron. “Hmm”s and “ah”s are inserted for a more natural sound. Timing is also taken into account. “Hello?” gets an immediate response. But they introduce latency when responding to more complex questions since replying too soon would sound unnatural.

There are limitations though. If it decides it can’t complete a task then it hands the conversation over to a human operator. Also, Duplex can’t handle a general conversation. Instead, multiple instances are trained on different domains. So this isn’t the singularity which we’ve talked about before. But if you’re tired of talking to computers at businesses, maybe this will provide a little payback by having the computer talk to the business instead.

On a more serious note, would you want to know if the person you were speaking to was in fact a computer? Perhaps Google should preface each conversation with “Hi! This is Google Assistant calling.” And even knowing that, would you want to have a human conversation with a computer, knowing that it’s “um”s were artificial? This may save time for the person whom the call is on behalf of, but the person being called may wish the computer would be a little more computer-like and speak more efficiently. Let us know your thoughts in the comments below. Or just check out the following Google I/O ’18 keynote presentation video where all this was announced.

Continue reading “Google’s Duplex AI Has Conversation Indistinguishable From Human’s”

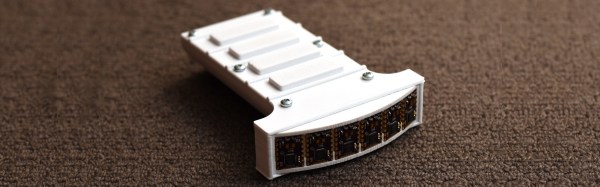

His source code is available on request but he does detail a neat software trick he uses for rotating the view. It may be confusing for some but as you move through the maze, your viewpoint rotates so that up is always the direction you’re facing. Luckily, the walls surrounding the user can be represented using 8-bits, four for east, west, north, and south, and four more for the corners. The maze is stored as a bitmap and from it, 8-bit values are extracted for the current position, each bit representing a wall around the position. To rotate the walls to match the user’s current orientation, the bits are simply shifted as needed. Then they’re shifted out to set each LED. Check it out in the video below.

His source code is available on request but he does detail a neat software trick he uses for rotating the view. It may be confusing for some but as you move through the maze, your viewpoint rotates so that up is always the direction you’re facing. Luckily, the walls surrounding the user can be represented using 8-bits, four for east, west, north, and south, and four more for the corners. The maze is stored as a bitmap and from it, 8-bit values are extracted for the current position, each bit representing a wall around the position. To rotate the walls to match the user’s current orientation, the bits are simply shifted as needed. Then they’re shifted out to set each LED. Check it out in the video below.

He uses a very clever arrangement of six sensors to get four virtual strings. Each sensor scans a 25-degree field of view. Three adjacent sensors are used to define a string, with the string being in the overlap of the outer two of those sensors. The middle sensor is used for the distance data.

He uses a very clever arrangement of six sensors to get four virtual strings. Each sensor scans a 25-degree field of view. Three adjacent sensors are used to define a string, with the string being in the overlap of the outer two of those sensors. The middle sensor is used for the distance data.