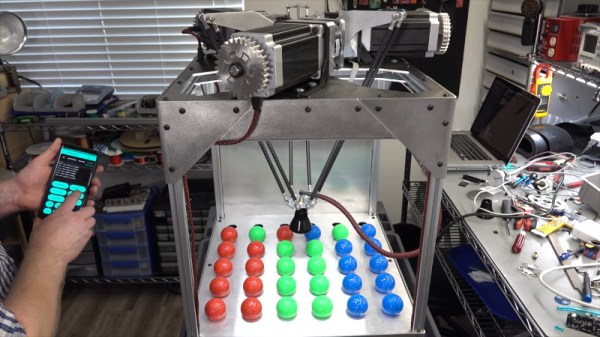

It’s a common situation faced by every hard-working American – you get home after a long day at the calcium mines, and find yourself stuck with a pile of colored golf balls that simply aren’t going to sort themselves. Finally, you can put away your sorting funnels and ball-handling gloves – [Anthony] has the solution.

That’s right – it’s a delta robot, tasked with the job of sorting golf balls by color. A Pixy2 object-tracking camera surveys the table, with the delta arms twitching out of the way to give the camera an unobstructed view. Once the positions of the balls are known, a bubble sort is run and the balls are rearranged into their correct color order.
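For a feel of what that looks like in software, here is a minimal Python sketch of a bubble sort that logs every adjacent swap, since each swap would correspond to the robot exchanging two neighboring balls. This is not [Anthony]'s code: the color names, the target order in `COLOR_ORDER`, and the function `bubble_sort_swaps` are all hypothetical, chosen only for illustration.

```python
# Hypothetical target order; the project's actual color order is an assumption here.
COLOR_ORDER = ["red", "orange", "yellow", "green", "blue"]


def bubble_sort_swaps(balls):
    """Bubble-sort a row of ball colors.

    Returns the sorted row and the list of adjacent swaps (i, i + 1)
    that were performed, in order.
    """
    rank = {color: i for i, color in enumerate(COLOR_ORDER)}
    balls = list(balls)  # work on a copy, leave the input untouched
    swaps = []
    for end in range(len(balls) - 1, 0, -1):
        swapped = False
        for i in range(end):
            # Neighbors out of order: exchange them and log the move.
            if rank[balls[i]] > rank[balls[i + 1]]:
                balls[i], balls[i + 1] = balls[i + 1], balls[i]
                swaps.append((i, i + 1))
                swapped = True
        if not swapped:
            break  # a full pass with no swaps means the row is sorted
    return balls, swaps


sorted_balls, swaps = bubble_sort_swaps(
    ["blue", "red", "green", "yellow", "orange"]
)
print(sorted_balls)
print(len(swaps), "swaps:", swaps)
```

The swap log is the interesting part: on a real machine, it is the list of pick-and-place exchanges the arm would have to carry out.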

[Anthony] readily admits the bubble sort is very inefficient at this task; it was an intentional choice so it could later be compared with other sorting methods. [Anthony] also goes into detail on the development process of the suction gripper, and discusses damping methods to reduce noise.
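To see why bubble sort is a poor fit when every swap is a physical move, this rough Python sketch counts the swaps made by bubble sort and by selection sort on the same row of balls. Selection sort is just one possible point of comparison; the write-up doesn't say which other methods [Anthony] has in mind. The function names and the encoding of colors as integers are assumptions for illustration.

```python
def bubble_swap_count(items):
    """Count the adjacent swaps bubble sort makes on a copy of items."""
    items = list(items)
    count = 0
    for end in range(len(items) - 1, 0, -1):
        for i in range(end):
            if items[i] > items[i + 1]:
                items[i], items[i + 1] = items[i + 1], items[i]
                count += 1
    return count


def selection_swap_count(items):
    """Count the swaps selection sort makes on a copy of items."""
    items = list(items)
    count = 0
    for i in range(len(items) - 1):
        # Index of the smallest remaining item from position i onward.
        smallest = min(range(i, len(items)), key=items.__getitem__)
        if smallest != i:
            items[i], items[smallest] = items[smallest], items[i]
            count += 1
    return count


# Colors encoded as their position in the desired order (an assumption).
balls = [4, 0, 3, 2, 1]
print("bubble sort swaps:   ", bubble_swap_count(balls))
print("selection sort swaps:", selection_swap_count(balls))
```

Swap count isn't the whole story for a robot, though: bubble sort only ever exchanges neighbors, while selection sort's swaps can span the whole row, so each one may mean more arm travel.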

Delta robots are always fun to watch, and are a good choice for sorting tasks. We’ve seen some really tiny ones, too. Video after the break.
