Anyone who’s ever owned an older car will know the feeling: the nagging worry at the back of your mind that today might be the day that something important actually stops working. Oh, it’s not the little problems that bother you: the rips in the seats, the buzz out of the rear speakers, and that slow oil leak might have annoyed you at first, but they eventually just blend into the background. So long as the car starts and can get you from point A to B, you can accept the sub-optimal performance that inevitably comes with age. Someday you’ll no longer be able to ignore the mounting issues and you’ll have to get a new vehicle, but today isn’t that day.

Looking at developments over the last few years, one could argue that the International Space Station, while quite a bit more advanced and costly than the old beater parked in your driveway, is entering a similar phase of its lifecycle. The first modules of the sprawling orbital complex were launched all the way back in 1998, and had a design lifetime of just 15 years. But with no major failures and the Station’s overall condition remaining stable, both NASA and Russia’s Roscosmos space agency have agreed to several mission extensions. The current agreement will see crews living and working aboard the Station until 2030, but as recently as January, NASA and Roscosmos officials were quoted as saying a further extension isn’t out of the question.

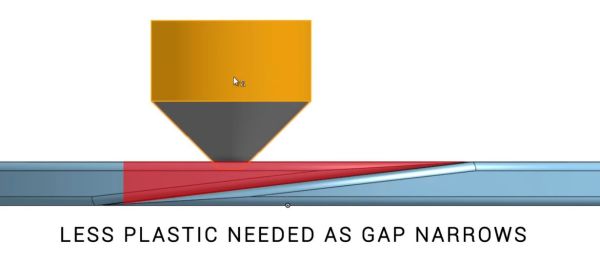

Still, there’s no debating that the ISS isn’t in the same shape it was when construction was formally completed in 2011. A perfect case in point: the rate of air leaking out of the Russian side of the complex has recently doubled, yet this is being treated as little more than a minor annoyance, as mission planners know what the problem is and how to minimize the impact it has on Station operations.
