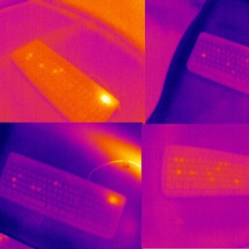

An age-old vulnerability of physical keypads is visibly worn keys. For example, a number pad with digits worn down from repeated use gives an attacker a clear starting point. The same concept can be applied to keyboards by using a thermal camera with the help of machine learning, though it turns out that some types of keys and typing styles are harder to read than others.

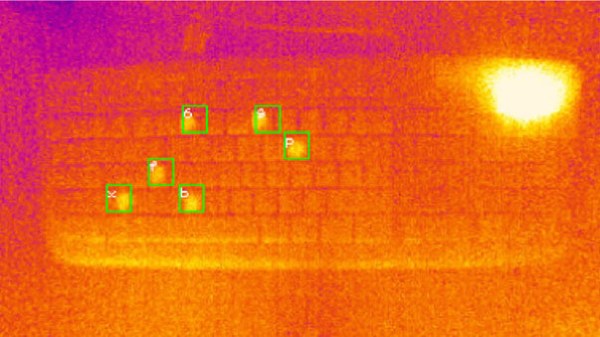

Touching a key with a fingertip imparts a slight amount of body heat, and that small amount of heat can be spotted by a thermal sensor. We’ve seen this basic approach used since at least 2005, and two things have changed since then: thermal cameras have gotten much more common, and researchers discovered that by combining thermal readings with machine learning, it’s possible to eke out slight details too difficult or subtle to spot by human eye and judgement alone.
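To make the basic idea concrete, here is a deliberately naive sketch of reading a heat trace. It is not the researchers’ machine learning pipeline. It assumes every press leaves about the same amount of heat and cools at the same rate, so the coolest touched key was pressed first, and it ignores repeated keys. The function name `guess_press_order`, the 0.3 °C threshold, and the sample temperatures are all made up for illustration.

```python
def guess_press_order(readings, ambient, threshold=0.3):
    """Guess which keys were touched and in what order.

    readings:  dict of key label -> measured temperature in deg C (hypothetical data)
    ambient:   temperature of an untouched key in deg C
    threshold: minimum rise above ambient to count as touched (assumed value)
    """
    # Keep only keys that are noticeably warmer than an untouched key.
    touched = {
        key: temp - ambient
        for key, temp in readings.items()
        if temp - ambient >= threshold
    }
    # Under the equal-heat assumption, less residual heat means an older press.
    return sorted(touched, key=touched.get)


if __name__ == "__main__":
    sample = {"1": 22.4, "2": 21.0, "7": 23.1, "9": 22.8}
    print(guess_press_order(sample, ambient=21.0))  # ['1', '9', '7']
```

Real typing breaks these assumptions quickly, since press duration and finger temperature vary from key to key, which is where a trained model earns its keep over a simple sort.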

Research and findings from the University of Glasgow show how even a 16-symbol password can be attacked with an average accuracy of 55%. Shorter passwords are much easier to decipher, with the system attacking 6- and 8-symbol passwords with accuracies of 92% and 80%, respectively. In the study, thermal readings were taken up to a full minute after the password was entered, but earlier readings result in higher accuracy.

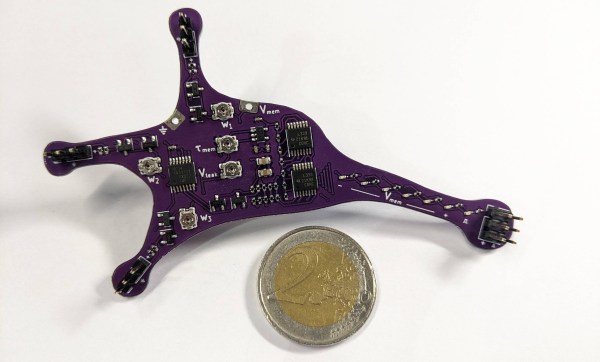

A few factors make the system’s job harder. Fast typists spend less time touching keys, and therefore transfer less heat when they do, making things a little more challenging. Interestingly, the material of the keycaps plays a large role. ABS keycaps retain heat far more effectively than PBT (a material we often see in custom keyboard builds). It also turns out that the tiny amount of heat from LEDs in backlit keyboards runs effective interference when it comes to thermal readings.

Amusingly, this kind of highly modern attack would be entirely useless against a scramblepad. Scramblepads are vintage devices that mix up which numbers go with which buttons each time the pad is used. Thermal imaging and machine learning would be able to tell which buttons were pressed and in what order, but that still wouldn’t help! It’s a reminder that when it comes to security, tech does matter but fundamentals can matter more.
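The scramblepad principle is easy to sketch in a few lines. This is only an illustration of the idea, not how any particular device is implemented, and the names `scrambled_layout` and `buttons_for_pin` are hypothetical. A fresh layout is drawn for every use, so the physical buttons pressed for the same PIN change from one entry to the next.

```python
import random


def scrambled_layout(rng):
    """Return a shuffled digit layout; list index = physical button position."""
    digits = list("0123456789")
    rng.shuffle(digits)
    return digits


def buttons_for_pin(pin, layout):
    """Return the physical button positions pressed to enter `pin`."""
    return [layout.index(digit) for digit in pin]


if __name__ == "__main__":
    # A real device would want a secure randomness source; this is a demo.
    rng = random.Random()
    pin = "1379"  # made-up PIN
    for attempt in range(3):
        layout = scrambled_layout(rng)
        print("layout:", "".join(layout), "buttons:", buttons_for_pin(pin, layout))
```

An attacker who recovers the button positions from a heat trace learns nothing useful unless they also saw the layout that was on display at that moment.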