I can’t remember how exactly I came across the OrbiTouch keyboard, but it’s been on my list to clack about for a long time. Launched in 2003, the OrbiTouch is a keyboard and mouse in one. It’s designed for people who can’t keyboard regularly, or simply want a different kind of experience.

The OrbiTouch was conceived of by a PhD student who started to experience carpal tunnel while writing papers. He spent fifteen years developing the OrbiTouch and found that it could assist many people who have various upper body deficiencies. So, how does it work?

It’s Like Playing Air Hockey with Both Hands

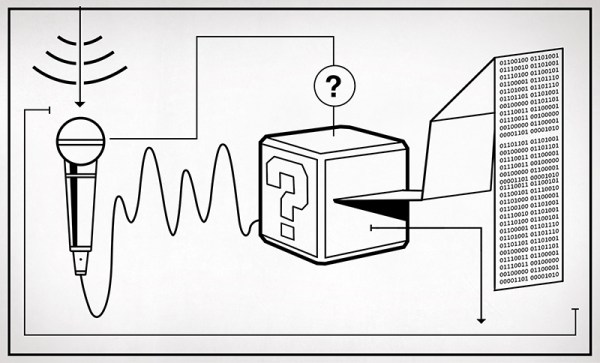

To use this keyboard, you put both hands on the sliders and move them around. They are identical eight-way joysticks or D-pads, essentially. The grips sort of resemble a mouse and have what looks like a special resting place for your pinky.

One slider points to groups of letters, numbers, and special characters, and the other chooses a color from a special OrbiTouch rainbow. Pink includes things like parentheses and their cousins along with tilde, colon, and semicolon. Black is for the modifiers like Tab, Alt, Ctrl, Shift, and Backspace. These special characters and modifiers aren’t shown on the hieroglyph slider, so you just have to keep the guide handy until you memorize the placement of everything around the circle.

The alphabet is divided into groups of five letters, color-coded in rainbow order starting with orange, because red is reserved for the F keys. So A is orange, B is yellow, C is green, D is blue, E is purple, and then it starts over with F at orange. If you wanted to type cab, for instance, you would start by moving the hieroglyph slider to the first alphabet group and the color slider to green.
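
If it helps to see the letter scheme spelled out, here is a rough Python sketch of the group-and-color lookup described above. It only covers the alphabet, and it assumes the five-letter pattern simply repeats all the way to Z, which would leave Z alone in a sixth group. The function names and the zero-based group numbering are made up for illustration and are not taken from the OrbiTouch guide.

```python
import string

# Color order as described above; red is skipped because it is reserved for the F keys.
COLORS = ["orange", "yellow", "green", "blue", "purple"]


def slider_positions(letter):
    """Return (group, color) for a letter, where group 0 holds A through E.

    Assumes the five-letter pattern repeats through the whole alphabet.
    """
    index = string.ascii_lowercase.index(letter.lower())
    return index // 5, COLORS[index % 5]


def spell(word):
    """List the slider positions needed for each letter of a word."""
    return [slider_positions(ch) for ch in word]


print(spell("cab"))  # [(0, 'green'), (0, 'orange'), (0, 'yellow')]
```

So for cab, the hieroglyph slider stays on the first alphabet group the whole time while the color slider goes green, orange, yellow.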
