Stop motion animation is often called a lost art, as doing it (or at least, doing it well) is incredibly difficult and time-consuming. Every detail on the screen, no matter how minute, has to be placed by human hands hundreds of times so that it looks smooth when played back at normal speed. The unique look of stop motion is desirable enough that it still gets produced, but it’s far less common than hand-drawn or even computer animation.

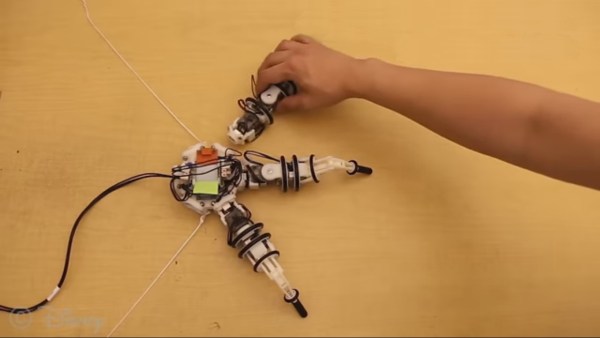

If you ever wanted to know just how much work goes into producing even a few minutes of stop motion animation, look no further than the fascinating work of [Special Krio]. He not only documented the incredible attention to detail required to produce high-quality animation with this method, but also the creation of his custom robotic character.

Characters in stop motion animation often have multiple interchangeable heads to enable switching between different expressions. But with his robotic character, [Special Krio] only has to worry about the environments, and can let his mechanized star do the “acting”. This saves time, which can be used for things such as making 45 individual resin “drops” to animate pouring a cup of tea (seriously, go look).

To build his character, [Special Krio] first modeled her out of terracotta to get the exact look he wanted. He then used a DIY 3D laser scanner to create a digital model, which he in turn used to help design the internal structures and components that he 3D printed on an Ultimaker. The terracotta original was used once again when it was time to make molds for the character’s skin, which was cast in RTV rubber. Then it was just the small matter of painting all the details and making her clothes. All told, the few minutes of video after the break took years to produce.

This isn’t the first time we’ve seen 3D printing used to create stop motion animation, but the final product here is really in a league of its own.

[Thanks to Antonio for the tip.]


In this age of
