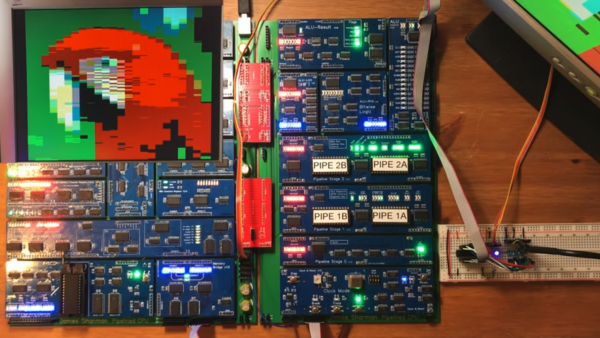

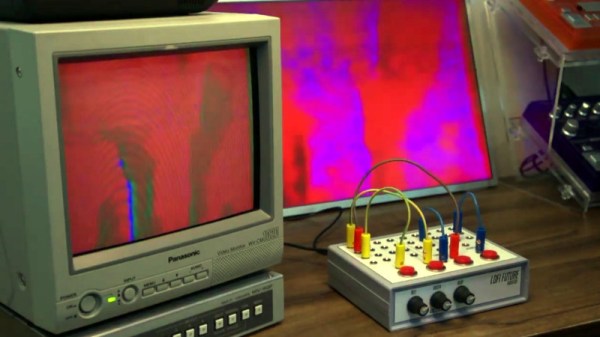

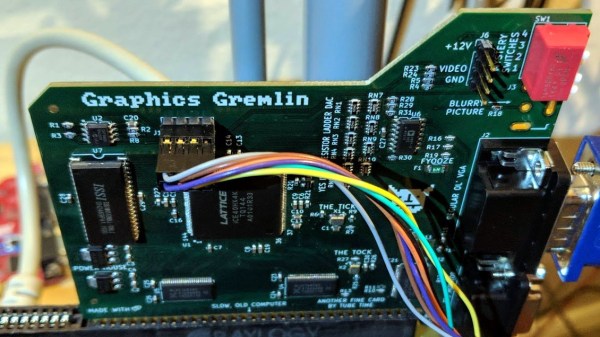

[James Sharman] has built an impressive 8-bit homebrew computer. Based on TTL logic chips, it has a pipelined design which makes it capable of Commodore-level computing, but [James] hasn’t quite finished everything yet. While it is currently built on its own custom PCB, it has a limiting LCD display which isn’t up to the standards of the rest of the build. To resolve this issue, he decided to implement VGA from scratch.

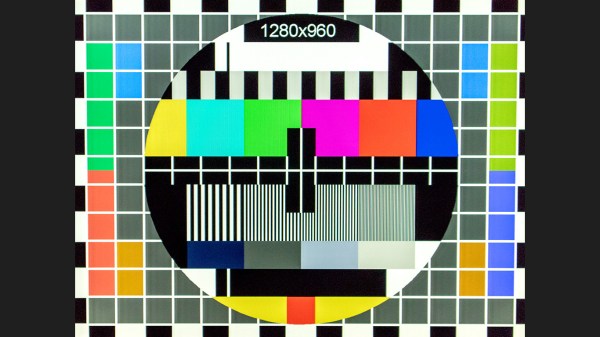

This isn’t a bit-bang VGA implementation, either. He plans for full resolution (640×480) which will push the limits of his hardware. He also sets goals of a 24-bit DAC which will allow for millions of colors, the ability to use sprites, and hardware scrolling. Since he’s doing all of this from scratch, the plan is to keep it as simple as possible and make gradual improvements to the build as he goes. To that end, the first iteration uses a single latching chip with some other passive components. After adding some code to the CPU to support the new video style, [James] is able to display an image on his monitor.

While the image of the parrot he’s displaying isn’t exactly perfect yet, it’s a great start for his build and he does plan to make improvements to it in future videos. We’d say he’s well on his way to reproducing a full 8-bit retrocomputer. Although VGA is long outdated for modern computers, the standard is straightforward to implement and limited versions can even be done with very small microcontrollers.

Thanks to [BaldPower] for the tip!

Continue reading “VGA From Scratch On A Homebrew 8-bit Computer”