Despite their claims of innocence, we all know that the big tech firms are listening to us. How else to explain the sudden appearance of ads related to something we’ve only ever spoken about, seemingly in private but always in range of a phone or smart speaker? And don’t give us any of that fancy “confirmation bias” talk — we all know what’s really going on.

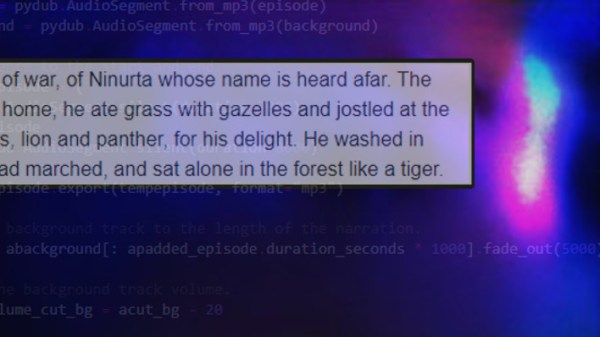

And now, to make matters worse, it turns out that just listening to your keyboard clicks could be enough to decode what’s being typed. To be clear, [Georgi Gerganov]’s “KeyTap3” exploit does not use any of the usual RF-based methods we’ve seen for exfiltrating data from keyboards on air-gapped machines. Rather, it uses just a standard microphone to capture audio while typing, building a cluster map of the clicks with similar sounds. By analyzing the clusters against the statistical likelihood of certain sequences of characters appearing together — the algorithm currently assumes standard English, and works best on clicky mechanical keyboards — a reasonable approximation of the original keypresses can be reconstructed.
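For a feel of how those two stages fit together, here is a toy Python sketch. To be clear, this is not [Georgi Gerganov]'s code: the function names, the made-up 2-D "click features", the greedy clustering, and the brute-force search are all our own assumptions, chosen only to illustrate the idea of grouping similar-sounding clicks and then picking the letter assignment that a bigram model finds most plausible. A real attack works on actual audio with a full alphabet and needs a far smarter search.

```python
from itertools import permutations
from math import dist, log


def cluster_clicks(features, threshold=0.5):
    """Greedy clustering (hypothetical stand-in for real audio clustering).

    Each click joins the first cluster whose seed point is closer than
    `threshold`; otherwise it starts a new cluster.
    """
    seeds, labels = [], []
    for f in features:
        for i, seed in enumerate(seeds):
            if dist(f, seed) < threshold:
                labels.append(i)
                break
        else:
            seeds.append(f)
            labels.append(len(seeds) - 1)
    return labels


def bigram_logprobs(corpus, alphabet):
    """Log-probabilities of letter pairs in `corpus`, with add-one smoothing."""
    counts = {(a, b): 1 for a in alphabet for b in alphabet}
    for pair in zip(corpus, corpus[1:]):
        if pair in counts:
            counts[pair] += 1
    total = sum(counts.values())
    return {pair: log(n / total) for pair, n in counts.items()}


def decode(labels, alphabet, logp):
    """Try every cluster-to-letter assignment and keep the likeliest one.

    Brute force only works for tiny alphabets, and it assumes there are
    no more clusters than letters.
    """
    n_clusters = max(labels) + 1

    def score(assignment):
        return sum(
            logp[(assignment[a], assignment[b])]
            for a, b in zip(labels, labels[1:])
        )

    best = max(permutations(alphabet, n_clusters), key=score)
    return "".join(best[label] for label in labels)


# Four "clicks" from two keys, described by invented 2-D feature vectors.
clicks = [(0.0, 0.0), (1.0, 1.0), (0.1, 0.0), (1.0, 0.9)]
labels = cluster_clicks(clicks)
model = bigram_logprobs("abababab", "ab")
print(labels, decode(labels, "ab", model))
```

Note that the decoder never learns which sound belongs to which key directly. It only knows which clicks sound alike, and leans entirely on language statistics to do the rest, which is why the assumed language matters so much.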

If you’d like to see it in action, check out the video below, which shows the algorithm doing a pretty good job decoding text typed on an unplugged keyboard. Or try it yourself: the link above implements KeyTap3 in-browser. We gave it a shot, but since we belong to the non-mechanical keyboard underclass, it couldn’t make sense of the mushy sounds it heard. Then again, our keyboard inferiority affords us some level of protection from the exploit, so there’s that.

Editor’s Note: Just tried it on a mechanical keyboard with Cherry MX Blue switches, and it couldn’t make heads or tails of what was typed, so your mileage may vary. Let us know in the comments if it worked for you.

What strikes us is how simple it would be to deploy an exploit like this. Most side-channel attacks require such a contrived scenario for installing the exploit that just breaking in and stealing the computer would be easier. All KeyTap3 needs is a covert audio recording, and the deed is done.
