If you’re like us, you spend more time than you care to admit staring at a computer screen. Whether it’s trying to find the right words for a blog post or troubleshooting some code, the end result is the same: an otherwise normally functioning human being is reduced to a slack-jawed zombie. Wouldn’t it be nice to be able to quantify just how much of your life is being wasted basking in the flickering glow of your monitor? Surely that wouldn’t be a crushingly depressing piece of information to have at the end of the week.

With the magic of modern technology, you need wonder no longer. Prolific hacker [dekuNukem] has created the aptly named “facepunch”, which allows you to “punch in” with nothing more than your face. Just sit down in front of your Raspberry Pi’s camera, and the numbers start ticking away. It’s like the little clock in the front of a taxi: except at the end you don’t have to pay anyone, you just have to come to terms with what your life has become. So that’s cool.

With the magic of modern technology, you need wonder no longer. Prolific hacker [dekuNukem] has created the aptly named “facepunch”, which allows you to “punch in” with nothing more than your face. Just sit down in front of your Raspberry Pi’s camera, and the numbers start ticking away. It’s like the little clock in the front of a taxi: except at the end you don’t have to pay anyone, you just have to come to terms with what your life has become. So that’s cool.

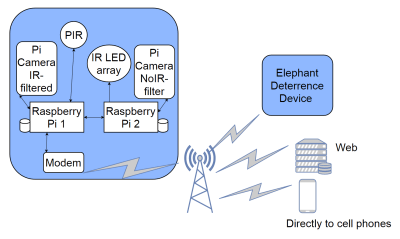

It doesn’t take much hardware to play along at home. All you need is a Raspberry Pi and the official camera accessory. Though for the full effect you should add one of the displays supported by the Luma.OLED driver so you can see the minutes and hours ticking away in real-time.

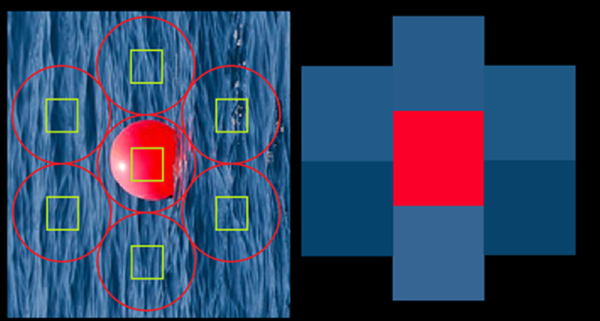

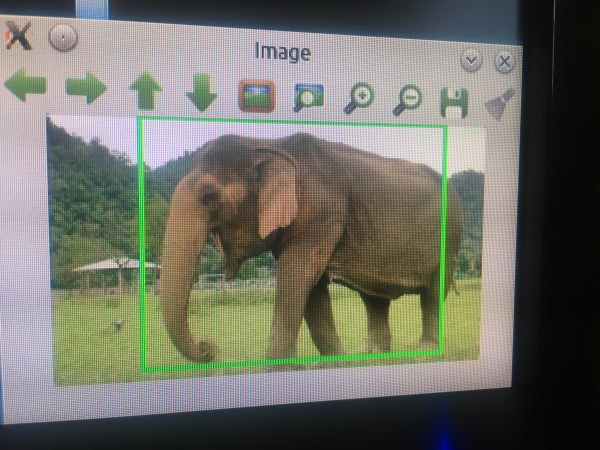

To get the facial recognition going, all you need to do is take a well-lit picture of your face and save it as a 400×400 JPEG. The Python 3 script will take care of the rest: checking the frames from the camera every few seconds to see if your beautiful mug is in the frame, and incrementing the counters accordingly.

Even if you’re not in the market for an Orwellian electronic supervisor, this project is a great example to get you started in the world of facial recognition. With a little luck, you’ll be weaponizing it in no time.

[Bjørn Karmann]’s

[Bjørn Karmann]’s