[AlexMiller11] shared a project for a DIY gesture-sensing remote control that acts like a Bluetooth keyboard, capable of controlling media and presentations on a computer with a high degree of accuracy.

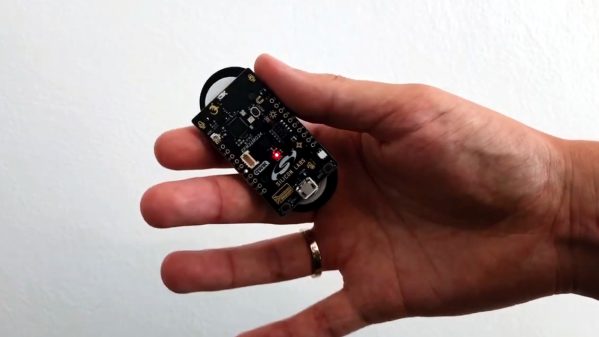

The hardware is a Silicon Labs xG24 dev kit, a small IoT-focused board that can run from a CR2032 coin cell. The six-axis IMU supplies the motion data, and the rest is software that interprets that data to work out which gesture the user is making. That happens with a Neuton.AI model and SDK, a tiny but effective machine learning framework for small devices.
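To give a feel for what "interpreting that data" can involve, here is a minimal sketch of one common approach: slice the IMU stream into overlapping windows and reduce each window to a few statistics per axis before handing it to a classifier. This is not taken from the project or the Neuton.AI SDK. The axis ordering, the function names `window_features` and `sliding_windows`, and the choice of statistics are all assumptions made for illustration.

```python
from statistics import mean, pstdev

# Assumed axis order for a six-axis IMU: three accelerometer, three gyroscope.
AXES = ("ax", "ay", "az", "gx", "gy", "gz")


def sliding_windows(stream, size, step):
    """Yield overlapping windows of `size` samples, advancing by `step`."""
    for start in range(0, len(stream) - size + 1, step):
        yield stream[start:start + size]


def window_features(samples):
    """Reduce one window of 6-tuples to [mean, std, min, max] per axis."""
    if not samples:
        raise ValueError("window must contain at least one sample")
    features = []
    # zip(*samples) regroups the window from per-sample tuples to per-axis columns.
    for axis_values in zip(*samples):
        features.extend([
            mean(axis_values),
            pstdev(axis_values),
            min(axis_values),
            max(axis_values),
        ])
    return features
```

Each window yields 24 numbers (four statistics for each of the six axes), which is the kind of compact input a small on-device model can classify. The real firmware may use different features or feed raw samples directly.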

How does it actually work? The device acts as a Bluetooth HID device, and gets connected to a PC in the same way as a regular Bluetooth keyboard. Once that’s done, recognized gestures are printed to the serial port as well as sent via Bluetooth to the host machine. Media can then be played, paused, volume adjusted, presentations controlled, and more. More details are on the project’s GitHub repository. There’s also a demo video that explains exactly what’s going on, embedded below the page break.
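The gesture-to-keypress step can be sketched roughly as follows. The usage IDs are the standard ones from the HID Consumer page (0x0C), but everything else is an assumption for illustration: the gesture labels, the confidence threshold, the two-byte report layout, and the names `GESTURE_TO_ACTION` and `gesture_to_report` are hypothetical and not taken from the project's firmware.

```python
import struct

# Standard usage IDs from the HID Consumer page (0x0C).
CONSUMER_USAGES = {
    "play_pause": 0x00CD,
    "next_track": 0x00B5,
    "previous_track": 0x00B6,
    "volume_up": 0x00E9,
    "volume_down": 0x00EA,
    "mute": 0x00E2,
}

# Hypothetical gesture labels; the real model's classes may differ.
GESTURE_TO_ACTION = {
    "double_tap": "play_pause",
    "swipe_right": "next_track",
    "swipe_left": "previous_track",
    "rotate_cw": "volume_up",
    "rotate_ccw": "volume_down",
}

# Sending an all-zero usage afterwards tells the host the key was released.
RELEASE_REPORT = struct.pack("<H", 0)


def gesture_to_report(label, confidence, threshold=0.8):
    """Return a 2-byte little-endian consumer report, or None to send nothing."""
    if confidence < threshold:
        return None  # ignore low-confidence predictions to avoid stray keypresses
    action = GESTURE_TO_ACTION.get(label)
    if action is None:
        return None  # unmapped or idle class
    return struct.pack("<H", CONSUMER_USAGES[action])
```

For example, `gesture_to_report("double_tap", 0.95)` produces the bytes for Play/Pause, which the firmware would send followed by `RELEASE_REPORT`. Presentation control would more likely use ordinary keyboard usages such as the arrow keys, which live on a different HID page and are left out of this sketch.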

Machine learning is a way of using software to solve the kinds of problems humans are not very good at writing programs to solve, and accurate gesture recognition is a good example. Not all such applications require heaps of overheating GPUs, either. We’ve seen the concept of a neural network stripped down to its bare essentials running on an Arduino Uno, for those who would like to better appreciate the fundamentals.
