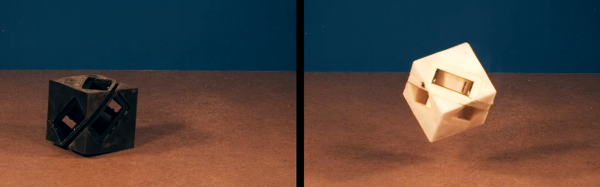

MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) recently put out a paper about an interesting advance in 3D printing. Naturally, coming from the computer science and AI lab, the paper has a robotic bent to it. In summary, they can 3D print a robot with a rubber skin of arbitrarily varying stiffness. The end goal? Shock-absorbing skin!

They modified an Objet printer to print with three materials simultaneously: a UV-curing solid, a UV-curing rubber, and an unreactive liquid. By carefully depositing these in a pattern, they can tune the properties of the printed material as they like. In doing so, they have been able to print monobody robots that, simply put, crash into the ground better. There are other uses of course, from joints to sensor housings. There’s more in the paper.

We’re not sure how this compares to the Objet’s existing ability to mix flexible resins together to produce different Shore ratings. Likely this offers more seamless transitions and a wider range of material properties. From the paper, it also appears to damp shocks better than the alternatives. Either way, it’s an interesting advance and approach. We wonder if it’s possible to reproduce on a larger scale with FDM.

Hackaday reader [gratian] tipped us off about the course available from

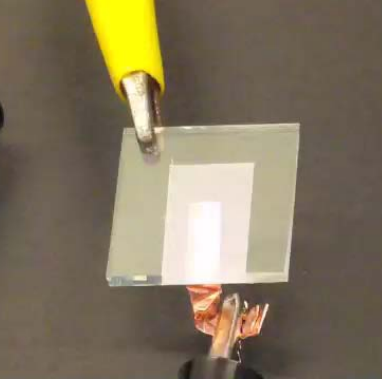

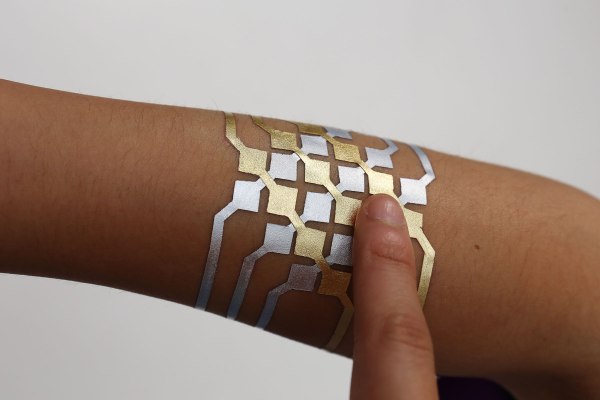

Generally speaking, gold leaf is incredibly fragile. In this process, to yield the cleanest-looking leaf, the gold is not actually cut. Instead, the temporary tattoo film and backer are cut on a standard desktop vinyl cutter. The gold leaf is then applied to the entire film surface. The cut film/leaf can then be “weeded” (removing the unwanted portions of film, which were isolated from the rest by the cutting process) to complete the temporary tattoo. The team tested this method and found that traces 4.5 mm or wider were resilient enough to last the entire day on your skin.
