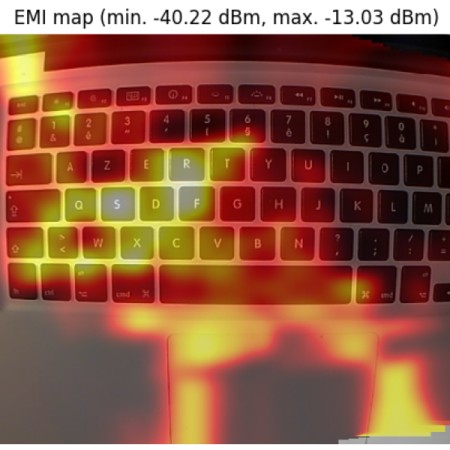

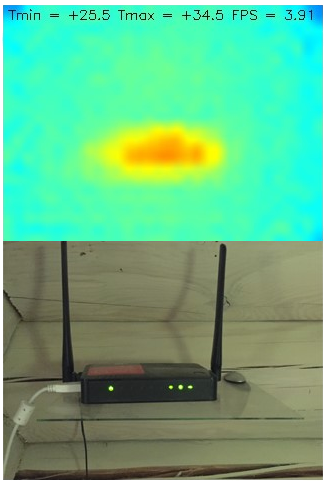

It’s one thing to know that your device is leaking electromagnetic interference (EMI), but if you really want to solve the problem, it helps to know where the emissions are coming from. This heat-mapping EMI probe answers that question, with style. It uses a webcam to track an EMI probe and then overlays a heat map of the interference on the image itself.

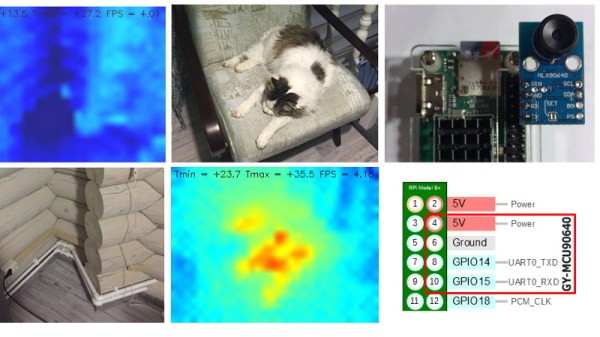

Regular readers will note that the hardware end of [Charles Grassin]’s EMI mapper bears a strong resemblance to the EMC probe made from semi-rigid coax we featured recently. Built as a cheap DIY substitute for an expensive off-the-shelf probe set for electromagnetic testing, the probe was super simple: just a semi-rigid coax jumper with one SMA plug lopped off and the raw end looped back and soldered. Connected to an SDR dongle, the probe proved useful for tracking down noisy circuits.

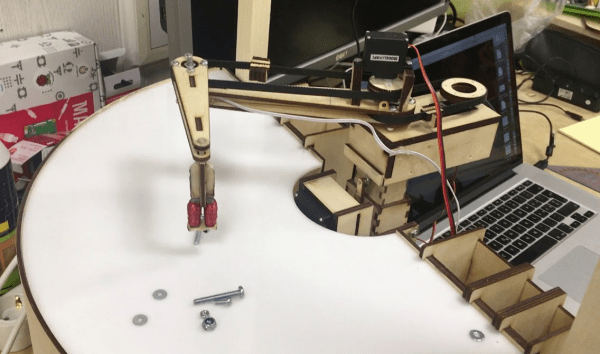

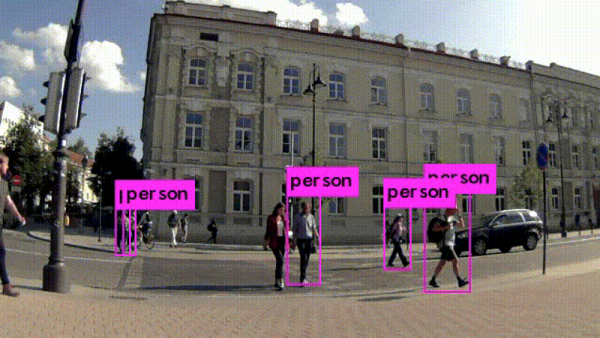

[Charles]’ project takes that a step further by adding a camera that looks down on the device under test (DUT). The probe is moved over the DUT by hand while OpenCV tracks it, and an augmented reality display shows which areas have already been covered. A Python script records the probe’s position along with the RF power measurements. The video below shows the capture process and what the data looks like when reassembled as an overlay on top of the device.
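To get a feel for the reassembly step, here is a minimal sketch of how logged probe positions and power readings could be binned into a heat map. This is not [Charles]’ code: the function names `build_heatmap` and `coverage`, the `(x, y, power_db)` sample format, and the 20-pixel cell size are all assumptions made for illustration, and averaging dB readings directly is a simplification.

```python
import math


def build_heatmap(samples, width, height, cell=20):
    """Bin (x, y, power_db) samples into a grid and normalize to 0..1.

    Hypothetical helper: x and y are pixel coordinates of the tracked
    probe tip, power_db is the RF power logged at that moment.
    Cells the probe never visited are returned as None.
    """
    cols = math.ceil(width / cell)
    rows = math.ceil(height / cell)
    sums = [[0.0] * cols for _ in range(rows)]
    counts = [[0] * cols for _ in range(rows)]

    for x, y, power_db in samples:
        if not (0 <= x < width and 0 <= y < height):
            continue  # tracker reported a point outside the frame
        r, c = int(y // cell), int(x // cell)
        sums[r][c] += power_db
        counts[r][c] += 1

    # Mean reading per visited cell (simple average of dB values).
    means = [
        [sums[r][c] / counts[r][c] if counts[r][c] else None
         for c in range(cols)]
        for r in range(rows)
    ]

    visited = [m for row in means for m in row if m is not None]
    if not visited:
        return means  # nothing recorded yet

    lo, hi = min(visited), max(visited)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a flat map
    return [
        [None if m is None else (m - lo) / span for m in row]
        for row in means
    ]


def coverage(grid):
    """Fraction of grid cells that have at least one sample."""
    cells = [v for row in grid for v in row]
    return sum(v is not None for v in cells) / len(cells)
```

The normalized grid could then be scaled to 8-bit values, colored with something like OpenCV’s `cv2.applyColorMap`, and blended over the camera frame with `cv2.addWeighted`. The `coverage` figure is one way to approximate what the augmented reality display shows while scanning.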

Even if EMC testing isn’t your thing, this one seems like a lot of fun for the curious. [Charles] has kindly made the sources available on GitHub, so this is a great project to just knock out quickly and start mapping.


In Ningbo, cameras oversee the intersections, and use facial-recognition to shame offenders by putting their faces up on large displays for all to see, and presumably mutter “tsk-tsk”. So it shocked Dong Mingzhu, the chairwoman of China’s largest air conditioner firm, to see her own face on the wall of shame when she’d done nothing wrong. The
