Hardly anyone designs consumer electronics to last longer than a few years. This trend is an ecological disaster, with millions of tons of computers, printers, fax machines and cell phones ending up in landfills. There, the lead and the chemicals used to extract minuscule amounts of gold plating leach into the environment. Turning this around is a monumental task, but reusing some of this waste can help make a difference.

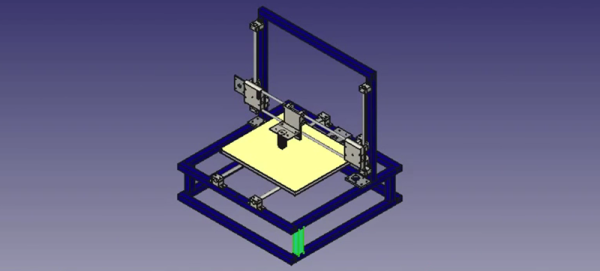

[Masterperson] and [Maaphoo] have been working on a way to turn those tons of e-waste into something useful. They’ve come up with a framework for turning e-waste into 3D printers. With a clever application of Python and FreeCAD macros, this project can generate a model of a 3D printer using motors, shafts, and bearings taken from discarded 2D printers.

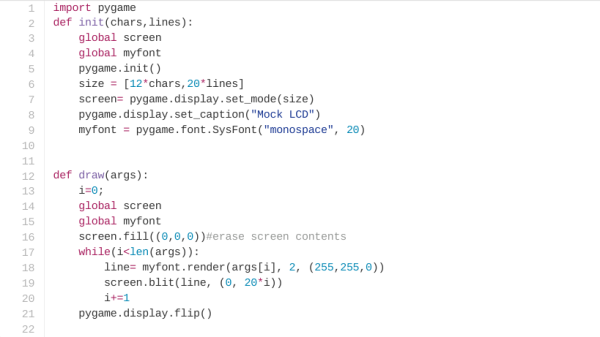

Right now, a printer is configured by listing the parts you have on hand in a configuration file, running a Python macro in FreeCAD, and waiting while the macro generates the parts needed to build a Cartesian bot. The macro also spits out the files for the parts that need to be printed, and it can interface with Plater to arrange those parts efficiently on the build plate of an existing 3D printer.
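To make the configuration step more concrete, here is a minimal, hypothetical sketch of the kind of check a tool like this might run before generating any geometry: given an inventory of salvaged rods and motors, assign a matched pair of rods and one motor to each axis of a Cartesian bot and estimate the travel on each axis. This is not the project's actual code or configuration format, and it does not touch FreeCAD. The names `Rod`, `plan_cartesian_axes`, and `CARRIAGE_ALLOWANCE_MM` are invented for illustration, and the 60 mm allowance for carriages and end mounts is an arbitrary assumption.

```python
from dataclasses import dataclass

# Hypothetical record for a salvaged smooth rod; the real project's
# configuration format may look nothing like this.
@dataclass
class Rod:
    length_mm: float
    diameter_mm: float

# Assumed (arbitrary) length on each axis lost to the carriage and end mounts.
CARRIAGE_ALLOWANCE_MM = 60.0

def plan_cartesian_axes(rods, motors, allowance=CARRIAGE_ALLOWANCE_MM):
    """Assign a matched rod pair and one motor to each of the X, Y and Z axes.

    Returns a dict mapping axis name to (travel_mm, motor_name).
    Raises ValueError if the salvaged parts are insufficient.
    """
    if len(motors) < 3:
        raise ValueError("need at least three motors")

    # Group rod lengths by diameter so each axis gets two rods that fit
    # the same bearings.
    by_diameter = {}
    for rod in rods:
        by_diameter.setdefault(rod.diameter_mm, []).append(rod.length_mm)

    # Pair rods of similar length; a pair is only as useful as its shorter rod.
    pair_lengths = []
    for lengths in by_diameter.values():
        lengths.sort(reverse=True)
        for i in range(0, len(lengths) - 1, 2):
            pair_lengths.append(lengths[i + 1])

    if len(pair_lengths) < 3:
        raise ValueError("need at least three matched rod pairs")

    # Give the longest pairs to X and Y, the shortest to Z.
    pair_lengths.sort(reverse=True)
    axes = {}
    for axis, usable, motor in zip("XYZ", pair_lengths, motors):
        travel = usable - allowance
        if travel <= 0:
            raise ValueError(f"rods for the {axis} axis are too short")
        axes[axis] = (travel, motor)
    return axes

if __name__ == "__main__":
    # Example inventory from two imaginary donor printers.
    rods = [Rod(400, 8), Rod(390, 8), Rod(300, 8), Rod(300, 8),
            Rod(250, 6), Rod(240, 6)]
    motors = ["printer_a_feed", "printer_a_carriage", "printer_b_feed"]
    print(plan_cartesian_axes(rods, motors))
```

With the example inventory, the two 8 mm pairs go to X and Y and the 6 mm pair goes to Z, giving roughly 330 × 240 × 180 mm of travel under the assumed allowance. A real generator would go on to size the printed brackets around whatever rods and bearings it was given, which is where a FreeCAD macro earns its keep.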

It’s a very cool project, but it’s not done yet: the team is looking for help refining the printer designs and possibly expanding beyond a simple Cartesian bot. Anything explicitly designed to pick the meat off 2D printers is a great idea, and turning that meat into real 3D printers is the cherry on top.