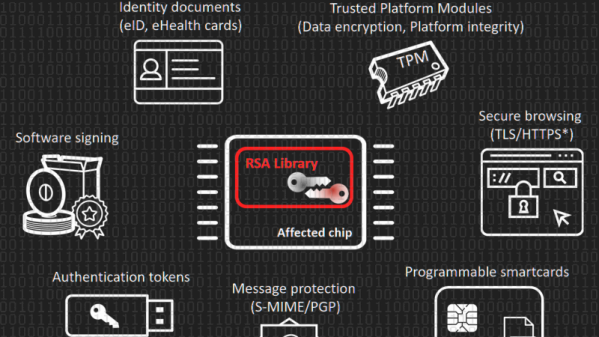

Our own [Brian Benchoff] asked this same question just six months ago under a similar headline. At that time, the answer was no. Or kind of no. Some exploits existed, but they came with preconditions that limited the impact of the bugs found in the Intel Management Engine (IME). But 2017 is an unforgiving year for the blue teams, as a lot of serious bugs have been found throughout the year in virtually every field of computing. Researchers from Positive Technologies report that they found a flaw that allows them to execute unsigned code on computers running the IME. The cherry on top of the cake is that they are able to do it via a USB port acting as a JTAG port. Does this mean the zombie apocalypse is coming?

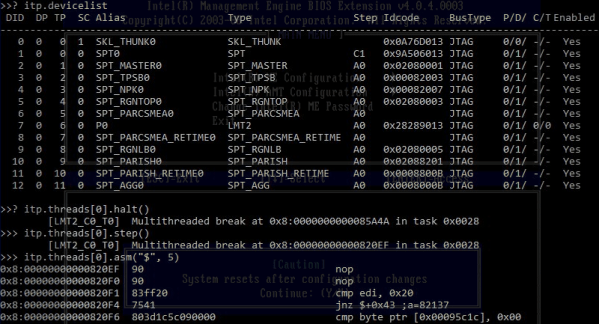

Before the Skylake CPU line, released in 2015, the JTAG interface was only accessible by connecting a special device to the ITP-XDP port found on the motherboard, inside a computer’s chassis. Starting with the Skylake CPU, Intel replaced the ITP-XDP interface and allowed developers and engineers to access the debugging utility via common USB 3.0 ports, accessible from the device’s exterior, through a new technology called Direct Connect Interface (DCI). Basically, DCI provides access to CPU/PCH JTAG via USB 3.0. So the researchers managed to debug the IME processor itself via USB DCI, which is pretty awesome. But USB DCI is turned off by default, as one of the researchers states, which is pretty good news for the ordinary user. So don’t worry too much just yet.