While the Unix operating systems Solaris and HP-UX are still in active development, they’re not particularly popular anymore and are mostly relegated to some enterprise and data center environments They did enjoy a peak of popularity in the 90s during the “wild west” era of windowed operating systems, though. This was a time when there were more than two mass-market operating systems commercially available, with many companies fighting for market share. This led to a number of efforts to get software written for one operating system to run on others, whether that was simply porting software directly or using some compatibility layer. Surprisingly enough it was possible in this era to run an entire instance of Mac System 7 within either of these two Unix operating systems, and this was an officially supported piece of Apple software.

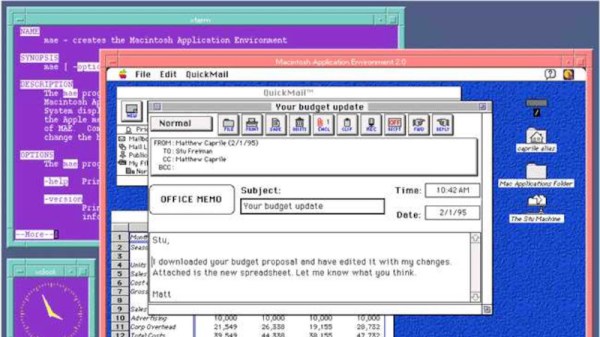

The software was called the Macintosh Application Environment (MAE), and was an effort by Apple to bring Macintosh System 7 applications to various Unix-based operating systems, including Solaris and HP-UX. This was a time before Apple’s OS was Unix-compliant, and MAE provided a compatibility layer that translated Macintosh system calls and application programming interfaces (APIs) into the equivalent Unix calls, allowing Mac software to function within the Unix environments. [Lunduke] outlines a lot of the features of this in his post, including some of the details the “scaffolding” allowing the 68k processor to be emulated efficiently on the hardware of the time, the contents of the user manual, and even the memory management and layout.

What’s really jarring to anyone only familiar with Apple’s modern “walled garden” approach is that this is an Apple-supported compatibility layer for another system. At the time, though, they weren’t the technology giant they are today and had to play by a different set of rules to stay viable. Quite the opposite, in fact: they almost went out of business in the mid-90s, so having their software run on as many machines as possible would have been a perk at the time. While this era did have major issues with cross-platform compatibility, there was some software that attempted to solve these problems that are still in active development today.

Thanks to [Stephen] for the tip!