Building a retro computer of some sort is a rite of passage for many of us, with some building replicas or restorations of old Commodores, Ataris, and other machines from decades past. Others go even further back, to the time of the Intel 8008 or earlier, and a dedicated few will build something completely novel. This project from [3DSage] falls squarely in the last category: a completely DIY computer built component by component from scratch, including the machine code needed to run it.

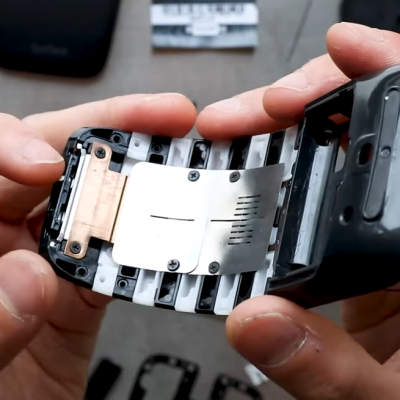

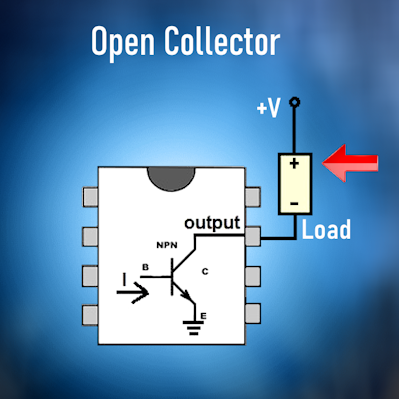

[3DSage] starts with the backbone of every computer: the clock. He first demonstrates how a pair of NOT gates with a set of capacitors can be used as a rudimentary clock pulse, then builds a more refined version with a 555 timer and potentiometer for adjustable rates. Next comes a binary counter, a fundamental part of the memory system for this small computer and the piece that finally lets the circuitry behave like a normal computer. With values stored in memory on a set of switches and the clock stepping through them, the computer can be programmed to do plenty of tasks, just like a modern microcontroller.
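For those who would rather poke at the idea in software first, here is a rough Python sketch of the clock, counter, and switch memory working together. It is not [3DSage]'s design: the function names, the 2-bit address width, and the component values are all made up for illustration, and the frequency formula assumes the 555 is wired in the standard astable configuration, which the write-up does not confirm.

```python
def astable_555_hz(r1_ohms, r2_ohms, c_farads):
    """Approximate output frequency of a 555 timer.

    Assumes the standard astable circuit, where f = 1.44 / ((R1 + 2*R2) * C).
    Turning a potentiometer in place of R2 changes the clock rate.
    """
    return 1.44 / ((r1_ohms + 2 * r2_ohms) * c_farads)


def run_program(switch_rows, address_bits, ticks):
    """Step a binary counter through switch-programmed memory.

    switch_rows:  one integer per row of switches (hypothetical layout)
    address_bits: width of the binary counter
    ticks:        number of clock pulses to simulate
    Returns the value read from memory on each clock pulse.
    """
    size = 1 << address_bits  # a counter with n bits can address 2**n rows
    if len(switch_rows) > size:
        raise ValueError("more switch rows than the counter can address")

    # Rows without switches set read as zero.
    memory = list(switch_rows) + [0] * (size - len(switch_rows))

    counter = 0
    outputs = []
    for _ in range(ticks):
        outputs.append(memory[counter])  # the counter value is the address
        counter = (counter + 1) % size   # each clock pulse advances it, with wraparound
    return outputs


if __name__ == "__main__":
    # Hypothetical values: 1 kOhm, a pot set to 10 kOhm, and a 10 uF capacitor.
    print(round(astable_555_hz(1_000, 10_000, 10e-6), 2), "Hz")
    # Four rows of switches producing a simple "chasing LED" pattern.
    print(run_program([0b0001, 0b0010, 0b0100, 0b1000], address_bits=2, ticks=6))
```

Because the counter wraps around, the program loops forever, which is exactly what you want for something like a blinking LED pattern.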

[3DSage] built this project a few years ago and has used it for real-world applications such as controlling servos, driving LED arrays, and playing music. Although he has to program it by hand using his own machine code, it's a usable computer in many ways. If you want to eschew modernity and build a retro computer in the style of the 1960s, this build shows what it would have been like to put together a similar system in the era when these computers were more common. If you have a switch fetish, you might like to see how real computers worked back then, too.