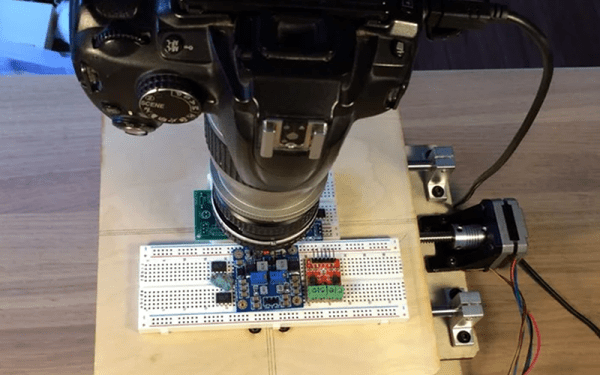

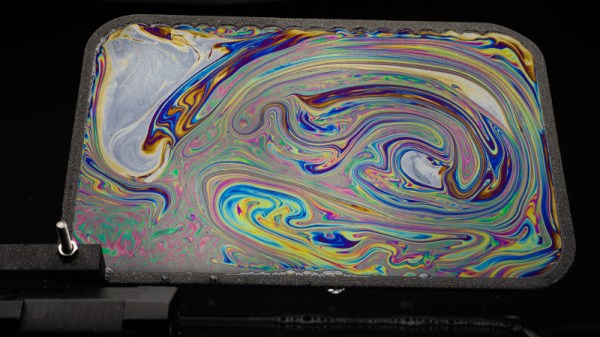

[JBumstead] didn’t want an ordinary microscope. He wanted one that would show the big picture, and not just in a figurative sense, either. The problem, though, is resolution. With the same optics, higher resolution typically means a narrower field of view, which makes sense, right? The more you zoom in, the less area you can see. His solution was to build a microscope from a conventional camera and a motion stage that captures multiple high-resolution photographs, which are then stitched together into a single image. This lets his microscope capture a 90 × 60 mm area at a resolution of about 15 μm. In theory, the resolution might be as good as 2 μm, but it is hard to measure resolution accurately at that scale.
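To put numbers on the resolution-versus-field-of-view trade-off, here is a minimal sketch of how many overlapping frames a tiled scan of a 90 × 60 mm area might need. The sensor size (4000 × 3000 px), object-space sample spacing (2.5 μm per pixel) and 20% overlap between neighbouring frames are hypothetical values chosen for illustration, not figures from [JBumstead]'s build, and the helper names are made up for this example.

```python
import math


def field_of_view_mm(sensor_px: int, sample_um: float) -> float:
    """Object-space width covered by one frame: pixel count x sample spacing."""
    return sensor_px * sample_um / 1000.0


def tiles_along(area_mm: float, fov_mm: float, overlap: float) -> int:
    """Frames needed to span area_mm when neighbours overlap by `overlap` (0..1)."""
    if area_mm <= fov_mm:
        return 1
    step = fov_mm * (1.0 - overlap)  # stage travel between consecutive frames
    # round() suppresses float noise (e.g. 10.000000000000002) before ceil()
    return 1 + math.ceil(round((area_mm - fov_mm) / step, 9))


# Hypothetical camera: 4000 x 3000 px sensor, optics giving 2.5 um per pixel
fov_w = field_of_view_mm(4000, 2.5)  # 10.0 mm per frame horizontally
fov_h = field_of_view_mm(3000, 2.5)  # 7.5 mm per frame vertically
nx = tiles_along(90.0, fov_w, 0.2)
ny = tiles_along(60.0, fov_h, 0.2)
print(nx, ny, nx * ny)  # prints "11 10 110"
```

Under these assumptions, halving the sample spacing to 1.25 μm halves each frame's width and height, and the tile count jumps from 110 to 460, which is why a motion stage and stitching become unavoidable at high resolution.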

As an Arduino project, this isn’t that difficult. It’s akin to a plotter or an XY table for a 3D printer: just some stepper motors and linear motion hardware. However, the base needs to be very stable. The optics side, though, is where we learned the most.
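Since the stage is essentially an XY table, the scan itself reduces to a list of stepper targets. The sketch below is one plausible way to generate them, assuming a serpentine (back-and-forth) row order so the stage never makes a long return move between rows; the source does not say how this microscope orders its shots. The `steps_per_mm` value of 80 and the function name are hypothetical.

```python
from typing import List, Tuple


def serpentine_positions(
    nx: int, ny: int, step_x_mm: float, step_y_mm: float, steps_per_mm: float
) -> List[Tuple[int, int]]:
    """Return (x, y) stage targets in motor steps, scanning rows back and forth."""
    targets = []
    for row in range(ny):
        # Even rows run left to right, odd rows right to left
        cols = range(nx) if row % 2 == 0 else range(nx - 1, -1, -1)
        for col in cols:
            x_steps = round(col * step_x_mm * steps_per_mm)
            y_steps = round(row * step_y_mm * steps_per_mm)
            targets.append((x_steps, y_steps))
    return targets


# Hypothetical stage: 80 steps/mm, 8 mm x 6 mm between frames, 3 x 2 grid
for x, y in serpentine_positions(3, 2, 8.0, 6.0, 80):
    print(x, y)
```

A host script could stream these targets to the Arduino, pausing at each one to let vibrations settle before triggering the camera; that settling time is another reason a stiff, stable base matters.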
