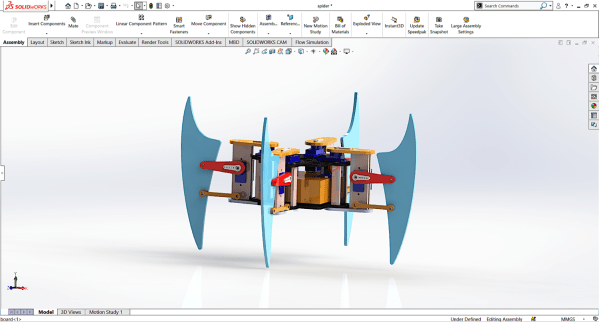

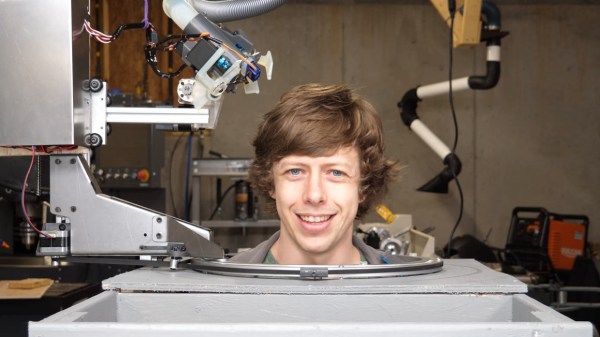

Who doesn’t love robotic spiders? Today’s biomimetic robot comes in the form of Miles, the quadruped spider robot from [_Robox_].

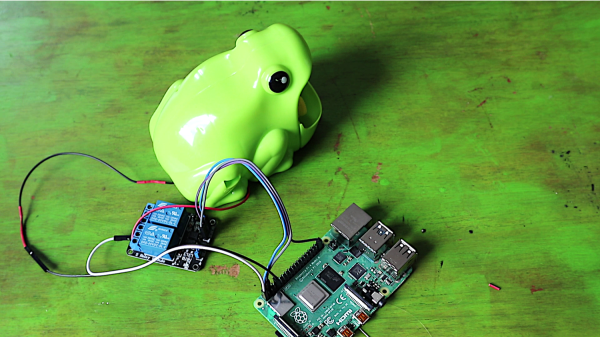

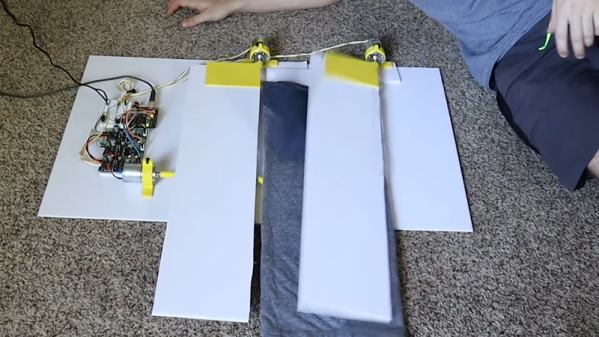

Miles uses twelve servos to control its motion, three per leg, and also carries a standard HC-SR04 ultrasonic distance sensor for basic obstacle avoidance. Twelve servos can draw quite a bit of current, so [_Robox_] spread the load across six LM7805 5 V regulators. [_Robox_] laser cut acrylic sheets for Miles’s body but mentions that 3D printing would work as well.
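Two back-of-the-envelope numbers sit behind that paragraph: how much current each regulator carries, and how an HC-SR04 echo turns into a distance. The sketch below is not taken from [_Robox_]'s code. The 1.5 A figure is the LM7805 datasheet rating; the 0.5 A per-servo draw and the 20 cm avoidance threshold are hypothetical placeholders, since the build doesn't specify them.

```python
import math

# LM7805 datasheet rating: up to 1.5 A output with adequate heatsinking.
LM7805_MAX_A = 1.5
# Hypothetical average draw per servo under load; real hobby servos vary
# widely and can pull much more at stall.
SERVO_RUNNING_A = 0.5
# Approximate speed of sound in air at about 20 °C, in m/s.
SPEED_OF_SOUND_M_S = 343.0


def servos_per_regulator(n_servos: int, n_regs: int) -> int:
    """Worst-case number of servos sharing one regulator if split evenly."""
    return math.ceil(n_servos / n_regs)


def load_per_regulator(n_servos: int = 12, n_regs: int = 6,
                       servo_a: float = SERVO_RUNNING_A) -> float:
    """Estimated current (A) through the busiest regulator."""
    return servos_per_regulator(n_servos, n_regs) * servo_a


def echo_us_to_cm(pulse_us: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Convert an HC-SR04 echo pulse width (microseconds) to distance in cm.

    The pulse covers the round trip, so the one-way distance is half of
    time * speed: us * (m/s) * 1e-6 s/us * 100 cm/m / 2 = us * speed / 20000.
    """
    return pulse_us * speed_m_s / 20000.0


def obstacle_ahead(pulse_us: float, threshold_cm: float = 20.0) -> bool:
    """Hypothetical avoidance rule: react when something is closer than threshold_cm."""
    return echo_us_to_cm(pulse_us) < threshold_cm
```

Under the assumed 0.5 A per servo, two servos share each regulator for about 1 A, inside the 1.5 A rating, and a 1000 µs echo works out to roughly 17 cm. Swap in your own servo's measured current before trusting the margin.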

Miles gets around using inverse kinematics, a popular technique for controlling robotic motion that we’ve seen in a previous project. The Instructable is a little light on the details, but the source code is worth a look. In addition to simply moving around, [_Robox_] developed code to make Miles dance, wave, and take a bow. That’s sure to be a hit at your next virtual show-and-tell.
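For a three-servo leg, a common way to do inverse kinematics is to aim the hip yaw servo at the foot target with `atan2`, then solve the remaining femur/tibia pair as a planar two-link arm with the law of cosines. The sketch below shows that textbook approach; it is not [_Robox_]'s implementation, and the segment lengths, coordinate frame, and function names are hypothetical.

```python
import math

# Hypothetical segment lengths in mm; these are NOT Miles's real dimensions.
COXA_MM = 30.0   # hip yaw joint to femur joint
FEMUR_MM = 50.0  # femur joint to knee joint
TIBIA_MM = 70.0  # knee joint to foot tip


def _clamp(v: float) -> float:
    """Keep acos arguments in [-1, 1] despite floating-point rounding."""
    return max(-1.0, min(1.0, v))


def leg_ik(x, y, z, coxa=COXA_MM, femur=FEMUR_MM, tibia=TIBIA_MM):
    """Joint angles (radians) that put the foot at (x, y, z).

    Assumed frame: origin at the hip yaw joint, x outward, y sideways,
    z up (a foot on the ground below the body has negative z).
    Returns (hip yaw, femur pitch above horizontal, knee angle relative
    to the femur; negative means the tibia is bent downward).
    """
    hip = math.atan2(y, x)              # swing the leg plane toward the target
    r = math.hypot(x, y) - coxa         # horizontal reach beyond the coxa
    d = math.hypot(r, z)                # femur joint to foot, straight line
    if d == 0 or d > femur + tibia or d < abs(femur - tibia):
        raise ValueError("foot target is out of reach")
    # Law of cosines in the femur/tibia/target triangle.
    knee_interior = math.acos(_clamp((femur**2 + tibia**2 - d**2) / (2 * femur * tibia)))
    alpha = math.acos(_clamp((femur**2 + d**2 - tibia**2) / (2 * femur * d)))
    femur_pitch = math.atan2(z, r) + alpha   # knee-up solution
    knee = knee_interior - math.pi
    return hip, femur_pitch, knee


def leg_fk(hip, femur_pitch, knee, coxa=COXA_MM, femur=FEMUR_MM, tibia=TIBIA_MM):
    """Forward kinematics, used here only to check the IK round trip."""
    r = coxa + femur * math.cos(femur_pitch) + tibia * math.cos(femur_pitch + knee)
    z = femur * math.sin(femur_pitch) + tibia * math.sin(femur_pitch + knee)
    return r * math.cos(hip), r * math.sin(hip), z
```

Calling `leg_fk(*leg_ik(80, 20, -60))` should return the original target to within floating-point error. Turning these angles into actual servo commands would still need per-joint offsets and direction signs, which depend on how each servo is mounted.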

By now you’re saying “wait, spiders have eight legs”, and of course you’re right. But that’s an awful lot of servos. Anyway, if you’d rather 3D print your four-legged spider, we have a suggestion.