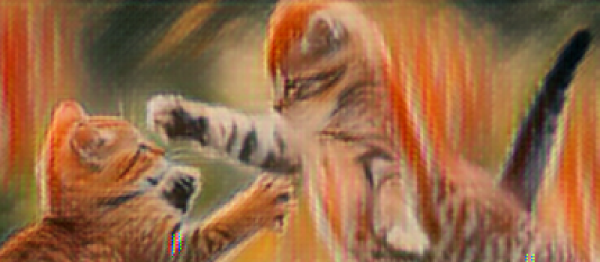

The good people at MIT’s Computer Science and Artificial Intelligence Laboratory [CSAIL] have found a way of tricking Google’s InceptionV3 image classifier into seeing a rifle where there actually is a turtle. This is achieved by presenting the classifier with what is called ‘adversary examples’.

Adversary examples are a proven concept for 2D stills. In 2014 [Goodfellow], [Shlens] and [Szegedy] added imperceptible noise to the image of a panda that from then on was classified as gibbon. This method relies on the image being undisturbed and can be overcome by zooming, blurring or rotating the image.

The applicability for real world shenanigans has been seriously limited but this changes everything. This weaponized turtle is a color 3D print that is reliably misclassified by the algorithm from any point of view. To achieve this, some knowledge about the classifier is required to generate misleading input. The image transformations, such as rotation, scaling and skewing but also color corrections and even print errors are added to the input and the result is then optimized to reliably mislead the algorithm. The whole process is documented in [CSAIL]’s paper on the method.

What this amounts to is camouflage from machine vision. Assuming that the method also works the other way around, the possibility of disguising guns (or anything else) as turtles has serious implications for automated security systems.

As this turtle targets the Inception algorithm, it should be able to fool the DIY image recognition talkbox that Hackaday’s own [Steven Dufresne] built.

Thanks to [Adam] for the tip.