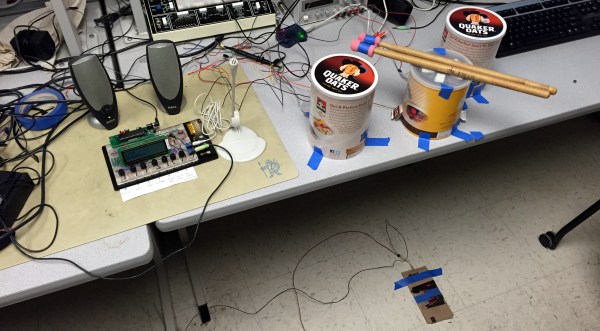

Ithaca-based power trio [Nick, Roshun, and Ian] share a love of music and beating on things with drumsticks. To that end (and for class credit), they built a Digitally-Recordable, User-Modifiable Sound Emitting Tool (DRUMSET) using force-sensing resistors housed in oatmeal cans.

Anyone who has dealt with FSRs knows how persnickety they can be. To avoid false positives, these enterprising beat purveyors suspended a sawed-off 2-liter bottle from the underside of each lid. This directs the force coming in from their patent-pending foam-enhanced drumsticks to the small, round sensing area of the FSR. There’s just enough space between the cap and the FSR to account for the play in the oatmeal can lid drum head when struck.
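The mechanical fix handles most of the false-positive problem, but FSR projects usually add a software guard as well. We don't know how the team's firmware does it, so treat this as a minimal sketch: the 0-1023 reading scale, the threshold, and the refractory window are all assumed values, and `detect_hits` is a hypothetical helper name.

```python
def detect_hits(readings, threshold=200, refractory=5):
    """Return the indices where a new drum hit starts.

    readings   -- ADC samples from one FSR (assumed 0-1023 scale)
    threshold  -- minimum reading that counts as a strike (assumed value)
    refractory -- samples to ignore after a hit, so that one strike
                  and its decaying tail are only counted once
    """
    hits = []
    cooldown = 0
    for i, value in enumerate(readings):
        if cooldown > 0:
            # Still inside the ignore window of the previous hit.
            cooldown -= 1
            continue
        if value >= threshold:
            hits.append(i)
            cooldown = refractory
    return hits


# Two strikes, each followed by a decaying tail (made-up data).
samples = [0, 3, 650, 400, 120, 30, 5, 0, 0, 710, 300, 10]
print(detect_hits(samples))
```

The refractory window is what keeps the tail of a hard hit from registering as a second, softer one.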

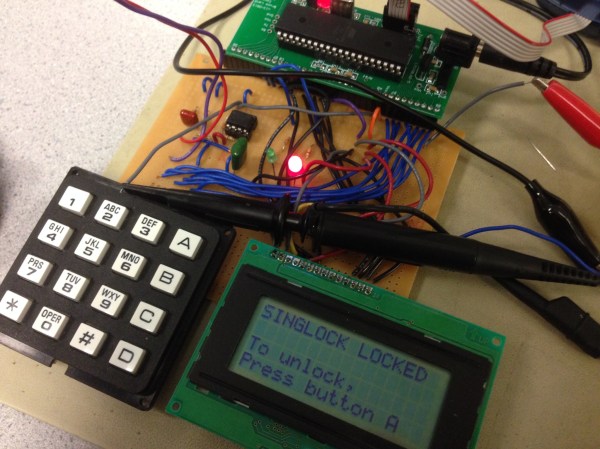

DRUMSET offers different-sounding kits at the push of a momentary switch. At present, there are four pre-programmed kits: the acoustic and electronic foursomes you’d expect, and a kit of miscellaneous sounds like hand claps and wooden claves that sound like something They Might Be Giants would have used on their first album. The fourth is called ‘Smoke on Water’, and is exactly what it sounds like. Should you tire of these, DRUMSET has a program mode with around 20 samples. These can be cycled through on the LCD and assigned to any of the four drums.
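For a rough idea of how kit switching and program mode could fit together, here is a small sketch. It is not the team's code: the kit names follow the description above, but the individual sample names, the `DrumSet` class, and its methods are hypothetical stand-ins.

```python
# Four kits of four sounds each. The sample names are placeholders,
# apart from the hand claps and claves mentioned in the write-up.
KITS = {
    "acoustic": ["kick", "snare", "hi-hat", "tom"],
    "electronic": ["e-kick", "e-snare", "e-hat", "e-tom"],
    "miscellaneous": ["hand clap", "claves", "misc-3", "misc-4"],
    "Smoke on Water": ["riff-1", "riff-2", "riff-3", "riff-4"],
}


class DrumSet:
    def __init__(self, kits):
        self.kits = kits
        self.kit_names = list(kits)
        self.kit_index = 0
        # One sample per drum; copy so edits don't alter the preset.
        self.pads = list(kits[self.kit_names[0]])

    def next_kit(self):
        """What a press of the momentary switch might do."""
        self.kit_index = (self.kit_index + 1) % len(self.kit_names)
        self.pads = list(self.kits[self.kit_names[self.kit_index]])
        return self.kit_names[self.kit_index]

    def assign(self, pad, sample):
        """Program mode: map any sample to one of the four drums."""
        self.pads[pad] = sample


drums = DrumSet(KITS)
drums.next_kit()           # switch to the electronic kit
drums.assign(2, "claves")  # swap the third drum for a claves sample
print(drums.pads)
```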

The microphone is for record mode, and whatever is recorded can be mapped to any drum. The memory limitations of the ‘1284P make for a 0.2 second sample of whatever is barked into the mic, but that’s plenty of time for shouting ‘hack!’ or firing off whatever hilarious bodily sound one can muster. We think this four-track-like functionality of DRUMSET has interesting recording and live performance implications. The team’s future plans include space for longer samples and more robust drum construction (although it is possible to do this without any drums whatsoever). They’d also like to add more drums in case Neil Peart calls. The beat goes on after the break.
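To put that 0.2 second limit in perspective, here is some back-of-the-envelope arithmetic. The ATmega1284P has 16 KB of SRAM; the sampling rates and the 8-bit sample size below are our assumptions, since we don't know what the team actually used.

```python
SRAM_BYTES = 16 * 1024  # ATmega1284P on-chip SRAM


def sample_bytes(seconds, sample_rate_hz, bytes_per_sample=1):
    """Bytes needed to hold an uncompressed mono recording."""
    return round(seconds * sample_rate_hz * bytes_per_sample)


# Candidate sampling rates (assumed, not taken from the project).
for rate in (8000, 16000, 22050):
    size = sample_bytes(0.2, rate)
    share = 100 * size / SRAM_BYTES
    print(f"{rate} Hz: {size} bytes ({share:.1f}% of SRAM)")
```

Whatever the real numbers are, the recording buffer has to share that SRAM with the stack and the rest of the program's variables, so longer samples would likely mean external storage.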