If you haven’t been following [Will Cogley]’s animatronic adventures on YouTube, you’re missing out. He’s got a good thing going, and the latest step is an adorable robot that tracks you with its own eyes.

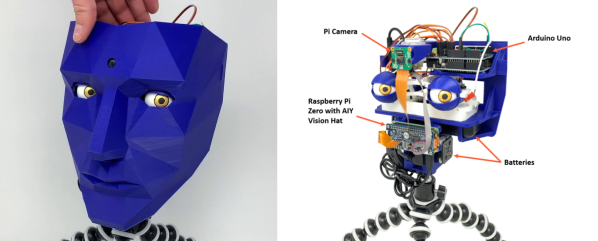

Yes, the cameras are embedded inside the animatronic eyes. That was a lot easier than expected: rather than the redesign he was afraid of, [Will] was able to route the camera cable through his existing animatronic mechanism and only needed to hollow out the eyeball. The tiny camera’s aperture sits nigh-undetectable within the pupil.

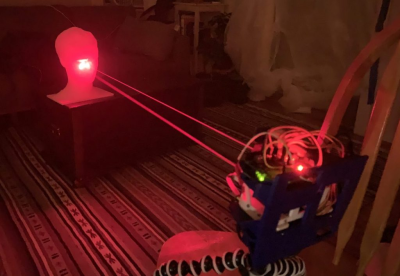

On the software side, face tracking is provided by MediaPipe. It’s currently running on a laptop, but the plan is to embed a Raspberry Pi inside the robot at a later date. MediaPipe tracks any visible face, and the face’s X and Y offset in the image is used to direct the servos. With a dead zone at the center of the image and a little smoothing, the eye motion becomes uncannily natural. [Will] doesn’t say how his setup handles more than one face; most likely it just sticks with the first one identified.
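To make the dead zone and smoothing idea concrete, here is a minimal sketch of one way it could work. It is not [Will]’s code. It assumes the face center arrives in normalized image coordinates (0 to 1, as MediaPipe’s relative bounding boxes use), and because the camera rides inside the eyeball, it treats the offset from the image center as the remaining gaze error and nudges the servo target toward the face each frame. The class name `EyeServoController` and all parameter values (`dead_zone`, `gain`, `smoothing`, `max_angle`) are hypothetical.

```python
from dataclasses import dataclass


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


@dataclass
class EyeServoController:
    """Hypothetical controller: face position in, smoothed servo angles out."""
    dead_zone: float = 0.1    # |error| below this counts as "on target" (assumed value)
    gain: float = 0.2         # fraction of full travel applied per unit error per frame (assumed)
    smoothing: float = 0.3    # exponential smoothing factor, 0 < a <= 1 (assumed)
    max_angle: float = 30.0   # servo travel either side of center, degrees (assumed)
    target_x: float = 0.0
    target_y: float = 0.0
    cmd_x: float = 0.0
    cmd_y: float = 0.0

    def _error(self, coord: float) -> float:
        # Map a normalized coordinate (0..1) to an error in -1..1, center = 0.
        err = (coord - 0.5) * 2.0
        # Inside the dead zone the eye holds still instead of jittering.
        return 0.0 if abs(err) < self.dead_zone else err

    def update(self, face_x: float, face_y: float) -> tuple[float, float]:
        """face_x, face_y: face center in normalized image coordinates."""
        # The camera moves with the eye, so the offset is the remaining gaze
        # error: nudge the target angle toward the face, within servo limits.
        step = self.gain * self.max_angle
        self.target_x = clamp(self.target_x + step * self._error(face_x),
                              -self.max_angle, self.max_angle)
        self.target_y = clamp(self.target_y + step * self._error(face_y),
                              -self.max_angle, self.max_angle)
        # Exponential smoothing eases the servo command toward the target.
        self.cmd_x += self.smoothing * (self.target_x - self.cmd_x)
        self.cmd_y += self.smoothing * (self.target_y - self.cmd_y)
        return self.cmd_x, self.cmd_y
```

With MediaPipe’s legacy Face Detection solution, the face center could be taken from a detection’s `relative_bounding_box` as `xmin + width / 2` and `ymin + height / 2`; servo sign conventions depend on how the servos are mounted.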

Eyes aren’t much by themselves, so [Will] goes further by creating a little robot. The adorable head sits on a 3D-printed tapered roller bearing atop a very simple body. Another printed mechanism lets the head pivot, and both axes are servo-controlled, bringing the total number of motors up to six. Tracking favors eye motion, with the head pivoting to follow in an attempt to create naturalistic movement. Judge for yourself how well it works in the video below. (Jump to 7:15 for the finished product.)
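The “eyes lead, head follows” behavior can be sketched for a single axis as below. This is an illustration under stated assumptions, not the robot’s actual logic: it assumes some overall gaze target in degrees is already known, lets the head ease toward it a little each frame, and has the eyes cover the rest so the combined gaze stays on target. The class `PanCoordinator` and its `head_follow`, `eye_limit`, and `head_limit` values are hypothetical; the same split would apply to the pivot axis.

```python
from dataclasses import dataclass


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


@dataclass
class PanCoordinator:
    """Hypothetical eye-leads, head-follows split for one axis."""
    head_follow: float = 0.05   # fraction of the remaining gap the head closes per frame (assumed)
    eye_limit: float = 30.0     # eye travel relative to the head, degrees (assumed)
    head_limit: float = 60.0    # head travel, degrees (assumed)
    head_angle: float = 0.0

    def update(self, gaze_target: float) -> tuple[float, float]:
        """gaze_target: where the robot should look overall, in degrees."""
        # The head eases toward the target a little each frame...
        self.head_angle += self.head_follow * (gaze_target - self.head_angle)
        self.head_angle = clamp(self.head_angle, -self.head_limit, self.head_limit)
        # ...while the eyes cover whatever the head has not reached yet.
        eye_angle = clamp(gaze_target - self.head_angle, -self.eye_limit, self.eye_limit)
        return eye_angle, self.head_angle
```

On a new target the eyes jump almost all the way immediately, then gradually return toward center as the head catches up, which is the eye-first pattern the text describes.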

We’ve featured [Will]’s animatronic anatomy adventures before, covering everything from beating hearts and full-motion bionic hands to an earlier, camera-less iteration of the eyes in this project.

If you ever find yourself wading into the Uncanny Valley, don’t forget that you can tip us off so everyone can share in the discomfort.
