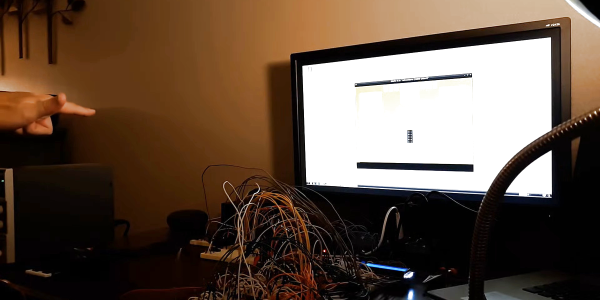

OpenCV is an open source library of computer vision algorithms, its power and flexibility made many machine vision projects possible. But even with code highly optimized for maximum performance, we always wish for more. Which is why our ears perk up whenever we hear about a hardware accelerated vision module, and the latest buzz is coming out of the OpenCV AI Kit (OAK) Kickstarter campaign.

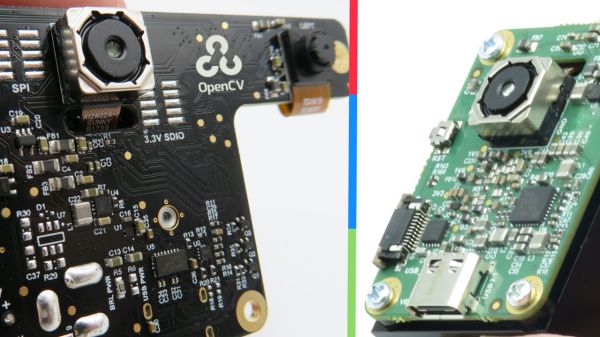

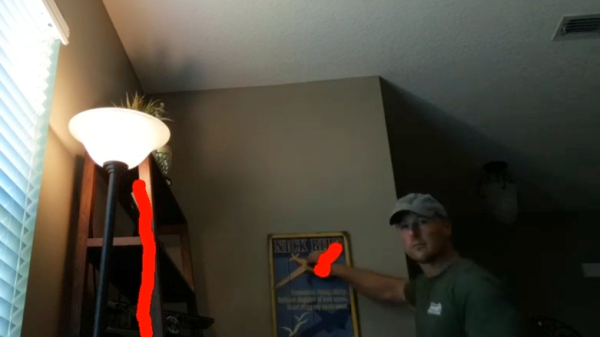

There are two vision modules launched with this campaign. The OAK-1 with a single color camera for two dimensional vision applications, and the OAK-D which adds stereo cameras for that third dimension. The onboard brain is a Movidius Myriad X processor which, according to team members who have dug through its datasheet, have been massively underutilized in other products. They believe OAK modules will help the chip fulfill its potential for vision applications, delivering high performance while consuming low power in a small form factor. Reading over the spec sheet, we think it’s fair to call these “Ultimate Myriad X Dev Boards” but we must concede “OpenCV AI Kit” sounds better. It does not provide hardware acceleration for the entire OpenCV library (likely an impossible task) but it does cover the highly demanding subset suitable for Myriad X acceleration.

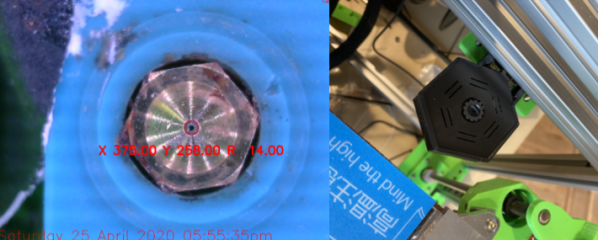

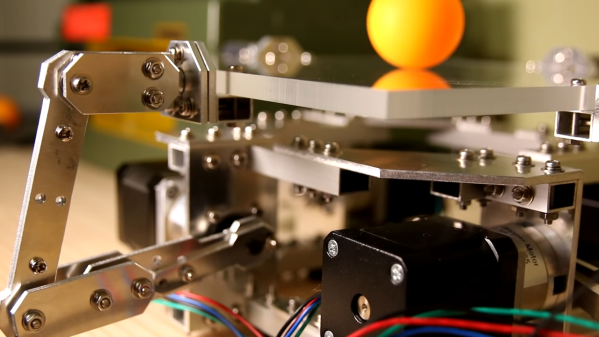

Since the campaign launched a few weeks ago, some additional information have been released to help assure backers that this project has real substance. It turns out OAK is an evolution of a project we’ve covered almost exactly one year ago that became a real product DepthAI, so at least this is not their first rodeo. It is also encouraging that their invitation to the open hardware community has already borne fruit. Check out this thread discussing OAK for robot vision, where a question was met with an honest “we don’t have expertise there” from the OAK team, but then ArduCam pitched in with their camera module experience to help.

We wish them success for their planned December 2020 delivery. They have already far surpassed their funding goals, they’ve shipped hardware before, and we see a good start to a development community. We look forward to the OAK-1 and OAK-D joining the ranks of other hacking friendly vision modules like OpenMV, JeVois, StereoPi, and AIY Vision.