Found yourself with a shiny new NVIDIA Jetson Nano but tired of having it slide around your desk whenever cables get yanked? You need a stand! If only there were a convenient repository of options that anyone could print out to attach this hefty single-board computer to nearly anything. But wait, there is! [Madeline Gannon]’s accurately named jetson-nano-accessories repository supports a wider range of mounting options than you might expect, with modular interconnect-ability to boot!

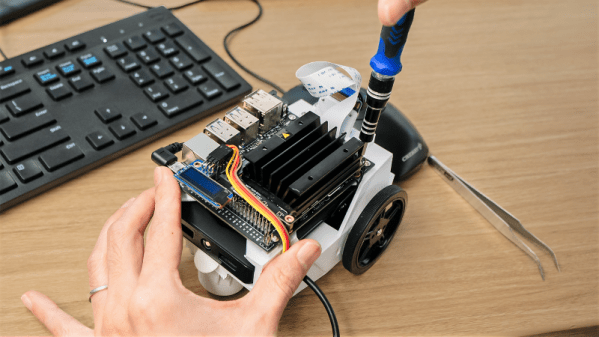

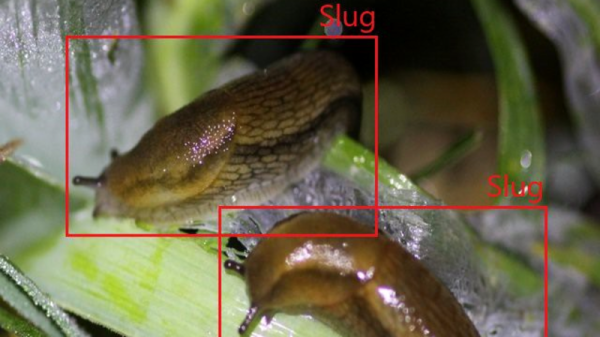

A device like the Jetson Nano is a pretty incredible little System on Module (SOM), more so when you consider that it can be powered by a boring USB battery. Mounted to NVIDIA’s default carrier board, the entire assembly is quite a bit bigger than something like a Raspberry Pi. With a huge amount of computing power and an obvious proclivity for real-time computer vision, the Nano is a device that wants to go out into the world! Enter these accessories.

At their core is an easily printable slot-and-tab modular interlock system that facilitates a wide range of attachments. Some bolt the carrier board to a backplate (like the gardening spike). Others incorporate clips to hold everything together and hang onto a battery or a bicycle. And yes, there are boring mounts for desks, tripods, and more. Have we mentioned we love good documentation? Click into any of the mount types to find more detailed descriptions, assembly directions, and even dimensioned drawings. This is a seriously professional collection of useful kit.