It seems to be a perennial feature of our wider community of hackers and makers that television production companies keep coming up with new ideas for shows featuring us and our skills. Whether it is a reality maker show, a knockout competition, a scavenger hunt, or any other format, there always seems to be a researcher from one TV company or another touting around the scene for participants in some new show.

These shows are entertaining and engaging to watch, and we’ve all probably wondered how we would fare if we had a go ourselves. Fame and fortune await, even if only for one or two episodes, and some participants even find themselves launched into TV careers. Americans may be familiar with [Joe Grand], for instance, and Brits will recognise [Dick Strawbridge].

Being a TV contestant on a series filmed in exotic foreign climes might look like a win-win situation, but it’s worth looking at the experience from another angle. What you see on screen is the show as its producer wants you to see it: fast-paced and entertaining. What you experience as a competitor can be entirely different, and before you fill in that form you need to know about both sides.

A few years ago I was one of a large team of makers that entered the UK version of a very popular TV franchise. The experience left me with an interest in how TV producers craft the public’s impression of an event, and with a profound distrust of much of what I see on my screen. It also prompted me to compare notes with people I’ve met over the years who have been contestants on similar shows, to build a picture of the industry from more than just my own angle. Those people know who they are and I thank them for their input, but because some of them may still be bound by contract, I will keep both their identities and those of the shows they took part in secret. Even so, it’s worth sharing some of the insights gleaned from their experiences so that, should you be interested in having a go yourself, you are forewarned.

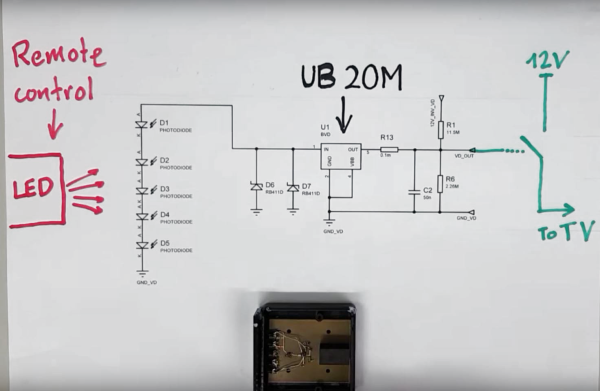

[Marble] wrote his flow for PAL, but it should be fairly easy to modify it to work with NTSC if you’re living in the US or Japan. Sending black and white is “easy”, but to transmit a full color image, you’ll need to read up on color spaces. Check out [Marble]’s
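To give a flavor of what that color-space reading involves: PAL carries a luma signal (Y) that a black-and-white set can display on its own, plus two color-difference signals (U and V). The sketch below is the standard textbook conversion from gamma-corrected RGB to PAL YUV using the BT.601 luma weights and the usual PAL U/V scale factors. It is not taken from [Marble]’s flow; the function name `rgb_to_pal_yuv` is hypothetical, and it assumes RGB values already normalized to the 0 to 1 range.

```python
def rgb_to_pal_yuv(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert gamma-corrected R'G'B' values in [0, 1] to PAL Y'UV.

    Hypothetical helper for illustration: BT.601 luma weights plus
    the conventional PAL scale factors for the color-difference signals.
    """
    # Luma: the only part a black-and-white receiver needs.
    y = 0.299 * r + 0.587 * g + 0.114 * b
    # Color-difference signals, scaled to limit the composite signal's excursion.
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


if __name__ == "__main__":
    # A grey pixel carries (almost) no chroma: U and V come out near zero.
    print(rgb_to_pal_yuv(0.5, 0.5, 0.5))
    # Pure red: low luma, negative U, strongly positive V.
    print(rgb_to_pal_yuv(1.0, 0.0, 0.0))
```

Modifying for NTSC would mean swapping U/V for NTSC’s rotated I/Q axes and a different subcarrier, but the luma calculation stays the same.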
