Everyone knows plastic trash is a problem, with junk filling up landfills and littering beaches. It’s worse because plastic doesn’t dissolve completely; it breaks down into ever smaller pieces, small enough to be ingested by birds and fish, loading them up with indigestible gutfill. Natural disasters compound the trash problem: debris from Japan’s 2011 tsunami washed ashore on Vancouver Island in the months that followed.

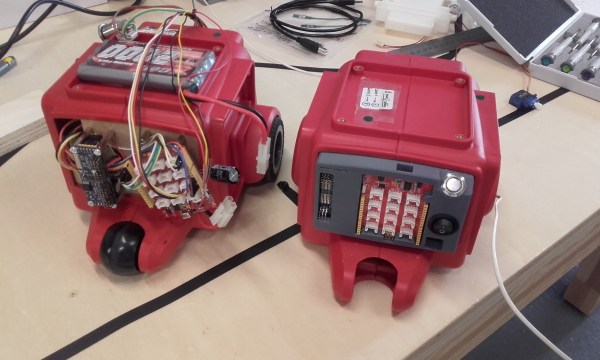

Erin Kennedy was walking along a beach on Toronto Island when she noticed a line of plastic trash that extended as far as the eye could see. As an open source robot builder, her first inclination was to use robots to clean up the mess. A large number of small robots following automated routines might be able to clear a beach faster and more efficiently than a person walking around with a stick and a trash bag.

Erin founded Robot Missions to explore this possibility, with the goal of uniting open-source “makers” and their knowledge of technology with environmentalists who have a clearer understanding of what needs to be done to protect the Earth. The project was a finalist in the Citizen Science category for the 2016 Hackaday Prize, and would fit very nicely in this year’s Wheels, Wings, and Walkers challenge, which closes entries in a week.

Join me after the break for a look at where Robot Missions came from, and what Erin has in store for the future of the program.
