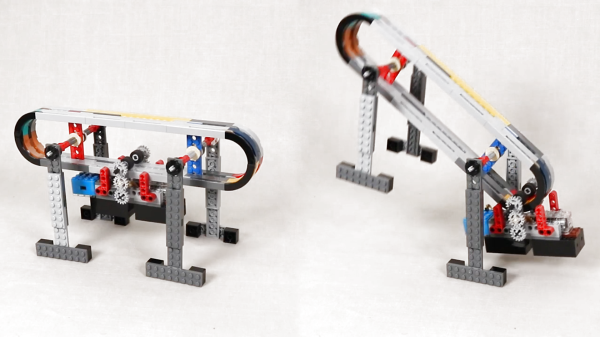

Lego Technic is a wonderful thing, making it easy to experiment with all manner of complicated mechanical assemblies without any difficult fabrication. [touthomme] recently posted one such creation to Reddit: a rather unconventional walker design.

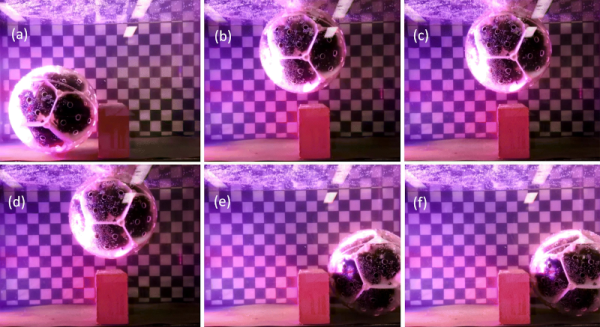

The design dispenses with individually actuated legs entirely. Instead, the two front legs are joined by an axle on which they pivot relative to the body, which is shaped like an oval track. The rear legs are mounted the same way. A motorized carriage travels around the oval track. When the weighted carriage reaches the front of the track, it tips the body forward so that it pivots around the front legs. The entire body flips over, swinging the rear legs forward so that they become the new front legs. The cycle then repeats.
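To make the tipping step a little more concrete, here is a simplified static sketch in Python. It assumes a 2D side view, treats the front leg axle as a fixed pivot, and ignores the legs' own mass, momentum, and friction. The class name, masses, and positions are hypothetical illustrations, not measurements of [touthomme]'s build. Under those assumptions, the body should start to rotate forward once the combined center of mass of body and carriage moves horizontally past the front axle.

```python
from dataclasses import dataclass


@dataclass
class FlipWalker:
    """Simplified 2D side view of the flipping walker (hypothetical values)."""

    body_mass: float      # kg, mass of the oval-track body
    body_com_x: float     # m, horizontal position of the body's own center of mass
    carriage_mass: float  # kg, mass of the weighted motorized carriage
    front_axle_x: float   # m, horizontal position of the front leg axle (the pivot)

    def combined_com_x(self, carriage_x: float) -> float:
        """Horizontal center of mass of body plus carriage for a given carriage position."""
        total = self.body_mass + self.carriage_mass
        return (self.body_mass * self.body_com_x + self.carriage_mass * carriage_x) / total

    def tips_forward(self, carriage_x: float) -> bool:
        """True once the combined center of mass lies ahead of the front axle."""
        return self.combined_com_x(carriage_x) > self.front_axle_x

    def tip_threshold(self) -> float:
        """Carriage position at which the combined center of mass sits directly over the axle."""
        total = self.body_mass + self.carriage_mass
        return (self.front_axle_x * total - self.body_mass * self.body_com_x) / self.carriage_mass


if __name__ == "__main__":
    # Hypothetical numbers: 300 g body centered at x = 0, 200 g carriage, axle 10 cm forward.
    walker = FlipWalker(body_mass=0.3, body_com_x=0.0, carriage_mass=0.2, front_axle_x=0.1)
    for x in (0.0, 0.1, 0.2, 0.3):
        print(f"carriage at {x:.2f} m -> tips forward: {walker.tips_forward(x)}")
    print(f"threshold: {walker.tip_threshold():.3f} m")
```

With these made-up numbers the tipping point lands at 0.25 m. The threshold formula also hints at why the carriage is weighted: a lighter carriage pushes the tipping point further forward, possibly beyond the end of the track.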

The flipping design, inspired by a toy, is something you wouldn't expect to see in nature, as few if any animals have evolved mechanisms capable of continuous rotation like this. It's also unlikely to be a particularly efficient way of getting around, and it would certainly struggle to climb stairs.

Some may claim the method of locomotion is useless, but we don’t like to limit our imaginations in that way. If you can think of a situation in which this walker design would be ideal, let us know in the comments. Alternatively, consider other walking designs for your own builds. Video after the break.