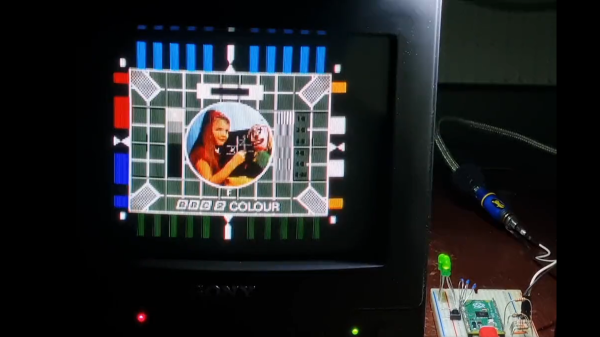

As CRT televisions have faded from use, it’s become important for retro gaming enthusiasts to get their hands on one for that authentic experience. Alongside that phenomenon has been a resurgence of some of the hacks we used to do to CRT TV sets back in the day, as [Adrian’s Digital Basement] shows us when he adds an RGB interface to a mid-1990s Sony Trinitron.

Those of us lucky enough to have lived in Europe at the time were used to TVs with SCART sockets by the mid-1990s, so we no longer needed to plumb in RGB signals, but it appears that Americans were still firmly in the composite age. The TV might have only a composite input, but this hack relies on many of the video processor chips of the era having RGB input pins. If your set has a mains-isolated power supply, these pins can be hooked up with relative ease.

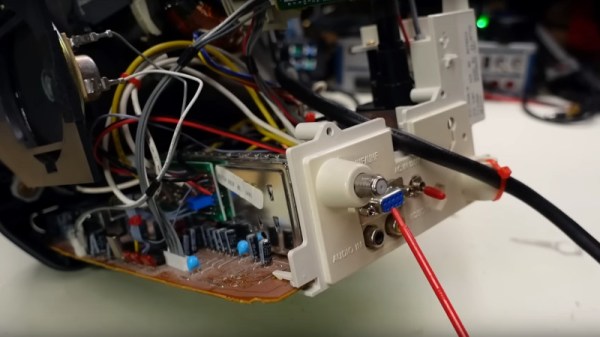

In the case of this little Sony, the RGB lines are used by the integrated on-screen display (OSD). He takes us through the process of pulling out these lines and interfacing to them, and comes up with a 9-pin D connector with the same pinout as a Commodore monitor, wired to the chip through a simple RC network and a sync level divider. There’s also a switch that selects RGB or TV mode by driving the OSD blanking pin on the video processor.
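A sync level divider is typically just a pair of resistors that drops the incoming sync down to a level the video processor will tolerate. As a rough sketch of the arithmetic, here's a small Python helper. The 5 V input, 1 V target and 1 kΩ top resistor are hypothetical values chosen for illustration, not figures from Adrian's build, and the sketch ignores any loading from the chip's input pin.

```python
def divider_output(v_in: float, r_top: float, r_bottom: float) -> float:
    """Unloaded output of a two-resistor divider: v_in * r_bottom / (r_top + r_bottom)."""
    return v_in * r_bottom / (r_top + r_bottom)


def bottom_resistor_for(v_in: float, v_out: float, r_top: float) -> float:
    """Solve the divider equation for the bottom resistor, given a target output voltage."""
    if not 0 < v_out < v_in:
        raise ValueError("target must lie between 0 V and the input voltage")
    return r_top * v_out / (v_in - v_out)


# Hypothetical example values: 5 V sync in, 1 V wanted at the chip,
# 1 kOhm chosen for the top resistor.
r_bottom = bottom_resistor_for(5.0, 1.0, 1000.0)
print(f"bottom resistor: {r_bottom:.0f} ohms")  # bottom resistor: 250 ohms
print(f"check: {divider_output(5.0, 1000.0, r_bottom):.2f} V")  # check: 1.00 V
```

In a real set you'd pick the nearest standard resistor value and check the result against the video processor's datasheet, since the chip's input impedance will pull the level down a little further.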

We like this hack just as much as we did when we were applying it to late-80s British TV sets, and it’s a great way to make an old TV a lot more useful. You can see it in the video below the break, so get out there and find a late-model CRT TV to try it on while stocks last!

Unsurprisingly, this mod has turned up here a few times in the past.