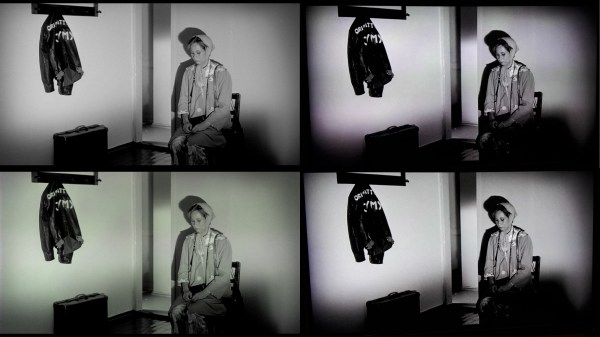

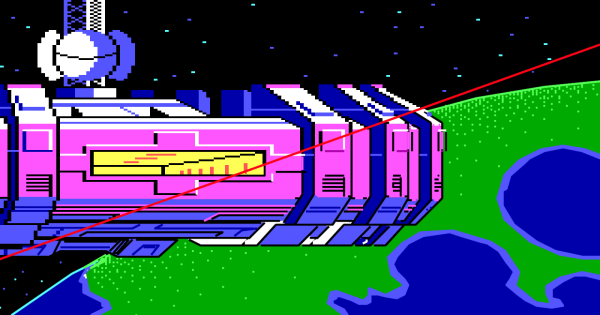

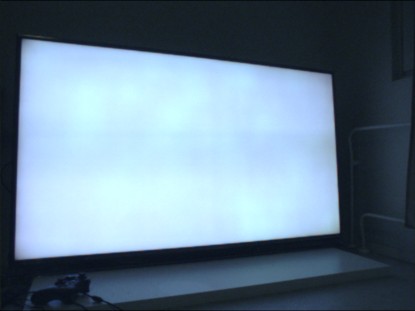

[Pekka Väänänen] has a Panasonic TV with a broken backlight that produces an uneven pink and green tint. It isn’t a huge deal for most films, but the tint is most noticeable in black-and-white ones. So, by modeling the distortion as a function, [Pekka] set out to find an inverse function that corrects the image before it gets to the TV.

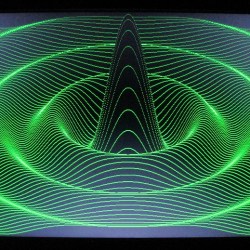

The catch is that the backlight doesn’t emit enough light for some colors, so the correction has to work by dimming the blue and green channels rather than boosting anything. As mentioned earlier, the distortion isn’t even across the screen, so it needs to be captured first and the correction calculated from that.
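To make the dimming idea concrete, here is a minimal sketch in plain Python. It assumes a purely multiplicative model (what the screen shows is the input times a per-pixel, per-channel tint) and linear-light values between 0 and 1. The function names and the model itself are illustrative assumptions, not [Pekka]'s actual code.

```python
def correction_gains(measured):
    """Compute per-pixel, per-channel gains from a capture of a full-white screen.

    measured: rows of (r, g, b) tuples, linear light, all values > 0 (assumed).
    Every gain is <= 1 because the panel cannot be made brighter, only dimmer.
    """
    # The dimmest channel anywhere on screen is the brightest level
    # that every pixel and channel can reach.
    target = min(min(pixel) for row in measured for pixel in row)
    return [[tuple(target / c for c in pixel) for pixel in row] for row in measured]


def apply_gains(frame, gains):
    """Multiply a frame by the gain map, clamping to the displayable range."""
    return [
        [tuple(min(1.0, c * g) for c, g in zip(pixel, gain_pixel))
         for pixel, gain_pixel in zip(row, gain_row)]
        for row, gain_row in zip(frame, gains)
    ]
```

The price of this approach is overall brightness: everything is pulled down to the level of the weakest spot on the panel.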

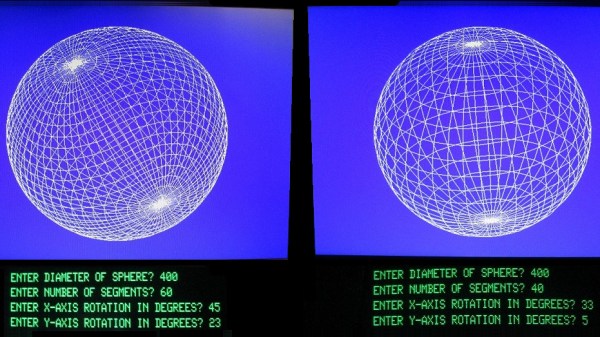

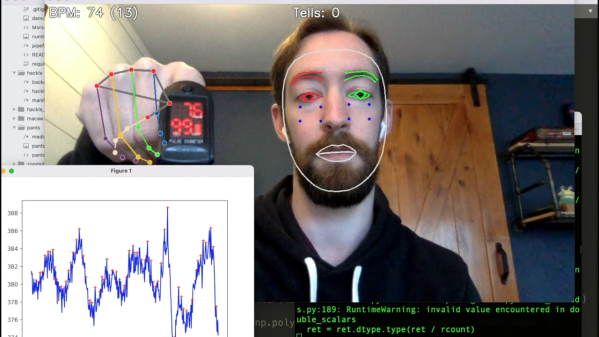

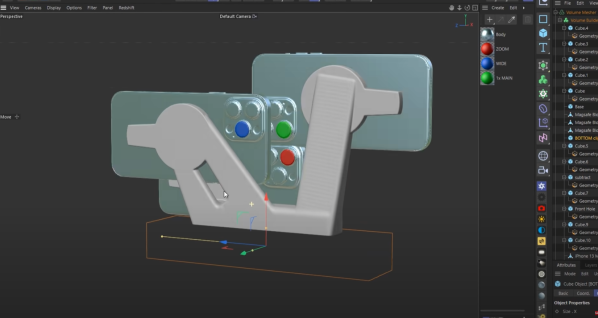

He took a few pictures with his phone, corrected the perspective, and applied a blur. The phone camera adds some distortion of its own, which he doesn’t go into, but the photos work as a first approximation. Next, he set up a webcam and pointed it at the TV, trying to find good gain and offset values with a bit of Python.
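The blur matters because the tint varies slowly across the panel, while a photo of a screen also picks up fine detail such as noise and the pixel grid. As a rough illustration, a separable box blur over a single channel could look like the sketch below. The `box_blur` helper is hypothetical and written in plain Python for clarity; the source doesn't say which blur was used, and a real pipeline would more likely use an image library.

```python
def box_blur(image, radius):
    """Blur a 2D list of floats with a (2*radius + 1) square box filter.

    Pixels outside the image are replaced by the nearest edge pixel.
    """
    width = 2 * radius + 1

    def blur_rows(img):
        out = []
        for row in img:
            n = len(row)
            out.append([
                sum(row[min(max(x + d, 0), n - 1)] for d in range(-radius, radius + 1)) / width
                for x in range(n)
            ])
        return out

    def transpose(img):
        return [list(col) for col in zip(*img)]

    # A box blur is separable: blur along rows, then along columns.
    return transpose(blur_rows(transpose(blur_rows(image))))
```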

From there, it becomes a problem of minimizing the per-pixel difference. Ultimately, he went for a random search rather than annealing or hill climbing. With a function to apply, it was a matter of adding a custom shader to his video player, which includes a live shader editor. He had to hack in support for an external texture, but he was kind enough to include the shader code and the patch in the article.
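For a sense of what a random approach to this minimization can look like, here is a small sketch. It assumes the error is the mean squared per-pixel difference between `gain * source + offset` and a target, and it samples both parameters uniformly from fixed ranges. The function names, ranges, and error measure are assumptions for illustration; in the real setup the error would come from webcam captures rather than a formula.

```python
import random


def per_pixel_error(gain, offset, source, target):
    """Mean squared difference between the adjusted source and the target."""
    return sum((gain * s + offset - t) ** 2 for s, t in zip(source, target)) / len(source)


def random_search(source, target, iterations=20000, seed=0):
    """Try random (gain, offset) pairs and keep the best one seen so far."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    best = (1.0, 0.0)          # start from "no correction"
    best_error = per_pixel_error(best[0], best[1], source, target)
    for _ in range(iterations):
        gain = rng.uniform(0.0, 2.0)     # assumed search range
        offset = rng.uniform(-0.5, 0.5)  # assumed search range
        error = per_pixel_error(gain, offset, source, target)
        if error < best_error:
            best, best_error = (gain, offset), error
    return best, best_error
```

Unlike hill climbing or annealing, nothing here depends on the previous guess, which makes it easy to write and hard to get stuck, at the cost of needing many samples.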

The result is excellent, and it’s a great use for an old TV. But perhaps, in some cases, it might be worth replacing the backlight entirely.