If you want to take good photographs, you need good light. Luckily for us, you can get reels and reels of LEDs from China for pennies, power supplies are ubiquitous, and anyone can solder up a few LED strips. The missing piece of the puzzle is a good enclosure for all these LEDs, and a light diffuser.

[Eric Strebel] recently needed a softbox for some product shots, and came up with this very cheap, very good lighting solution. It’s made from aluminum so it should handle the rigors of photography, and it’s absolutely loaded with LEDs to get all that light on the subject.

The metal enclosure for this softbox is constructed from sheet aluminum, about 22 gauge, folded on a brake press. This is just about the simplest project you can make with a brake and a sheet of metal, with the tabs of the enclosure held together with epoxy. The mounting for this box is simply magnets super glued to the back, which attach to a track lighting fixture. The 5000 K LED strips are held onto the box with 3M Super 77 spray adhesive, and with that the only thing left to do is wire up all the LED strips in series.

But without some sort of diffuser, this is really only a metal box with some LEDs thrown into the mix. To get an even cast of light on his subject, [Eric] is using drawing vellum attached to the metal frame with white glue. The results are fairly striking, and this is an exceptionally light and sturdy softbox for photography.


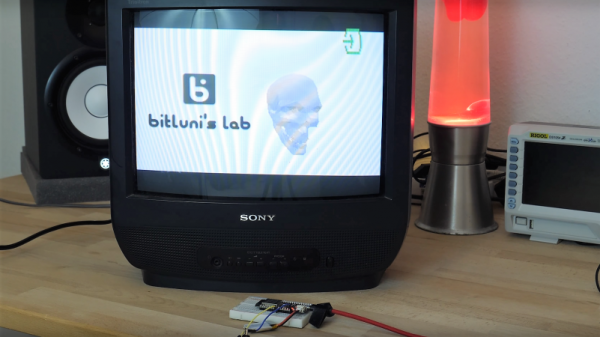

Now we all know that CRTs draw one pixel at a time, drawing from left to right, top to bottom. You can capture this with a regular still camera at a high shutter speed. The light from a TV screen comes from a phosphor coating painted on the inside of the glass screen. Phosphor glows for some time after it is excited, but how long exactly? [Gavin and Dan’s] high framerate camera let them observe the phosphor staying illuminated for only about 6 lines before it started to fade away. You can see this effect at a relatively mundane 2500 FPS.
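To put rough numbers on that, here is a back-of-the-envelope sketch in Python. The post doesn't say which video standard the TV was running, so the nominal NTSC and PAL line rates below are assumptions, as are the function names. It converts "about 6 lines" into microseconds and works out how many scan lines go by during one camera frame at 2500 FPS.

```python
# Back-of-the-envelope CRT timing. The line rates are ASSUMED nominal values
# for NTSC and PAL; the post does not state which standard was filmed.
LINE_RATE_HZ = {"NTSC": 15_734.0, "PAL": 15_625.0}


def line_time_us(line_rate_hz):
    """Time the beam spends on one scan line, in microseconds."""
    return 1e6 / line_rate_hz


def lines_per_camera_frame(line_rate_hz, camera_fps):
    """How many scan lines are drawn during one high-speed camera frame."""
    return line_rate_hz / camera_fps


def glow_time_us(line_rate_hz, lines_lit=6):
    """Rough time the phosphor stays lit before fading, given the
    'about 6 lines' figure observed in the footage."""
    return lines_lit * line_time_us(line_rate_hz)


if __name__ == "__main__":
    for name, rate in LINE_RATE_HZ.items():
        print(
            f"{name}: {line_time_us(rate):.1f} us per line, "
            f"{glow_time_us(rate):.0f} us for 6 lines, "
            f"{lines_per_camera_frame(rate, 2500):.2f} lines per frame at 2500 FPS"
        )
```

Under either assumed standard, six lines works out to a bit under 400 microseconds, and one camera frame at 2500 FPS spans roughly six scan lines. Treat these as order-of-magnitude figures only, since "about 6 lines" is an eyeball estimate from the video.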
