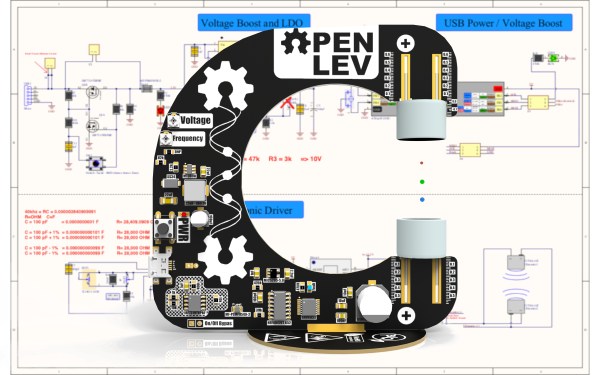

Printed circuit boards used to be green or tan, and invariably hidden. Now they can be artful, structural, and, like electronic convention badges, the entire project. In this vein, we find Open LEV, a horseshoe-shaped desktop bauble bristling with analog circuitry supporting an acoustic levitator. [John Loefler] is a mechanical engineer manager at a college 3D printing lab in Florida, so of course, he needs to have the nerdiest stuff on his workspace. Instead of resorting to a microcontroller, he filled out a parts list with analog components. We have to assume that the rest of his time went into making his PCB show-room ready. Parts of the silkscreen layer are functional too. If you look closely at where the ultrasonic transducers (silver cylinders) connect, there are depth gauges to aid positioning. Now that’s clever.

An Analog IC Design Book Draft

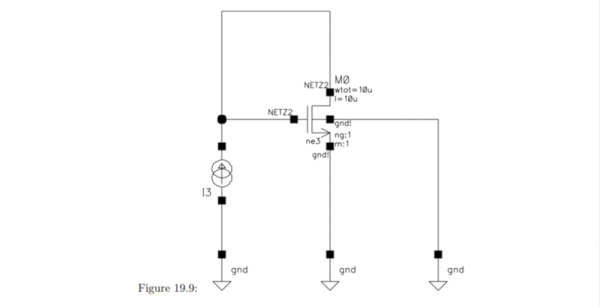

[Jean-Francois Debroux] spent 35 years designing analog ASICs. He’s started a book and while it isn’t finished — indeed he says it may never be — the 180 pages he posted on LinkedIn are a pretty good read.

The 46 sections are well organized, although some are placeholders. There are sections on design flow and the technical aspects of design. Examples range from a square root circuit to a sigma-delta modulator, although some of them are not complete yet. There are also sections on math, physics, common electronics, materials, and tools.

Analog Noise Generator, Fighter Of Other Noises

A chaotic drone of meaningless sound to lull the human brain out of its usual drive to latch on to patterns can at times be a welcome thing. A nonsense background din — like an old television tuned to a dead channel — can help drown out distractions and other invading sounds when earplugs aren’t enough. As [mitxela] explains, this can be done with an MP3 file of white noise, and that is a solution that works perfectly well for most practical purposes. However he found himself wanting a more refined hardware noise generator with analog controls to fine tune the output, and so the Rumbler was born.

The Rumbler isn’t just a white noise generator. White noise has a flat spectrum, but the noise from the Rumbler is closer to Red or Brownian Noise. The different colors of noise have specific definitions, but the Rumbler’s output is really just white noise that has been put through some low pass filters to create an output closer to a nice background rumble that sounds pleasant, whereas white noise is more like flat static.
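The "white noise through low pass filters" idea is easy to try in software. What follows is only a rough sketch, not [mitxela]'s circuit: the 200 Hz cutoff, the two cascaded one-pole stages, the sample rate, and every function name here are assumptions picked for illustration.

```python
import math
import random

SAMPLE_RATE_HZ = 44_100  # assumed audio sample rate
CUTOFF_HZ = 200.0        # assumed cutoff; the real Rumbler is tuned with analog controls


def white_noise(n, seed=0):
    """Return n uniformly distributed samples in [-1, 1] (flat spectrum)."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


def one_pole_lowpass(samples, cutoff_hz, sample_rate_hz):
    """Discrete-time equivalent of a single RC low-pass stage."""
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)  # move a fraction of the way toward the input
        out.append(y)
    return out


def mean_abs_step(samples):
    """Average sample-to-sample jump: a crude proxy for high-frequency content."""
    return sum(abs(b - a) for a, b in zip(samples, samples[1:])) / (len(samples) - 1)


noise = white_noise(SAMPLE_RATE_HZ)  # one second of hiss
stage_one = one_pole_lowpass(noise, CUTOFF_HZ, SAMPLE_RATE_HZ)
rumble = one_pole_lowpass(stage_one, CUTOFF_HZ, SAMPLE_RATE_HZ)

print(f"white noise step size: {mean_abs_step(noise):.4f}")
print(f"rumble step size:      {mean_abs_step(rumble):.4f}")
```

The filtered signal changes far more slowly from sample to sample than the raw noise, which is the software analog of trading hiss for rumble. The output is also much quieter, so a real build would need gain after the filters.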

Why bother with doing this? Mainly because building things is fun, but there is also the idea that this is better at blocking out nuisance sounds from neighboring human activities. By the time distant music (or television, or talking, or shouting) has trickled through walls and into one’s eardrums, the higher frequencies have been much more strongly attenuated than the lower frequencies. This is why one can easily hear the bass from a nearby party’s music, but the lyrics don’t survive the trip through walls and windows nearly as well. The noise from the Rumbler is simply a better fit to those more durable lower frequencies.

[Mitxela]’s writeup has quite a few useful tips on analog design and prototyping, so give it a read even if you’re not planning to make your own analog noise box. Want to hear the Rumbler for yourself? There’s an embedded audio sample near the bottom of the page, so go check it out.

For a truly modern application of white noise, check out the cone of silence for snooping smart speakers.

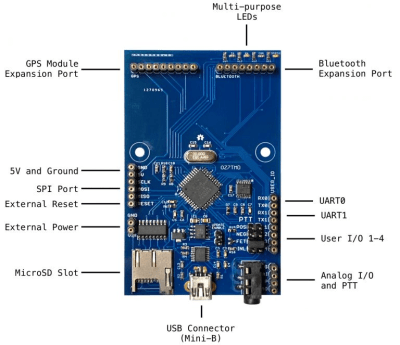

An Open Hardware Modem For The Modern Era

Readers of a certain age will no doubt remember the external modems that used to sit next to their computers, with the madly flashing LEDs and cacophony of familiar squeals announcing your impending connection to a realm of infinite possibilities. By comparison, connecting to the Internet these days is about as exciting as flicking on the kitchen light. Perhaps even less so.

But while we don’t use them to connect our devices to the Internet anymore, that doesn’t mean the analog modem is completely without its use. The OpenModem by [Mark Qvist] is an open hardware and software audio frequency-shift keying (AFSK) modem that recalls some of the charm (and connection speeds) of those early devices.

It’s intended primarily for packet radio communications, and as such is designed to tie into a radio’s Push-to-Talk functionality with a standard 3.5 mm jack connector. Support for AES-128 encryption means it will take a bit more than an RTL-SDR to eavesdrop on your communications. Though if you’re really worried about others listening in, the project page says you could even use the OpenModem over a wired connection as you would have in the old days.
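For a feel of what AFSK means in practice, here is a minimal sketch that maps bits to audio tones and recovers them again. It is not the OpenModem firmware. The 1200 Hz and 2200 Hz tones at 1200 baud are an assumption borrowed from the Bell 202 convention common in packet radio, the sample rate is arbitrary, and real packet framing (NRZI coding, bit stuffing, and so on) is left out entirely.

```python
import math

MARK_HZ = 1200.0         # assumed tone for a 1 bit (Bell 202 convention)
SPACE_HZ = 2200.0        # assumed tone for a 0 bit
BAUD = 1200              # assumed symbol rate
SAMPLE_RATE_HZ = 48_000  # assumed audio sample rate
SAMPLES_PER_BIT = SAMPLE_RATE_HZ // BAUD


def afsk_modulate(bits):
    """Turn bits into audio samples, keeping the phase continuous across bits."""
    phase = 0.0
    out = []
    for bit in bits:
        freq = MARK_HZ if bit else SPACE_HZ
        step = 2.0 * math.pi * freq / SAMPLE_RATE_HZ
        for _ in range(SAMPLES_PER_BIT):
            out.append(math.sin(phase))
            phase = (phase + step) % (2.0 * math.pi)
    return out


def tone_energy(window, freq_hz):
    """Correlate a window of samples against one tone (single-bin DFT)."""
    re = 0.0
    im = 0.0
    for n, sample in enumerate(window):
        angle = 2.0 * math.pi * freq_hz * n / SAMPLE_RATE_HZ
        re += sample * math.cos(angle)
        im += sample * math.sin(angle)
    return re * re + im * im


def afsk_demodulate(samples):
    """Decide each bit by comparing energy at the mark and space tones."""
    bits = []
    for start in range(0, len(samples) - SAMPLES_PER_BIT + 1, SAMPLES_PER_BIT):
        window = samples[start:start + SAMPLES_PER_BIT]
        is_mark = tone_energy(window, MARK_HZ) > tone_energy(window, SPACE_HZ)
        bits.append(1 if is_mark else 0)
    return bits


message = [1, 0, 1, 1, 0, 0, 1, 0]
audio = afsk_modulate(message)
recovered = afsk_demodulate(audio)
print(recovered == message)
```

This demodulator assumes it already knows where each bit starts. A real modem has to recover bit timing from a noisy signal, which is where most of the hard work lives.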

If you just want a simple and reliable way to get a secure AFSK communication link going, the OpenModem looks like it would be a great choice. But more than that, it offers a compelling platform for learning and experimentation. The hardware is compatible with the Arduino IDE, so you can even write your own firmware should you want to spin up your own take on this classic communications device.

The OpenModem is the evolution of the MicroModem that [Mark] developed years ago, and it’s clear that the project has come a long way since then. Of course, if you’re more about the look than the underlying technology, you could always just put a WiFi access point into the case of an old analog modem.

[Thanks to Boofdas for the tip.]

Receive Analog Video Radio Signals From Scratch

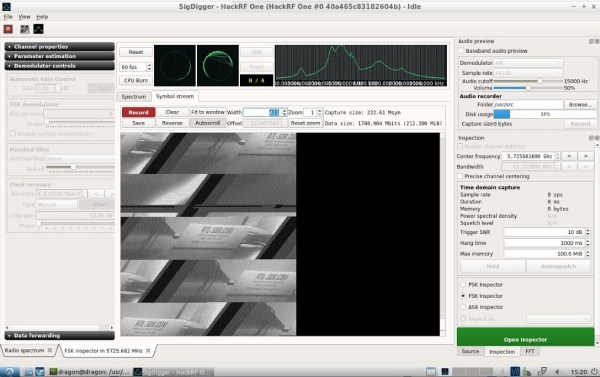

If you’ve been on the RTL-SDR forums lately you may have seen that a lot of work has been going into the DragonOS software. The software-defined radio community has put a lot of effort into this purpose-built Debian-based Linux distribution, which can do a lot of SDR out of the box. The latest and most exciting project coming from them involves a method for using the software to receive and demodulate analog video.

[Aaron]’s video (linked below) demonstrates using a particular piece of software called SigDigger to analyze an incoming analog video stream from a drone using a HackRF. (Of course any incoming analog signal could be used, it doesn’t need to be a drone.) The software shows the various active frequency ranges, allows a user to narrow in on one and then start demodulating it. While it has to be dialed in just right to get anything that doesn’t look like snow, [Aaron] is able to get recognizable results in just a few minutes.

Getting something like this to work completely in software is an impressive feat, especially considering that all of the software used here is free. Granted, this wouldn’t be as easy for a digital signal like the ones most TV stations broadcast, but there’s still a lot of fun to be had. In case you missed the release of DragonOS, we covered it a few weeks ago and it’s only gotten better since then, with this project as just one example.

Matrix Of Resistors Forms The Hot Hands Behind This Thermochromic Analog Clock

If you’re going to ditch work, you might as well go big. A 1,024-pixel thermochromic analog clock is probably on the high side of what most people would try, but apparently [Daniel Valuch] really didn’t want to go to work that day.

The idea here is simple: heat up a resistor by putting some current through it, lay a bit of thermochromic film over it, and you’ve got one pixel. The next part was not so simple: expanding that single pixel to a 32 by 32 matrix.

To make each pixel square-ish, [Daniel] chose to pair up the 220-ohm SMD resistors for a whopping 2,048 components. Adding to the complexity was the choice to drive them with a 1,024-bit shift register made from discrete 74LVC1G175 flip flops. With the Arduino Nano and all the other support components, that’s over 3,000 devices with the potential to draw 50 amps, were someone to be foolish or unlucky enough to turn on every pixel at once. Luckily, [Daniel] chose to emulate an analog clock here; that led to additional problems, like dealing with cool-down lag in the thermochromic film when animating the hands, which had to be dealt with in software.
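The numbers above are easy to sanity check. The sketch below is back-of-the-envelope arithmetic plus a toy model of loading a frame through a serial shift register. The 5 V supply and the assumption that each pixel's two resistors are wired in parallel are guesses made for illustration, not details from [Daniel]'s design, and `load_frame` is a hypothetical helper.

```python
ROWS, COLS = 32, 32
RESISTORS_PER_PIXEL = 2
RESISTOR_OHMS = 220.0
SUPPLY_VOLTS = 5.0  # assumption: supply voltage is not given in the text

PIXELS = ROWS * COLS                      # one flip-flop stage per pixel
RESISTORS = PIXELS * RESISTORS_PER_PIXEL
FLIP_FLOPS = PIXELS                       # a 1,024-bit register needs 1,024 stages

# Assumption: the two resistors of a pixel sit in parallel across the supply.
PIXEL_OHMS = RESISTOR_OHMS / RESISTORS_PER_PIXEL
PIXEL_AMPS = SUPPLY_VOLTS / PIXEL_OHMS
WORST_CASE_AMPS = PIXEL_AMPS * PIXELS     # every pixel switched on at once


def load_frame(frame_bits):
    """Clock bits into a serial shift register, one bit per clock pulse.

    Each new bit enters at position 0 and pushes everything else along,
    so the first bit sent ends up at the far end of the chain.
    """
    register = [0] * len(frame_bits)
    for bit in frame_bits:
        register = [bit] + register[:-1]
    return register


print(f"pixels: {PIXELS}, resistors: {RESISTORS}, flip-flops: {FLIP_FLOPS}")
print(f"resistors + flip-flops: {RESISTORS + FLIP_FLOPS}")
print(f"worst-case current: {WORST_CASE_AMPS:.1f} A")
print(load_frame([1, 0, 0]))
```

Under those assumptions the worst case comes out a little under 50 A, in the same ballpark as the figure quoted above, and resistors plus flip-flops alone already pass 3,000 parts. The shift register model also shows why a frame has to be sent in reverse order if the first pixel sits at the start of the chain.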

We’ve seen other thermochromic displays before, including recently with this temperature and humidity display. This one may not be the highest resolution display out there, but it’s big and bold and slightly dangerous, and that makes it a win in our book.

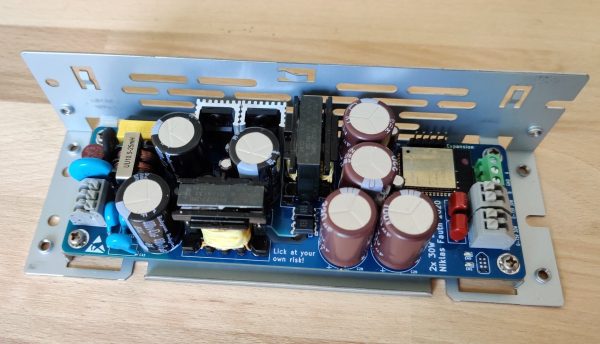

Go The Extra Mile For Your LED Driver

Addressable RGB LED strips may be all the rage, but that addressability can come at a cost. If instead of colors you expect to show shades of white, you may find the less flickery, wider-spectrum light from a string of single-color LEDs and a nice supply desirable. Of course there are many ways to drive such a strip, but this is Hackaday, not Aliexpressaday (though we may partake in the sweet nectar of e-commerce). [Niklas Fauth] must have really had an itch to scratch, because to get the smoothest fades for his single-color LED strips, he built an entire software-defined dual 50W switched-mode AC power supply from scratch. He calls it his “first advanced AC design” and we are suitably impressed.

Switched-mode power supplies are an extremely common way of converting arbitrary incoming AC or DC voltage into a DC source. A typical project might use a fully integrated solution in the form of a drop-in module or wall wart, or a slightly less integrated controller IC and passives. But [Niklas] went all the way and designed his from scratch. For control, he uses the ubiquitous ESP32 to drive the control nodes of the supply, which brings the added bonus of wireless connectivity (one’s blinkenlights must always be orchestrated). We can’t help but notice the PCBA also exposes RS485 and CAN transceivers which seem to be unused so far, perhaps for a future expansion into wired control?