“The brickings will continue until the printer sales improve!” This whole printer-bricking thing seems to be getting out of hand with the news this week that a firmware update caused certain HP printers to go into permanent paper-saver mode. The update was sent to LaserJet MFP M232-M237 models (opens printer menu; checks print queue name; “Phew!”) on March 4, and was listed as covering a few “general improvements and bug fixes,” none of which seem very critical. Still, some users reported not being able to print at all after the update, with an error message suggesting printing was being blocked thanks to non-OEM toner. This sounds somewhat similar to the bricked Brother printers we reported on last week (third paragraph).

container: 11 Articles

Putting A Pi In A Container

Docker and other containerization applications have changed a lot about the way that developers create new software as well as how they maintain virtual machines. Not only does containerization reduce the system resources needed for something that might otherwise be done in a virtual machine, but it standardizes the development environment for software and dramatically reduces the complexity of deploying on different computers. Docker has some other tricks up its sleeve as well, and this project called PI-CI uses it to containerize an entire Raspberry Pi.

The Pi container emulates an entire Raspberry Pi from the ground up, allowing anyone who wants to deploy software on one to test it out without needing actual hardware. All of the configuration can be done from inside the container. When all the setup is completed and the desired software installed in the container, the container can be converted to an .img file that can be put on a microSD card and installed on real hardware, with support for the Pi models 3, 4, and 5. There’s also support for using Ansible, an automation tool that makes administering a cluster or array of computers easier.

Docker can be an incredibly powerful tool for developing and deploying software, and tools like this can make the process as straightforward as possible. It does have a bit of a learning curve, though, since sharing operating system tools instead of virtualizing hardware can take a bit of time to wrap one’s mind around. If you’re new to the game, take a look at this guide to setting up your first Docker container.

A Guide To Running Your First Docker Container

While most of us have likely spun up a virtual machine (VM) for one reason or another, venturing into the world of containerization with software like Docker is a little trickier. While the tools Docker provides are powerful, maintain many of the benefits of virtualization, and don’t use as many system resources as a VM, it can be harder to get the hang of setting up and maintaining containers than it generally is to run a few virtual machines. If you’ve been hesitant to try it out, this guide to getting a Docker container up and running is worth a look.

The guide goes over the basics of how Docker works to share system resources between containers, including some discussion on the difference between images and containers, where containers can store files on the host system, and how they use networking resources. From there the guide touches on installing Docker within a Debian Linux system. But where it really shines is demonstrating how to use Docker Compose to configure a container and get it running. Docker Compose is a tool that reads a single file describing a number of containers and their options, which makes deploying those containers to other machines fairly straightforward, and understanding it is key to making your experience learning Docker a smooth one.
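To make the idea of one file describing several containers a little more concrete, here is a minimal Python sketch that builds a Compose-style configuration and prints it. This is not the configuration from the guide: the helper names (`compose_service`, `build_compose`), the `example/web-app` image, the port, and the paths are all placeholders invented for illustration. It also relies on the fact that JSON is valid YAML, so the printed text should be usable as a Compose file, though that is worth checking against your own Docker version.

```python
import json


def compose_service(image, ports=None, volumes=None, restart="unless-stopped"):
    """Build one entry for the `services` mapping of a Compose file.

    Hypothetical helper for illustration; not part of Docker or the guide.
    """
    service = {"image": image, "restart": restart}
    if ports:
        # Compose expects "HOST:CONTAINER" strings for published ports.
        service["ports"] = [f"{host}:{container}" for host, container in ports]
    if volumes:
        # Bind mounts use "HOST_PATH:CONTAINER_PATH" strings.
        service["volumes"] = [f"{src}:{dst}" for src, dst in volumes]
    return service


def build_compose(services):
    """Wrap the service definitions in the top-level Compose structure."""
    return {"services": services}


# Two containers described in one place. The "web" image name, port,
# and paths are placeholders, not a real project.
config = build_compose({
    "web": compose_service(
        image="example/web-app:latest",
        ports=[(8000, 8000)],
        volumes=[("./data", "/app/data")],
    ),
    "cache": compose_service(image="redis:7"),
})

# JSON is a subset of YAML, so this text can be saved as a Compose file.
compose_text = json.dumps(config, indent=2)
print(compose_text)
```

Saving the output to a file and pointing `docker compose -f <file> up -d` at it should start both containers, and copying that same file to another machine reproduces the setup there, which is the portability the guide is getting at.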

While the guide goes through setting up a self-hosted document management program called Paperless, it’s pretty easy to expand this to other services you might want to host on your own as well. For example, the DNS-level ad-blocking software Pi-Hole, which is generally run on a Raspberry Pi, can be containerized and run on a computer or server you might already have in your home, freeing up your Pi to do other things. And although it’s a little more involved, you can always build your own containers too, as our own [Ben James] discussed back in 2018.

Organizational Inspiration From The Discount Tool Company

When a tool is needed to get a job done quickly, or only a handful of times, it’s great to have a local “discount tool” company nearby for a working, if often low-quality, solution to whatever problem might arise. While there are some gems, most of these tools won’t last through heavy, sustained use like their more expensive counterparts will. On the other hand, there are other things to be had at these discount shops, such as inspiration for tackling a storage problem.

This particular storage system comes from Harbor Freight, and uses a set of linked crosshairs, the center of which is hollowed out. A set of movable compartments sits on top with feet that can interlock inside the crosshairs. This allows much more efficient use of space within the toolboxes, but [Alan] wanted it to be useful for more than that. He designed and implemented the Storage Case Base Template (SCBT) which allows for a container of any size to be fitted with a similar crosshair network.

With this non-proprietary system implemented and printed, the original goal of reducing the clutter in [Alan]’s workspace was accomplished. The 3D printing files can be modified easily for any space, and are available both on Thingiverse and Printables. For some other ways of packing a lot into a small space, we featured this tiny workshop a while back that’s packed with storage hacks.

Automate The Freight: Autonomous Ships Look For Their Niche

It is by no means an overstatement to say that life as we know it would grind to a halt without cargo ships. If any doubt remained about that fact, the last year and a half of supply chain woes put that to bed; we all now know just how much of the stuff we need — and sadly, a lot of the stuff we don’t need but still think we do — comes to us by way of one or more ocean crossings, on vessels specialized to carry everything from shipping containers to bulk liquid and solid cargo.

While the large and complex vessels that form the backbone of these globe-spanning supply chains are marvelous engineering achievements, they’re still utterly dependent on their crews to make them run efficiently. So it’s not at all surprising to learn that some shipping lines are working on ways to completely automate their cargo ships, to reduce their exposure to the need for human labor. On paper, it seems like a great idea — unless you’re a seafarer, of course. But is it a realistic scenario? Will shipping companies realize the savings that they apparently hope for by having fleets of unmanned cargo vessels plying the world’s oceans? Is this the right way to automate the freight?


Hackaday Links: August 1, 2021

Amateur radio operators have a saying: When all else fails, there’s ham radio. And that’s true, at least to an extent: knock out the power, tear down the phone lines, and burn up all the satellites in orbit, and there will still be hams talking about politics on 40 meters. The point is, as long as the laws of physics don’t change, hams will figure out a way to send and receive messages. In honor of that fact, the police in the city of Pune in Maharashtra, India, make it a point to exchange messages with their headquarters using Morse code once a week. The idea is to maintain a backup system, in case they can’t get a message through any other way. It’s a good idea, especially since they rotate all their radio operators through the Sunday morning ritual. We can’t imagine that most emergency services dispatchers would be thrilled about learning Morse, though.

Just because you’re a billionaire with a space company doesn’t mean you’re an astronaut. At least that’s the view of the US Federal Aviation Administration, which issued guidelines pretty much while Jeff Bezos and his merry band of cohorts were floating about above the 100-km high Kármán line in a Blue Origin “New Shepard” rocket. The FAA guidelines make it clear that those making the trip need to have actually done something to qualify as an astronaut, by “demonstrated activities during flight that were essential to public safety, or contributed to human space flight safety.” That’s good news to the “Old Shepard”, who clearly was in control of “Freedom 7” during the Mercury program. But the Bezos brothers, teenager Oliver Daemen, and Wally Funk, one of the “Mercury 13” group of women who trained to be NASA astronauts but never got to fly, were really just along for the ride, as the entire flight was automated. It doesn’t take away from the fact that they’ve been to space and you haven’t, of course, but they can’t officially call themselves astronauts. This goes to show that even billionaires can just be ballast too.

Good news, everyone — if you had anything that was being transported aboard the Ever Given, your stuff is almost there. The Suez Canal-occluding container ship finally made it to its original destination in Rotterdam, approximately four months later than originally predicted. After plugging up the vital waterway for six days last March, the ship along with her cargo and her crew were detained in Egypt’s Great Bitter Lake, perhaps the coolest sounding body of water in the world next to the Dead Sea. Legal squabbling ensued at that point, all the while rendering whatever was in the 20,000-odd containers aboard the ship pretty much pointless. We’d imagine that even with continuous power, whatever was in the refrigerated containers must be pretty nasty by now, so there’s probably a lot of logistics and clean-up left to sort out.

I have to admit that I have a weird love of explosive bolts. I don’t know what it is, but the idea of fasteners engineered to fail in a predictable way under the influence of pyrotechnic charges just tickles something in me. I mean, I even wrote a whole article on the subject once. So when I came across this video explaining how the Space Shuttles were held to the launch pad, I really had to watch it. Surprisingly, the most interesting part of this story was not the explosive aspect, but the engineering problem of supporting the massive vehicle on the launch pad. For as graceful as the Shuttles seemed once they got into orbit, they really were ungainly beasts, especially strapped to the external fuel tank and boosters. The scale of the eight frangible nuts used to secure the boosters to the pad is just jaw-dropping. We also liked the idea that NASA decided to catch the debris from the explosions in a container filled with sand.

Cloud-Based Atari Gaming

While the Google Stadia may be the latest and greatest in the realm of cloud gaming, there are plenty of other ways to experience this new style of gameplay, especially if you’re willing to go a little retro. This project, for example, takes the Atari 2600 into the cloud for a nearly-complete gaming experience that is fully hosted on a server, including the video rendering.

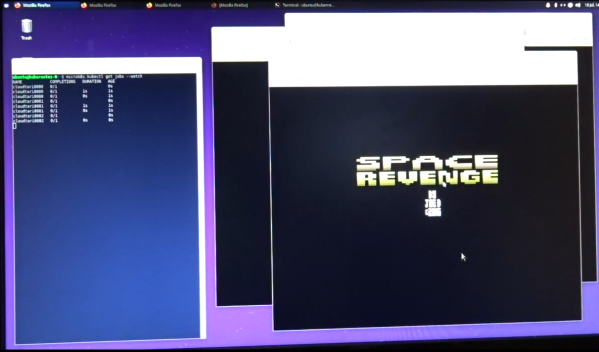

[Michael Kohn] created this project mostly as a way to get more familiar with Kubernetes, a piece of open-source software which helps automate and deploy container-based applications. The setup runs on two Raspberry Pi 4s which can be accessed by pointing a browser at the correct IP address on his network, or by connecting to them via VNC. From there, the emulator runs a specific game called Space Revenge, chosen for its memory requirements and its freedom from copyright encumbrance. There are some limitations in that the emulator he’s using doesn’t implement all of the Atari controls, and that the sound isn’t available through the remote desktop setup, but it’s impressive nonetheless.

[Michael] also glosses over this part, but he wrote the Atari emulator himself “as quickly as possible” so he could focus on the Kubernetes setup. This is impressive in its own right, and of course he goes beyond this to show exactly how to set up the cloud-based system on his GitHub page. He also thinks there’s potential for a system like this to run an NES setup. If you’re looking for something a little more modern, though, it is possible to set up a cloud-based gaming system with a Nintendo Switch as well.