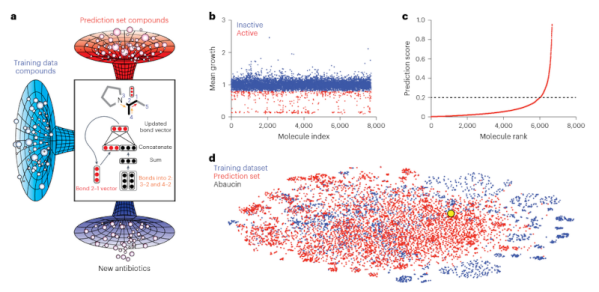

Researchers in Canada and the United States have used deep learning to identify an antibiotic that can attack a drug-resistant microbe, *Acinetobacter baumannii*, which can infect wounds and cause pneumonia. According to the BBC, a paper in Nature Chemical Biology describes how the researchers trained the model on data measuring how known drugs act against this tough bacterium. The algorithm then predicted the effect of 6,680 compounds for which there was no data on their effectiveness against the germ.

In an hour and a half, the program narrowed the list to 240 promising candidates. Lab testing found that nine of these were effective and that one, now called abaucin, was extremely potent. Running lab tests on 240 compounds still sounds like a lot of work, but it is far better than testing nearly 6,700.
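Once a model has scored every compound, the screening step boils down to ranking by predicted score and keeping the best few for the lab. The sketch below shows only that shortlisting step. It assumes a trained model has already produced one score per compound; the function names and compound IDs are hypothetical, and this is not the researchers' actual pipeline.

```python
import heapq


def shortlist(scores, k):
    """Return the IDs of the k compounds with the highest predicted score.

    `scores` maps a hypothetical compound ID to a model's predicted
    likelihood of inhibiting the bacterium; the model itself is not shown.
    """
    top = heapq.nlargest(k, scores.items(), key=lambda item: item[1])
    return [compound_id for compound_id, _ in top]


def fraction(part, whole):
    """Share of `whole` represented by `part`, e.g. compounds sent to the lab."""
    return part / whole


if __name__ == "__main__":
    # Toy scores for illustration only, not real predictions.
    toy_scores = {"cmpd-a": 0.12, "cmpd-b": 0.91, "cmpd-c": 0.47, "cmpd-d": 0.05}
    print(shortlist(toy_scores, 2))

    # Figures from the article: 240 of 6,680 compounds tested, 9 of 240 effective.
    print(f"screened: {fraction(240, 6680):.1%}")
    print(f"hit rate: {fraction(9, 240):.2%}")
```

Using the article's numbers, the shortlist covers roughly 3.6% of the library, and nine actives out of 240 works out to a hit rate of 3.75%.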

Interestingly, the new antibiotic seems to be effective only against the target microbe, which is a plus. It isn't available for people yet and may not be for some time, drug testing being what it is. Still, this is a great example of how machine learning can augment human brainpower, letting scientists and others focus their effort where it matters most.

The WHO has identified *Acinetobacter baumannii* as one of the major superbugs threatening the world, so a weapon against it would be very welcome. Here's hoping this technique will drastically cut the time it takes to develop new drugs. It also makes you wonder whether there are other fields where AI techniques could quickly cull the alternatives, allowing humans to focus on the most promising candidates.

Want to catch up on machine learning algorithms? Google can help. Or dive into an even longer course.