For many of us who were in college at the time, the 1989 release of Will Wright’s classic SimCity sounded the death knell of our GPAs. Being able to create virtual worlds and then smite them with a tornado or a kaiju attack was the stuff of a procrastinator’s dreams. We always liked the industrial side of the game best, and took great pains laying out the factory zones, power plants, and seaports. Those of a similar bent will be happy to know that Maxis, the studio behind the game, had a business simulations division, and one of its products was a complete refinery simulator built for Chevron and called, unsurprisingly, SimRefinery. The game, which bears a striking resemblance to SimCity, has been recovered and is now available for download, which means endless procrastination by playing virtual petrochemical engineer is only a mouse click away.

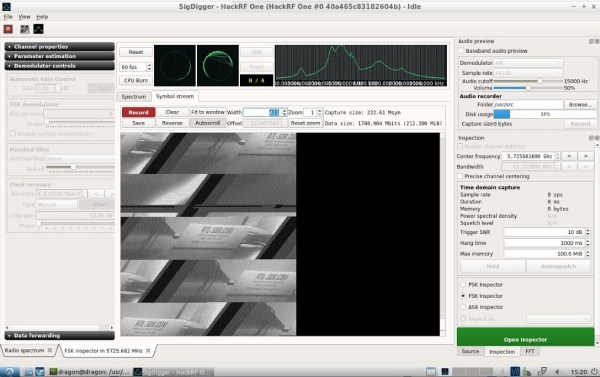

Speaking of time wasters, we stumbled upon another simulation this week that sucked away a couple of hours of productivity. As RTL-SDR.com reports, a YouTuber called Information Zulu has a 24/7 live stream showing arrivals and departures at Los Angeles International Airport. That may sound boring, but the cameras watching the runways are virtual, and the planes are animated from ADS-B data scooped up by an RTL-SDR dongle. We pinged Information Zulu and asked for a rundown of the gear behind the system, but never heard back. If we do, we’ll post a full article on what we learn, because the level of detail is amazing. The arriving and departing planes sport the correct airline livery, current weather conditions are shown, taxiing is shown in real time, and there’s even an audio feed from air traffic control.
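We don’t know what software sits behind Information Zulu’s stream, but the raw material is easy enough to poke at yourself. ADS-B reports are broadcast on 1090 MHz as 112-bit Mode S extended squitter messages (downlink format 17), and receiver software such as dump1090 can print them as raw hex strings. The Python sketch below is our own illustration, not anything Information Zulu has described: it pulls the ICAO address and callsign out of an aircraft identification message (type codes 1 to 4). It assumes a clean, already-demodulated hex string, skips the CRC parity check a real decoder would do, and uses a commonly cited sample message rather than live LAX traffic.

```python
# 6-bit character set used by ADS-B aircraft identification messages.
# '#' marks code points that are not assigned to any character.
CHARSET = "#" + "ABCDEFGHIJKLMNOPQRSTUVWXYZ" + "#" * 5 + " " + "#" * 15 + "0123456789" + "#" * 6


def decode_identification(msg_hex: str) -> dict:
    """Decode a 112-bit DF17 identification message (type codes 1-4).

    Sketch only: the 24-bit CRC parity field at the end is NOT verified.
    """
    bits = bin(int(msg_hex, 16))[2:].zfill(len(msg_hex) * 4)
    df = int(bits[0:5], 2)        # downlink format (17 = ADS-B extended squitter)
    icao = msg_hex[2:8].upper()   # 24-bit aircraft address, hex
    me = bits[32:88]              # 56-bit message (ME) field
    tc = int(me[0:5], 2)          # type code
    if df != 17 or not 1 <= tc <= 4:
        raise ValueError("not a DF17 aircraft identification message")
    # Eight 6-bit characters follow the type code and 3-bit emitter category.
    chars = [CHARSET[int(me[8 + 6 * i:14 + 6 * i], 2)] for i in range(8)]
    return {"df": df, "icao": icao, "tc": tc, "callsign": "".join(chars).strip()}


if __name__ == "__main__":
    print(decode_identification("8D4840D6202CC371C32CE0576098"))
    # {'df': 17, 'icao': '4840D6', 'tc': 4, 'callsign': 'KLM1023'}
```

Position, velocity, and other message types use different type codes and layouts, so a tracker that animates planes on a runway would need decoders for those too; this sketch rejects them with a `ValueError`.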

If you’re looking to regain a little of the productivity lost to the last two items, Digi-Key might be able to help with its new PCB Builder service. All you have to do is upload your Gerbers and select your materials, and it will give you options from a bunch of different quick-turn fabrication houses. Looks mighty convenient.

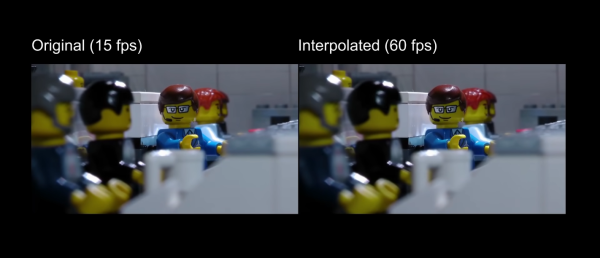

Steve Mould dropped a video this week about vibration analysis. That might not sound very exciting, but the fascinating bit is how companies are now using motion amplification video techniques to show how and where industrial equipment is moving, even when those motions are too subtle to be seen by the naked eye. It’s frankly terrifying to see how pipes flex, tanks expand and contract, and pumps and motors move relative to each other. The technique is similar to the way a person’s pulse can be detected in a video from the subtle color change as blood rushes into the capillaries. We’d love to see someone tackle a homebrew version of this so we can all see what’s going on around us.
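For anyone tempted by the homebrew route, here’s a minimal sketch of the pulse-style color trick in Python with NumPy. It follows the basic idea of the Eulerian video magnification work from MIT: treat each pixel’s brightness over time as a signal, band-pass it around the frequency of interest (about 1 Hz for a resting pulse), multiply that band by a gain, and add it back to the original. This is our own simplified illustration, not necessarily what the commercial tools in Mould’s video do. It uses an ideal FFT filter and skips the spatial pyramid the published method relies on, and the function name `magnify`, its parameters, and the synthetic test video are all made up for demonstration.

```python
import numpy as np


def magnify(frames: np.ndarray, fps: float, f_lo: float, f_hi: float, alpha: float) -> np.ndarray:
    """Amplify each pixel's temporal variations between f_lo and f_hi Hz by alpha.

    frames: grayscale video of shape (T, H, W) with intensities in [0, 1].
    Simplified sketch: no spatial pyramid, ideal (brick-wall) temporal filter.
    """
    n = frames.shape[0]
    spectrum = np.fft.rfft(frames, axis=0)         # per-pixel temporal spectrum
    freqs = np.fft.rfftfreq(n, d=1.0 / fps)        # frequency of each bin in Hz
    keep = (freqs >= f_lo) & (freqs <= f_hi)       # ideal band-pass mask
    spectrum[~keep] = 0                            # drop DC and out-of-band bins
    band = np.fft.irfft(spectrum, n=n, axis=0)     # band-passed per-pixel signal
    return np.clip(frames + alpha * band, 0.0, 1.0)


# Synthetic test: 10 s of 30 fps video, a faint 1 Hz flicker on the left half only.
t = np.arange(300) / 30.0
video = np.full((300, 8, 8), 0.5)
video[:, :, :4] += 0.002 * np.sin(2 * np.pi * 1.0 * t)[:, None, None]
out = magnify(video, fps=30.0, f_lo=0.8, f_hi=1.2, alpha=50.0)

before = video[:, 0, 0].max() - video[:, 0, 0].min()
after = out[:, 0, 0].max() - out[:, 0, 0].min()
print(f"flicker amplitude grew {after / before:.1f}x")  # roughly (1 + alpha)
```

Motion amplification of the kind shown on pumps and pipes leans on the same band-pass-and-boost idea, since tiny displacements show up as small brightness changes at edges, though the dedicated tools are considerably more sophisticated than this.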

And finally, we want to remind everyone that the Hackaday Prize is back, and that you should get your entries going. What’s new this year is the Dream Team challenges, where four worthy non-profit organizations will each assemble a three-person team to work on a specific pain point in their process. The application deadline has been extended to June 9, and each team member gets two $3,000 microgrants, one in June and one in July. So look through the design briefs and see if your skills match their needs.