If the idea of reading a physical book sounds like hard work, [Nick Bild’s] latest project, the PageParrot, might be for you. While AI gets a lot of flak these days, one thing modern multimodal models do exceptionally well is image interpretation, and PageParrot demonstrates just how accessible that’s become.

[Nick] demonstrates quite clearly how little code is needed to get from those cryptic black and white glyphs to sounds the average human can understand, specifically a paltry 80 lines of Python. Admittedly, many of those lines are pulling in libraries, and some are just blank, so functionally speaking, it’s even shorter than that. Of course, the whole application is mostly glue code, stitching together other people’s hard work, but it’s still instructive and fun to play with.

The hardware required is a Raspberry Pi Zero 2 W, a camera (in this case, a USB webcam), and something to hold it above the book. Any Pi with the ability to connect to a camera should also work, however, with just a little configuration.

On the software side, [Nick] pulls in the CV2 library (which is the interface to OpenCV) to handle the camera interfacing, programming it to full HD resolution. Google’s GenAI is used to interface the Gemini 2.5 Flash LLM via an API endpoint. This takes a captured image and a trivial prompt, and returns the whole page of text, quick as a flash.

Finally, the script hands that text over to Piper, which turns that into a speech file in WAV format. This can then be played to an audio device with a call out to the console aplay tool. It’s all very simple at this level of abstraction.

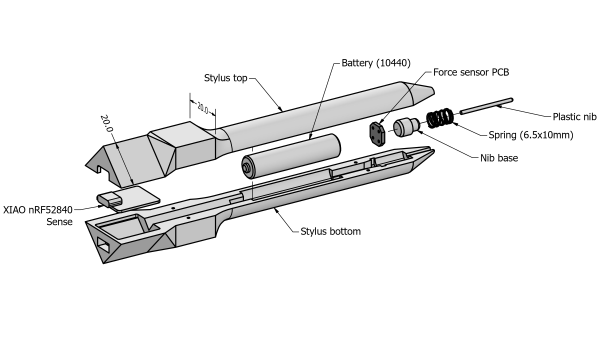

stylus. A consumer-grade (i.e. uncalibrated) webcam is all that is required on the hardware side. The software utilizes the familiar OpenCV stack to unroll the effects of the webcam

stylus. A consumer-grade (i.e. uncalibrated) webcam is all that is required on the hardware side. The software utilizes the familiar OpenCV stack to unroll the effects of the webcam