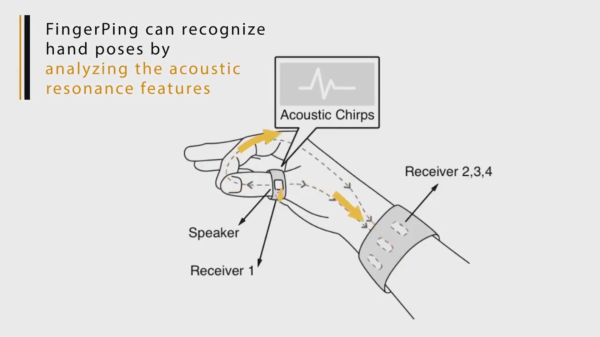

Sonar measures distance by emitting a sound and timing how long the sound takes to travel. This works in any medium capable of transmitting sound, such as water, air, or, in the case of FingerPing, flesh and bone. FingerPing is a project at Georgia Tech headed by [Cheng Zhang] which measures hand position by sending sound waves through the thumb and measuring the arrival time at four different receivers. These readings indicate which bones the sound traveled through, which lets the device figure out where the thumb is touching. The hand positions it can tell apart include the American Sign Language numbers one through ten.
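To make the timing idea concrete, here is a minimal sketch of classic echo ranging, where the sound travels to a reflector and back. It is not FingerPing's code: the function name `echo_distance` is made up for illustration, and the speeds of sound are approximate textbook values that shift with temperature and other conditions.

```python
# Approximate speeds of sound in metres per second (assumed textbook values;
# real figures vary with temperature, salinity, and so on).
SPEED_OF_SOUND = {"air": 343.0, "water": 1480.0}


def echo_distance(round_trip_seconds, medium="air"):
    """Estimate the distance to a reflector from a round-trip echo time.

    The sound covers the distance twice (out and back), so the
    one-way distance is half of speed * time.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_SOUND[medium] * round_trip_seconds / 2.0


if __name__ == "__main__":
    # A 10 ms echo in air corresponds to roughly 1.7 m.
    print(f"{echo_distance(0.010, 'air'):.2f} m in air")
    # The same delay in water corresponds to a much longer range.
    print(f"{echo_distance(0.010, 'water'):.2f} m in water")
```

The same delay maps to very different distances depending on the medium, which is why a system like this has to account for what the sound is actually traveling through.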

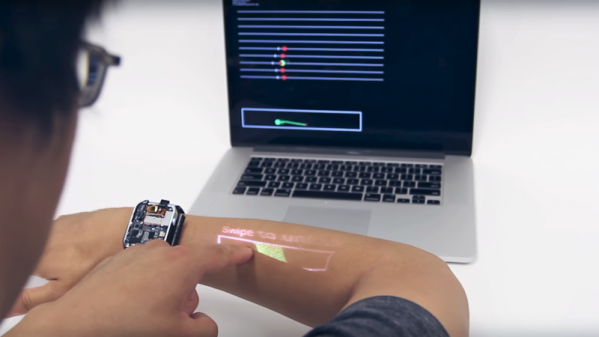

From the perspective of discreetly entering the numbers one through ten on a mobile device, this opens up a lot of possibilities for computer input while remaining pretty unobtrusive. We have seen prototypes that are more capable of reading gestures, but they also draw attention if you wear them on a bus. It is a classic trade-off between convenience and function, but this type of reading is unique and could be combined with other biosignals for finer results.