Gesture control is a technology that has floated around for quite a while, but has never quite reached mainstream acceptance. Wii Bowling was fun for a while, but we’re not regularly using gestures to open doors or order pizza just yet. Doing it yourself can be quite easy, however, as [RC Lover san] found with a barebones, hacky build.

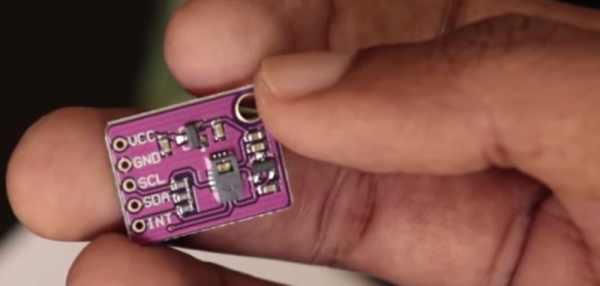

Typically, when we think of gesture control, we envisage object-tracking cameras or MEMS accelerometers. Instead, this build uses simple tilt switches, as you might find in a pinball machine from days of yore. Four of these are placed on a wrist-mounted device, allowing the user to tilt their arm to move an RC car in different directions. The tilt switches are easy to hack into the controller for a toy RC car, as they simply replace the existing buttons on the PCB.
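The build itself is purely electrical, with no code involved, but the logic the four switches implement is easy to model. The sketch below is only an illustration of that mapping, assuming one switch per direction and assuming that opposing switches cancel each other out; the names `TiltState` and `car_command` are hypothetical and do not come from the original project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TiltState:
    """Closed/open state of four tilt switches (assumed: one per direction)."""
    forward: bool = False
    back: bool = False
    left: bool = False
    right: bool = False


def car_command(tilt: TiltState) -> str:
    """Map switch states to the controller button(s) they would stand in for."""
    # Assumption: if two opposing switches close at once, neither wins.
    drive = ""
    if tilt.forward and not tilt.back:
        drive = "forward"
    elif tilt.back and not tilt.forward:
        drive = "reverse"

    steer = ""
    if tilt.left and not tilt.right:
        steer = "left"
    elif tilt.right and not tilt.left:
        steer = "right"

    parts = [p for p in (drive, steer) if p]
    return "+".join(parts) if parts else "stop"


if __name__ == "__main__":
    # Arm tipped forward and rolled to the left.
    print(car_command(TiltState(forward=True, left=True)))
```

If you wanted to do this with a microcontroller rather than wiring the switches straight across the button pads, the same function could sit between the input pins and the outputs driving the controller.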

It’s a project that goes to show that not everything has to be done with advanced sensors and complex algorithms. Sometimes, it can all be done with a handful of cheap switches and some ingenuity. Plus, using arm movements to scoot BB-8 around on the floor looks like great fun. We’ve seen other attempts to build simple gesture controls with pots, too. Video after the break.

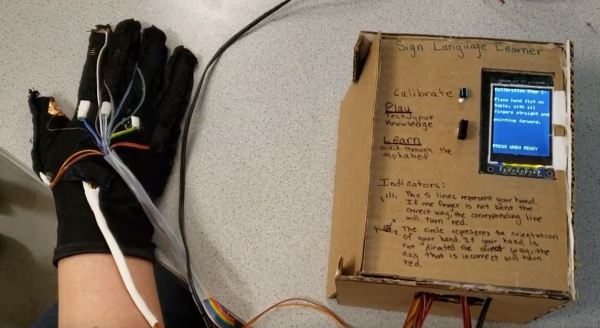

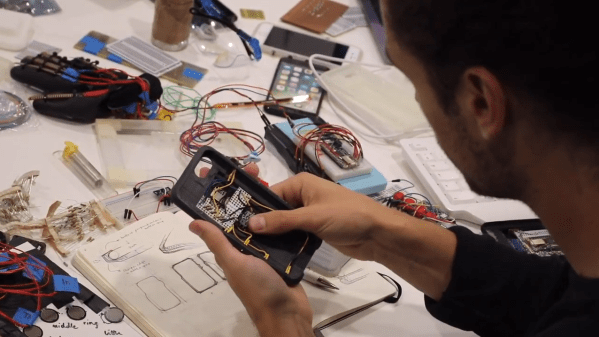

