If you enjoy watching skilled assembly of small mechanical systems with electronics to match, then make some time to watch [Max Imagination] transform a Hot Wheels car into a 1/64th scale RC car complete with an FPV video feed. To say the project took careful planning and assembly would be an understatement, and the results look great.

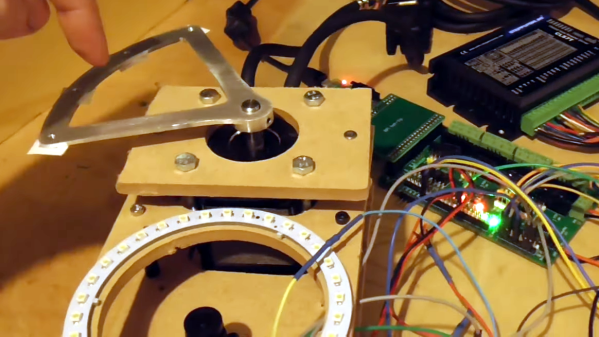

The sort of affordable electronics available to hobbyists today opens up all kinds of possibilities, but connecting up various integrated modules brings its own challenges. This is especially true when there are physical constraints such as fitting everything into an off-the-shelf 1/64 scale toy car.

There are a lot of interesting build details that [Max] showcases, such as rebuilding a tiny DC motor to have a longer shaft so that it can drive both wheels at once. We also liked the use of 0.2 mm thick nickel strips (intended for connecting cells in a battery pack) as compliant structural components.

There are actually two web servers being run on the car. One provides an interface for throttle and steering (here’s the code it uses), and the other takes care of the video feed, with an ESP32-CAM sending a Motion JPEG stream. [Max]’s mobile phone is used to control the car, and a second device goes into an old phone-based VR headset to display the FPV video feed.
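For anyone curious what a Motion JPEG stream looks like on the wire, here is a minimal Python sketch of the usual HTTP framing: one response with a `multipart/x-mixed-replace` content type, followed by a series of parts that each carry one complete JPEG. This is not [Max]'s firmware, which we haven't reproduced here. The boundary token `frame` and the function names `stream_headers` and `frame_part` are our own assumptions for illustration.

```python
# Sketch of Motion JPEG framing over HTTP. Illustrative only, not the project's code.
BOUNDARY = "frame"  # hypothetical boundary token; any string not found in the data works


def stream_headers(boundary: str = BOUNDARY) -> bytes:
    """Build the response header that is sent once, before the first frame."""
    return (
        "HTTP/1.1 200 OK\r\n"
        f"Content-Type: multipart/x-mixed-replace; boundary={boundary}\r\n"
        "\r\n"
    ).encode("ascii")


def frame_part(jpeg: bytes, boundary: str = BOUNDARY) -> bytes:
    """Wrap one complete JPEG image as a single part of the stream."""
    head = (
        f"--{boundary}\r\n"
        "Content-Type: image/jpeg\r\n"
        f"Content-Length: {len(jpeg)}\r\n"
        "\r\n"
    ).encode("ascii")
    return head + jpeg + b"\r\n"


if __name__ == "__main__":
    # Placeholder bytes standing in for a camera frame; not a valid image.
    fake_jpeg = b"\xff\xd8" + b"\x00" * 4 + b"\xff\xd9"
    print(stream_headers() + frame_part(fake_jpeg))
```

A server would send `stream_headers()` once and then keep writing `frame_part(...)` for each new camera frame, and the viewer replaces the displayed image as each part arrives. That simplicity is a big part of why the format suits tiny camera modules.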

Circuit diagrams and code are available for anyone who wants to make a similar project. We’ve seen micro RC builds of high quality before, but integrating an FPV camera kicks things up a notch. Want even more complex builds? All the rules change when weight reduction is a non-negotiable #1 priority. Check out a micro RC plane that weighs under three grams and get a few new ideas.
