Most Hackaday readers will have heard of [Clive Sinclair], the British inventor and serial entrepreneur whose name appeared on some of the most fondly recalled 8-bit home computers. If you aren’t either a Sinclair enthusiast or a Brit of a Certain Age though, you may not be aware that he also dabbled for a while in the world of electric vehicles. In early 1985 he launched the C5, a sleek three-wheeler designed to take advantage of new laws governing electrically assisted bicycles.

The C5 was a commercial failure because it placed the rider in a vulnerable position almost at road level, but in the decades since its launch it has become something of a cult item. [Rob] fell for the C5 when he had a ride in one belonging to a friend, and decided he had to have one of his own. The story of his upgrading it, and of the mishaps that befell it along the way, is the subject of his most recent blog post, and it’s not a tale that’s over by any means.

The C5 was flawed in more than its riding position. The trademark Sinclair economy in manufacture manifested itself in a minimalist motor drive to one rear wheel only, and a front wheel braking system that saw bicycle calipers unleashed on a plastic wheel rim. The latter was sorted with an upgrade to a disc brake, but the former required a bit more work. A first-generation motor and gearbox had an unusual plywood housing, and the C5 even made it peripherally into our review of EMF Camp 2016, but it didn’t quite have the power to start the machine without pedaling. Something with more grunt was called for, and it came in the form of a better gearbox which, once fitted, allowed the machine to power its way to the Tindie Cambridge meetup back in April. Your scribe had a ride, but all was not well. After a hard manual pedal back across Cambridge to the Makespace it was revealed that the much-vaunted Lotus chassis had lived up to the Sinclair reputation for under-engineering, and bent. Repairs are under way for the upcoming EMF Camp 2018, where we hope we’ll even see it entering the Hacky Racers competition.

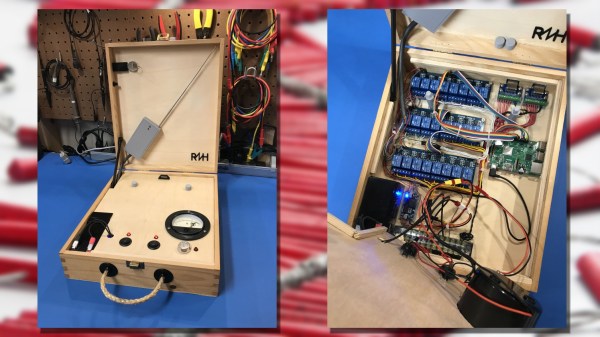

[netmagi] claims his yearly display is a modest affair, but this controller can address 24 channels, which would be a pretty big show in any neighborhood. Living inside an old wine box is a Raspberry Pi 3B+ and three 8-channel relay boards. Half of the relays are connected directly to breakouts on the end of a long wire that connect to the electric matches used to trigger the fireworks, while the rest of the contacts are connected to a wireless controller. The front panel sports a key switch for safety and a retro analog meter for keeping tabs on the sealed lead-acid battery that powers everything. [netmagi] even set the Pi up with WiFi so he can trigger the show from his phone, letting him watch the wonder unfold overhead. A few test shots are shown in the video below.
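To make the channel layout a little more concrete, here is a minimal sketch of how 24 channels might map onto three 8-channel relay boards, with half wired and half wireless. It is not [netmagi]'s code. The names `Channel`, `describe_channel`, and `FiringController` are hypothetical, the assumption that channels 1 to 12 are the wired half is ours, and the `armed` flag only stands in for the key switch. No real GPIO library is used; the `pulse` callback is a placeholder for whatever actually drives the relays.

```python
from dataclasses import dataclass
from typing import Callable

BOARDS = 3                                   # three relay boards (from the article)
RELAYS_PER_BOARD = 8                         # 8 channels per board (from the article)
TOTAL_CHANNELS = BOARDS * RELAYS_PER_BOARD   # 24 channels in total


@dataclass(frozen=True)
class Channel:
    number: int  # 1-based channel number
    board: int   # 0-based relay board index
    relay: int   # 0-based relay index on that board
    link: str    # "wired" or "wireless"


def describe_channel(number: int) -> Channel:
    """Map a 1-based channel number to a board, a relay, and a link type."""
    if not 1 <= number <= TOTAL_CHANNELS:
        raise ValueError(f"channel must be 1..{TOTAL_CHANNELS}, got {number}")
    board, relay = divmod(number - 1, RELAYS_PER_BOARD)
    # ASSUMPTION: the first half of the channels is the wired half.
    link = "wired" if number <= TOTAL_CHANNELS // 2 else "wireless"
    return Channel(number, board, relay, link)


class FiringController:
    """Refuses to fire unless armed, and fires each channel at most once."""

    def __init__(self, pulse: Callable[[Channel], None]) -> None:
        self.armed = False        # stand-in for the front-panel key switch
        self.fired: set[int] = set()
        self._pulse = pulse       # hypothetical hook that would close the relay

    def fire(self, number: int) -> bool:
        if not self.armed:
            raise RuntimeError("controller is not armed")
        channel = describe_channel(number)
        if number in self.fired:
            return False          # an electric match is single-use
        self._pulse(channel)
        self.fired.add(number)
        return True
```

In this sketch a caller would build `FiringController(pulse=some_function)`, set `armed = True` once the key is turned, and call `fire(n)` for each cue. Keeping the relay driver behind a callback means the mapping and interlock logic can be exercised on a desk with nothing connected.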


In this age of
