Aquariums are amazingly beautiful displays of vibrant ocean life, or at least they can be. For a lot of people, aquariums become a frustrating chemistry battle to keep the ecosystem healthy and avoid a scummy cesspool where no fish want to be.

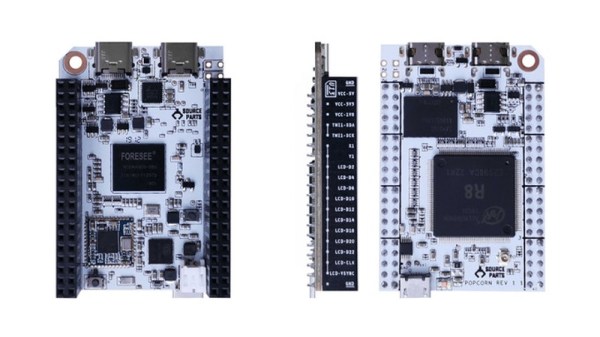

This hack sidesteps that problem, pulling off some of the most beautiful parts of a living aquarium, while keeping your gaming rig running nice and cool. That’s right, this tank is a cold mineral oil dip for a custom PC build.

It’s the second iteration [Frank Zhao] has built, with many improvements along the way. The first aquarium computer was shoehorned into a very tiny aquarium (think the kind for betta fish). It eventually developed a small crack that spread into a bigger one, leaving a lot of mineral oil to clean up. Yuck. The new machine has a much larger tank and laser-cut parts, a step up from the hand-cut acrylic of the first version. This makes for a very nice top bezel that hangs the PC guts and provides unobtrusive input and output ports for the oil circulation. A radiator unit hidden out of sight cools the oil as it circulates through the system.

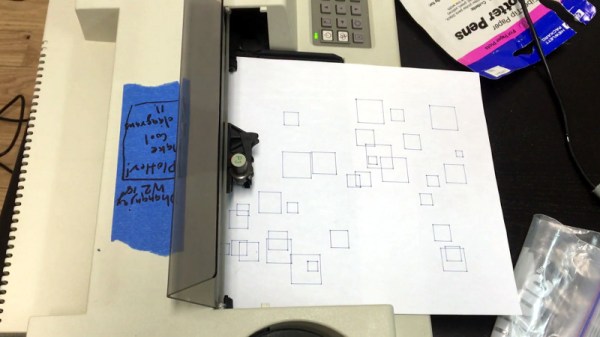

These are all nice improvements, but it’s the aesthetic of the tank itself that really makes this one special. The first version was so cramped that a couple of sad plastic plants were the only decoration. But now the tank has the whole package, with coral, more realistic plants, a sunken submarine, and of course the treasure chest bubbler. Well done [Frank]!