There are a lot of cliches about the perils of boat ownership. “The best two days of a boat owner’s life are the day they buy their boat, and the day they sell it” immediately springs to mind, for example. But there is a way around the otherwise bottomless pit of boat ownership: building a small robotic speedboat instead of owning the full-size version. Not only will you save loads of money and frustration, but you can also use your 3D-printed boat as a base for educational and research projects.

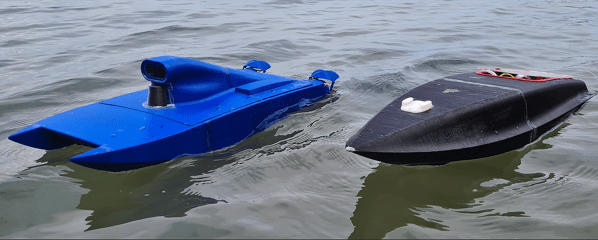

The autonomous speedboats have a modular hull design to make them easy to 3D print, and they use a waterjet for propulsion, which improves their reliability in shallow water and reduces the likelihood that they will get tangled on anything or injure an animal or human. The platform is designed to house a wide array of sensors, so that people can easily automate tasks in bodies of water such as pollution monitoring, search-and-rescue, and various inspections. A monohull version with a single jet was prototyped first, but a twin-hulled catamaran with two jets followed, which improved the stability and reliability of the platform.

All of the files needed to get started with your own autonomous (or remote-controlled) speedboat are available on the project’s page. The creators hope the platform will suit a wide variety of needs and that a community of technology enthusiasts, engineers, and researchers working on autonomous marine robotic platforms will form around it. If you’d prefer to ditch the motor, though, we have seen a few autonomous sailboats used for research purposes as well.
