Those little pocket TVs were quite the cool gadget back in the ’80s and ’90s, but today they’re pretty much useless, at least for their intended purpose of watching analog television. (If someone is out there making tiny digital-to-analog converter boxes for these things, please let us know.)

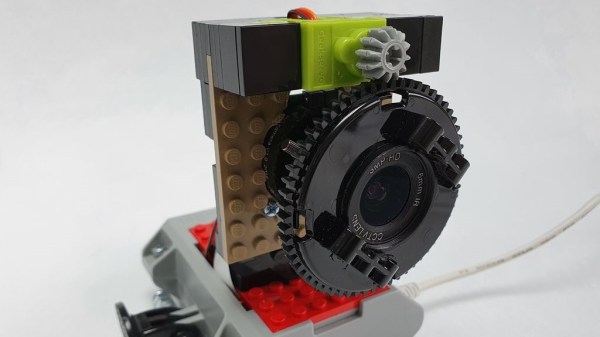

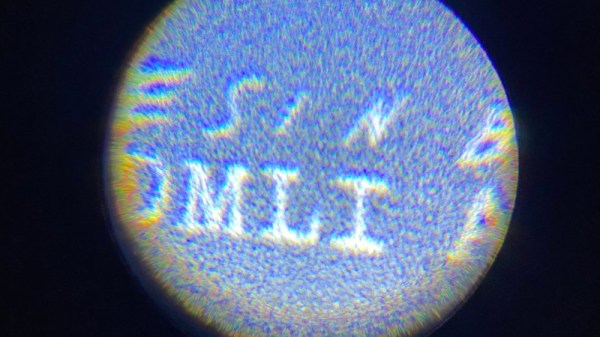

Now that analog pocket TVs are obsolete, they’re finally affordable enough for hacking into a useful tool like an inspection camera. [technichenews] found a nice Casio TV and a suitable analog pinhole camera that also does IR. Since the camera has RCA plugs and the TV’s video input is some long-gone proprietary 3.5mm cable, [technichenews] made a new video-only cable by soldering the yellow RCA wires up to the cable from an old pair of headphones. Power for the camera comes from a universal wall wart set to 12V.

Our favorite part of this project is the way that [technichenews] turned what is arguably the most useless part of the TV, the antenna, into the star of the build. Their plan is to use the camera to peer into small engines, so mounting it on the end of the antenna makes it a telescoping, ball-jointed, all-seeing eye. You can inspect the build video after the break.

Need a faster, easier way to take a closer look without breaking the bank? We hear those slim earwax-inspection cameras are pretty good.
