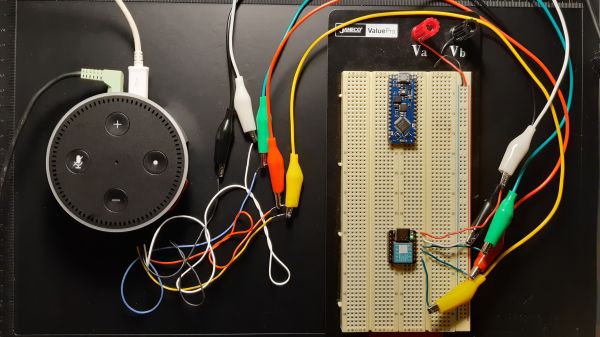

Voice-controlled home assistants are the wonder of our age, once you’ve made peace with the privacy concerns of sharing the intimacies of your life with a data centre owned by a massive corporation, anyway. They offer a taste of the future promised by the optimistic predictions of decades past: Alexa and Siri can crack jokes, control your lights, answer questions, read you the news, and much more.

But for all their conversational perfection, your electronic pals can’t satisfy your most fundamental need and bring you a beer. This is something [luisengineering] has fixed, and he’s provided the appropriate answer to the command “Alexa, bring mir ein Bier!” (“Alexa, bring me a beer!”). The video, which we’ve also placed below the break, is in German, with YouTube’s automatic closed captions available if you want them. We think you’ll get the point of it, if not all of his jokes, without needing to learn any Deutsch.

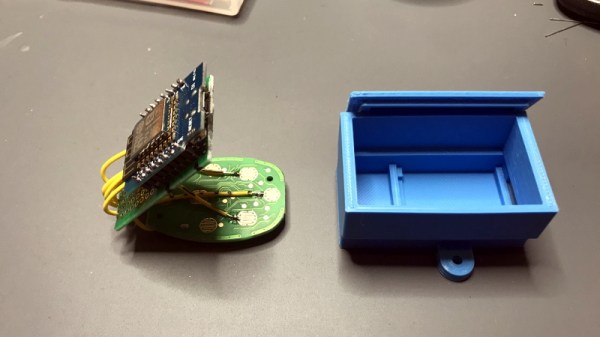

As he develops his beer-delivery system, we begin to appreciate that what might seem a relatively straightforward task is anything but. He takes an off-the-shelf robot and gives it a beer-bottle grabber and an ice hopper, but the path from fridge to sofa still needs some work. The eventual solution involves a lot of trial and error, plus a black line on the floor for the ’bot to follow. Finally, his electronic friend can bring him a beer!

We like [Luis]’s entertaining presentation style and his use of props as microphone stands. We’ll be keeping an eye out for what he does next, and you should too. Meanwhile, it may not surprise you to learn that this is not the first beer-delivery ’bot we’ve brought you.