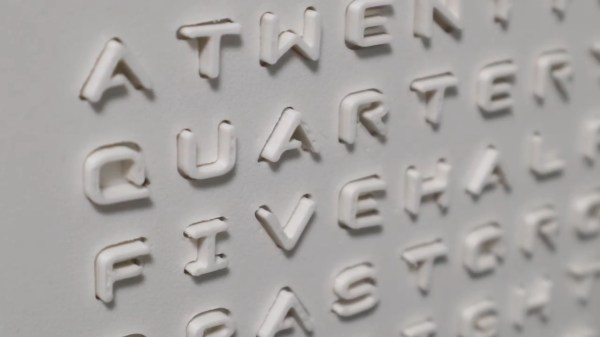

By this point, pretty much everyone has come across a word clock project, if not built one themselves. There’s just an appeal to looking at a clock and seeing the time in a more human form than mere digits on a face. But there are senses beyond sight. Have you ever heard a word clock? Have you ever felt a word clock? Hackaday’s own [Moritz Sivers] can now answer yes to both, because he’s climbed the steep learning curve involved in designing and building a haptic word clock driven by the power of magnets.

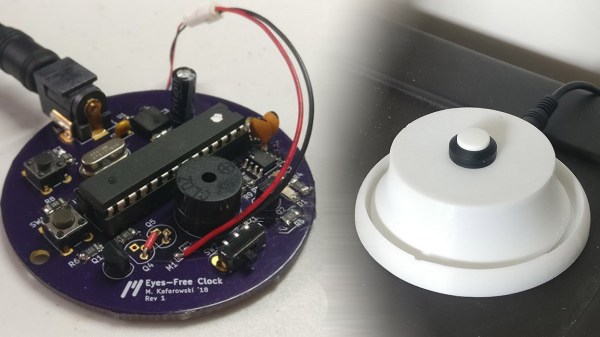

Individual letters of the display are actuated by a matrix of magnetic coils on custom PCBs. These work in a fashion vaguely similar to LED matrices, except that instead of generating light, they generate magnetic fields that can push or pull on a magnet. That brings its own set of challenges: from coil design, to handling the increased power consumption, to considering how each coil interacts with its neighbors. Inspired by research on other haptic displays, [Moritz] used ferrous foil to make the magnets latch into place. This way, each letter stays in its forward or back position without the coil needing power to hold it there. Plus, the letter remains more stable while nearby coils are activated.
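To make the “like an LED matrix, but latching” idea concrete, here’s a minimal Python model. It is a sketch under our own assumptions, not [Moritz]’s firmware: each letter is bistable, a short pulse of one polarity pushes its magnet forward and the opposite polarity pulls it back, and the latch holds the letter once the pulse ends. The `Pulse`, `plan_pulses`, and `apply_pulses` names are hypothetical. Because latched letters need no holding current, the plan only fires coils whose letters actually have to change, one at a time.

```python
"""Hypothetical model of a bistable (latching) coil matrix.

Assumptions, not taken from the project: each letter is either forward
(True) or back (False), a +1 pulse pushes the magnet forward, a -1 pulse
pulls it back, and the ferrous foil holds the letter once the pulse ends.
"""
from dataclasses import dataclass
from typing import List

Grid = List[List[bool]]  # True = letter pushed forward, False = letter back


@dataclass(frozen=True)
class Pulse:
    row: int
    col: int
    polarity: int  # +1 pushes the magnet forward, -1 pulls it back


def plan_pulses(current: Grid, target: Grid) -> List[Pulse]:
    """Return one pulse per letter whose state must change, scanning row by row.

    Letters already in their target position are skipped: since they latch,
    they need neither holding current nor refreshing. Firing one coil at a
    time keeps peak current low and limits the stray field any single pulse
    puts on neighboring letters.
    """
    if len(current) != len(target) or any(
        len(a) != len(b) for a, b in zip(current, target)
    ):
        raise ValueError("grids must have the same shape")
    pulses = []
    for r, (cur_row, tgt_row) in enumerate(zip(current, target)):
        for c, (cur, tgt) in enumerate(zip(cur_row, tgt_row)):
            if cur != tgt:
                pulses.append(Pulse(r, c, +1 if tgt else -1))
    return pulses


def apply_pulses(grid: Grid, pulses: List[Pulse]) -> Grid:
    """Simulate latching: each pulse sets its cell, and the state persists."""
    new = [row[:] for row in grid]  # copy so the input grid is left untouched
    for p in pulses:
        new[p.row][p.col] = p.polarity > 0
    return new


if __name__ == "__main__":
    before = [[False, True], [False, False]]
    after = [[True, True], [False, False]]
    plan = plan_pulses(before, after)
    print(plan)  # only the top-left letter needs a forward pulse
    print(apply_pulses(before, plan) == after)
```

Scanning one coil at a time trades update speed for lower peak current and less crosstalk; a real driver might well fire several well-separated coils at once.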

Part of the fun of “ubiquitous” projects like word clocks is seeing how creative hackers can get to make their own creations stand out. Whether it’s a miniaturized version of classic designs or something simple and clean, we love to see them all. Unsurprisingly, [Moritz] himself has impressed us with his unique take on word clocks in the past. (Editor’s note: that’s nothing compared to his cloud chambers!)

Check out the video below to see this display’s actuation in action. We’re absolutely in love with the satisfying *click* the magnets make as they latch into place.