Step sequencers are fantastic instruments, but they can be a little, well, repetitive. At it’s core, the step sequencer is a pretty simple device: it loops through a series of notes or phrases that are, well, sequentially ordered into steps. The operator can change the steps while the sequencer is looping, but it generally has a repetitive feel, as the musician isn’t likely to erase all of the steps and enter in an entirely new set between phrases.

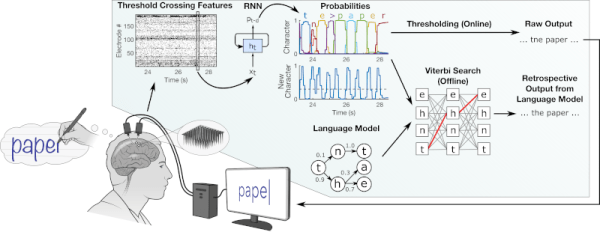

Enter our old friend machine learning. If we introduce a certain variability on each step of the loop, the instrument can help the musician out a bit here, making the final product a bit more interesting. Such an instrument is exactly what [Charis Cat] set out to make when she created the After Eight Step Sequencer.

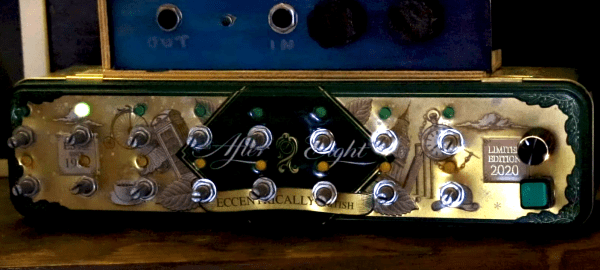

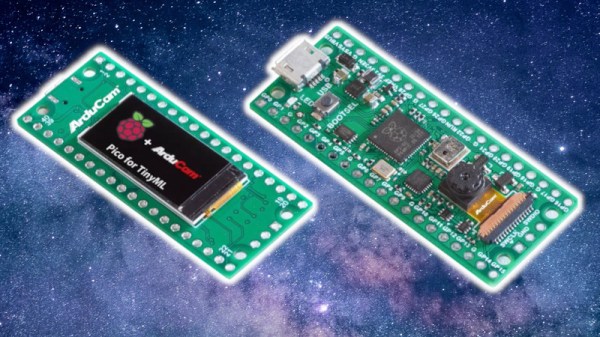

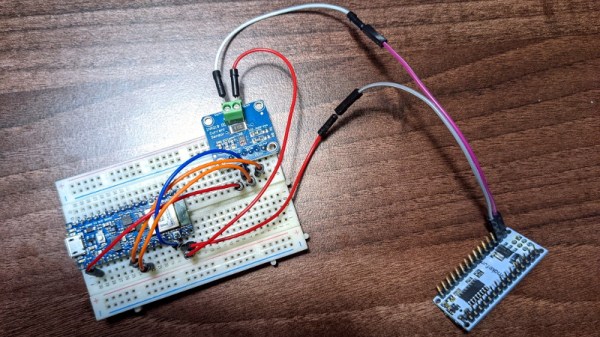

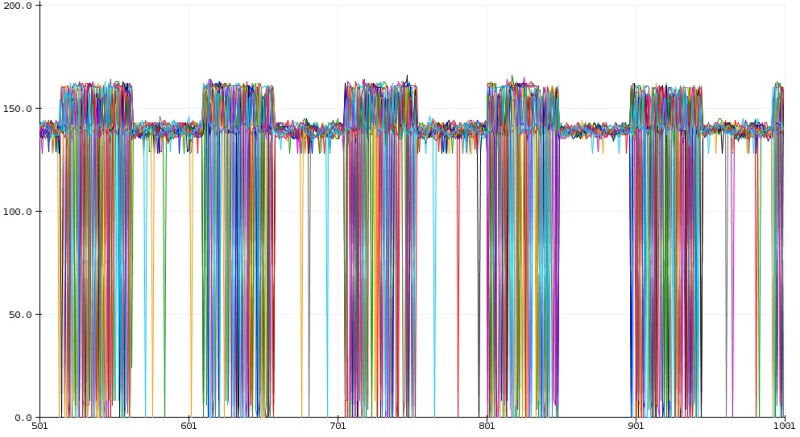

The After Eight is an eight-step sequencer that allows the artist to set each note with a series of potentiometers (which are, of course, housed in an After Eight mint tin). The potentiometers are read by an Arduino, which passes MIDI information to a computer running the popular music-oriented visual programming language Max MSP. The software uses a series of Markov Chains to augment the musician’s inputted series of notes, effectively working with the artist to create music. The result is a fantastic piece of music that’s different every time it’s performed. Make sure to check out the video at the end for a fantastic overview of the project (and to hear the After Eight in action, of course)!

[Charis Cat]’s wonderful creation reminds us of some the work [Sara Adkins] has done, blending human performance with complex algorithms. It’s exactly the kind of thing we love to see at Hackaday- the fusion of a musician’s artistic intent with the stochastic unpredictability of a machine learning system to produce something unique.

Thanks to [Chris] for the tip!

Continue reading “Making Minty Fresh Music With Markov Chains: The After Eight Step Sequencer”