Looking through past hacks is a great source of inspiration. This week, we saw [Russ Maschmeyer] revisiting a classic hack by [Johnny Lee] that used a Wiimote’s IR camera to fake 3D, or at least to produce a parallax effect convincing enough to fool your brain, without any expensive custom hardware.

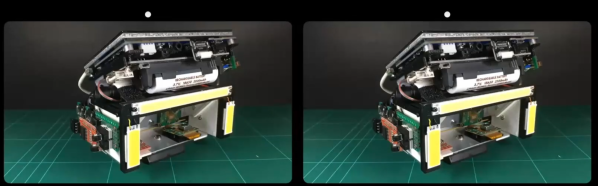

[Lee]’s original demo was stunning, and that alone is reason to revisit it. Using the Wiimote as the camera was an inspired choice back in 2007, because it meant there was no hard computer vision work to be done to estimate the viewer’s position: the camera only sees IR LEDs anyway. The tradeoff was that you had to wear two IR LEDs on your head and calibrate everything just right, and only the person wearing the headset got the full illusion.
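For the curious, here is roughly how two IR dots can become a head position. This is a minimal sketch, not [Lee]’s actual code: it assumes a pinhole camera with a linear pixel-to-angle mapping, a horizontal field of view of about 45 degrees across a 1024×768 image, and LEDs mounted 20 cm apart. All of those numbers, and the function name `head_position`, are illustrative assumptions.

```python
import math


def head_position(dot1, dot2, led_spacing_m=0.2,
                  image_w_px=1024, image_h_px=768,
                  hfov_rad=math.radians(45)):
    """Estimate head (x, y, z) in metres, in camera coordinates, from two IR dots.

    All default parameters are assumed values for illustration, not measured
    Wiimote specifications.
    """
    # Assumed linear mapping from pixels to angle (square pixels).
    rad_per_px = hfov_rad / image_w_px

    # Angle subtended by the two LEDs, from their separation in the image.
    pixel_sep = math.hypot(dot2[0] - dot1[0], dot2[1] - dot1[1])
    if pixel_sep == 0:
        raise ValueError("dots coincide; cannot estimate distance")
    angle = pixel_sep * rad_per_px

    # LEDs of known spacing subtending `angle` give the distance to the head.
    z = (led_spacing_m / 2) / math.tan(angle / 2)

    # Offset of the dots' midpoint from the image centre gives the direction.
    # Image y usually grows downward, and a camera facing the viewer mirrors x,
    # so sign flips may be needed for a particular setup.
    mx = (dot1[0] + dot2[0]) / 2 - image_w_px / 2
    my = (dot1[1] + dot2[1]) / 2 - image_h_px / 2
    x = z * math.tan(mx * rad_per_px)
    y = z * math.tan(my * rad_per_px)
    return x, y, z
```

Dots that sit farther apart in the image mean the head is closer, which is the whole trick: the hardware does the hard part, and what remains is trigonometry.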

This is why revisiting the past can be fruitful. As [Russ] discovered, computing power is so plentiful these days that you can estimate face and eye position with an ordinary webcam more easily than you could source an old Wiimote. In fact, his tracking is so accurate that he’s now worrying about which eye to project the illusion for. Clearly, it’s time for a revamp.
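Whichever tracker supplies the eye position, the parallax effect itself typically comes from rendering with an off-axis (asymmetric) view frustum whose apex sits at the viewer’s eye, so the screen behaves like a window. The sketch below computes frustum bounds in the form accepted by OpenGL’s `glFrustum`. It assumes the screen is centred at the origin in the z = 0 plane, the eye position uses the same metric units with z as the distance from the screen, and the tracking camera sits at the screen’s centre. The names `Frustum` and `off_axis_frustum` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    # Bounds in the order glFrustum expects: left, right, bottom, top, near, far.
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye, screen_w, screen_h, near=0.05, far=100.0):
    """Asymmetric frustum for an eye at (ex, ey, ez) looking at a screen
    of size screen_w x screen_h centred at the origin (assumed layout)."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (ez > 0)")
    # Similar triangles: project the screen edges, measured relative to the
    # eye, onto the near plane.
    scale = near / ez
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
        far=far,
    )
```

With the eye centred, the frustum is symmetric; as the head moves right, the frustum skews left and nearby objects appear to shift against the background. The view transform must also translate the scene by the negative eye position, which this sketch leaves out.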

So here’s the formula: find a brilliant old hack, and ask whether it was held back by the technology available when it was built. Update it with modern conveniences, and voilà! You might just find that you can take the idea further, simply because you have more tools in your toolbox. Nothing wrong with standing on the shoulders of giants.

But beware! Time isn’t sitting still for you either. As soon as you make your killer 3D vision hack, VR goggles will become cheap and ubiquitous. So get it done today, before your hack becomes inspiration for the future.