Have you ever thought about getting into digital modes on the ham bands? As it turns out, you can get involved using the affordable and popular Quansheng UV-K6 — if you’re game to modify it, that is. It’s perfectly achievable using the custom Mobilinkd firmware, the brainchild of one [Rob Riggs].

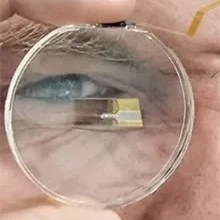

In order to efficiently transmit digital modes, it’s necessary to make some hardware changes as well. Low frequencies must be allowed to pass in through the MIC input, and to pass out through the audio output. These are normally filtered out for efficient transmission of speech, but the filters mess up digital transmissions something fierce. Getting rid of that filtering means messing about with some capacitors and bodge wires. Then, one can flash the firmware using a programming cable.
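To get a feel for why speech-style filtering hurts, here is a rough Python sketch that pushes a held symbol level through a simple first-order high-pass filter. It is an illustration only, not a model of the radio's actual audio path: the 300 Hz and 5 Hz cutoffs, the 48 kHz sample rate, and the 16-symbol run are all assumed values, and the 4800 symbols per second figure is taken from M17's published symbol rate rather than from anything measured on the UV-K6.

```python
import math

SAMPLE_RATE_HZ = 48_000   # assumed simulation sample rate
SYMBOL_RATE = 4_800       # M17's symbol rate; an assumption, not from the article
SAMPLES_PER_SYMBOL = SAMPLE_RATE_HZ // SYMBOL_RATE


def high_pass(samples, cutoff_hz, sample_rate_hz):
    """First-order RC high-pass filter, discrete-time approximation."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate_hz
    alpha = rc / (rc + dt)
    out = []
    prev_in = 0.0
    prev_out = 0.0
    for x in samples:
        # Passes changes in the input, lets steady levels decay toward zero.
        y = alpha * (prev_out + x - prev_in)
        out.append(y)
        prev_in, prev_out = x, y
    return out


def held_level_after(run_length_symbols, cutoff_hz):
    """Level left at the end of a run of identical symbols (input held at 1.0)."""
    samples = [1.0] * (run_length_symbols * SAMPLES_PER_SYMBOL)
    return high_pass(samples, cutoff_hz, SAMPLE_RATE_HZ)[-1]


# Hypothetical cutoffs: a speech-style filter versus a nearly DC-coupled path.
speech_path = held_level_after(16, 300.0)
wide_path = held_level_after(16, 5.0)

print(f"300 Hz high-pass: {speech_path:.3f} of the original level remains")
print(f"  5 Hz high-pass: {wide_path:.3f} of the original level remains")
```

Under these assumptions the speech-style filter lets the held level sag almost to zero, while the near-DC path keeps most of it. A multi-level mode like 4-FSK depends on the receiver telling those levels apart, so that kind of droop is bad news.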

With the mods achieved, the UV-K6 can be used for transmitting in various digital modes, like M17 4-FSK. The firmware has several benefits, not least of which is cutting turnaround time. This is the time the radio takes to switch between transmitting and receiving, and slashing it is a big boost for achieving efficient digital communication. While the stock firmware has an excruciatingly slow turnaround of 378 ms, the Mobilinkd firmware takes just 79 ms.
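To put those turnaround numbers in perspective, here is a quick back-of-the-envelope sketch in Python. The 378 ms and 79 ms figures come from the measurements quoted above; the 200 ms packet airtime is a made-up value purely for illustration, as is the simple model in which every packet is followed by one turnaround.

```python
STOCK_TURNAROUND_MS = 378      # stock firmware, as quoted above
MOBILINKD_TURNAROUND_MS = 79   # Mobilinkd firmware, as quoted above

saved_ms = STOCK_TURNAROUND_MS - MOBILINKD_TURNAROUND_MS
speedup = STOCK_TURNAROUND_MS / MOBILINKD_TURNAROUND_MS
reduction_pct = 100.0 * saved_ms / STOCK_TURNAROUND_MS


def channel_efficiency(airtime_ms, turnaround_ms):
    """Fraction of time spent sending data when each packet costs one turnaround."""
    return airtime_ms / (airtime_ms + turnaround_ms)


# Hypothetical packet length, chosen only to illustrate the effect.
PACKET_AIRTIME_MS = 200

stock_eff = channel_efficiency(PACKET_AIRTIME_MS, STOCK_TURNAROUND_MS)
mobilinkd_eff = channel_efficiency(PACKET_AIRTIME_MS, MOBILINKD_TURNAROUND_MS)

print(f"Saved per turnaround: {saved_ms} ms ({reduction_pct:.1f}% less, {speedup:.2f}x faster)")
print(f"Efficiency with {PACKET_AIRTIME_MS} ms packets: "
      f"stock {stock_eff:.0%}, Mobilinkd {mobilinkd_eff:.0%}")
```

The shorter the packets, the more the turnaround dominates, which is why this matters so much for chatty packet-style exchanges.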

Further gains may be possible in future, too. Bypassing the audio amplifier could be particularly fruitful, as it’s largely in the way of the digital signal stream.

Quansheng’s radios are popular targets for modification, and are well documented at this point.