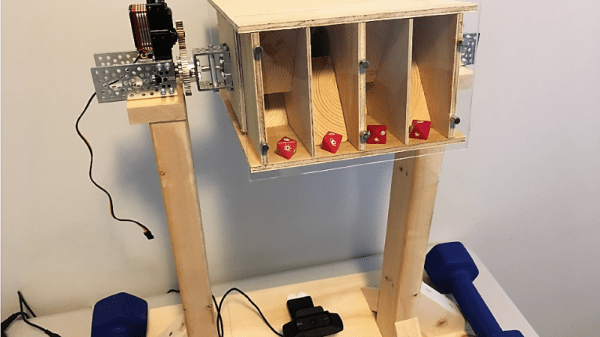

Forgetting things happens to all of us. Young or old, we know what’s on our to-do list, but in the heat of the moment a task slips our mind and we miss it. There are myriad technological answers to this in the form of reminders and calendars, but [Nick Bild] has come up with possibly the most inventive yet. His Newrons project is a pair of glasses fitted with a machine vision camera, which flash a light when the camera detects an object in its field of view that is associated with a calendar entry.

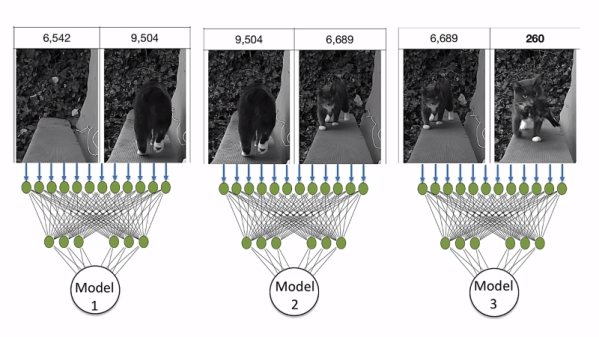

At its heart is a JeVois A33 Smart Machine Vision Camera, which runs a neural network trained on an image dataset. It passes its sightings to an Arduino Nano 33 IoT fitted with a real-time clock, which pulls appointment information from Google Calendar and flashes the LED when it detects a match between object and event. His example, which we’ve placed below the break, is a pill bottle triggering a reminder to take the pills.
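For the curious, the matching step might look something like the sketch below. This is not [Nick Bild]'s code, just a minimal Python illustration of the idea. The `CalendarEvent` type, the `LABEL_KEYWORDS` table, and the `should_flash` function are all hypothetical names, and we are assuming that a match means the detected object's keywords appear in the title of an event whose time window includes the current time.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class CalendarEvent:
    """A simplified calendar entry (hypothetical type, not the Google Calendar API)."""
    title: str
    start: datetime
    end: datetime


# Hypothetical mapping from detector labels to words to look for in event titles.
LABEL_KEYWORDS = {"pill_bottle": ["pill", "medication"]}


def should_flash(label, now, events, keywords=LABEL_KEYWORDS):
    """Return True if the seen object relates to an event that is active right now."""
    words = keywords.get(label, [])
    for event in events:
        # Assumption: only events whose window contains `now` count.
        if event.start <= now <= event.end:
            title = event.title.lower()
            if any(word in title for word in words):
                return True
    return False
```

With a "Take pills" event scheduled from 8:00 to 9:00, a `pill_bottle` sighting at 8:30 would trigger the light, while the same sighting at 10:00 would not.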

We like this idea, but can’t help thinking it has a flaw: the reminder only fires if the object comes into view. A version that combined this with more conventional time-based calendar reminders would address that, and perhaps save the forgetful a few problems.
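As a rough sketch of that suggestion, and nothing more, a time-based fallback could flag any event whose object has not been spotted once the appointment is overdue. The `Reminder` type, the `overdue_reminders` function, and the 15-minute grace period are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Reminder:
    """A calendar entry plus whether its associated object has been seen (hypothetical)."""
    title: str
    due: datetime
    object_seen: bool = False


def overdue_reminders(reminders, now, grace=timedelta(minutes=15)):
    """Return titles of reminders past due (plus grace) whose object was never seen."""
    return [
        r.title
        for r in reminders
        if not r.object_seen and now >= r.due + grace
    ]
```

Anything returned here could then drive a conventional alert, so the wearer is still reminded even if the pill bottle never crosses the camera's view.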