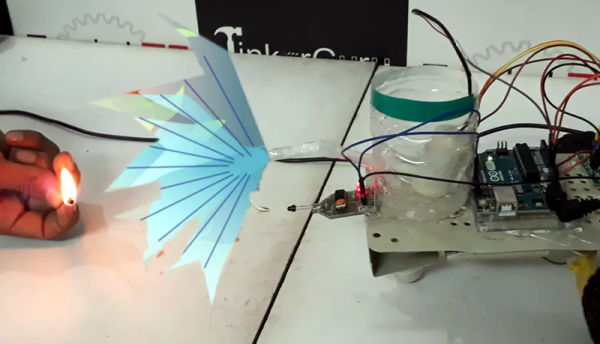

Robots, as we currently understand them, tend to run on electricity. Only in the fantastical world of Futurama do robots seek out alcohol as both a source of fuel and recreation. That is, until [Les Wright] and his beer-seeking robot came along. (YouTube, video after the break.)

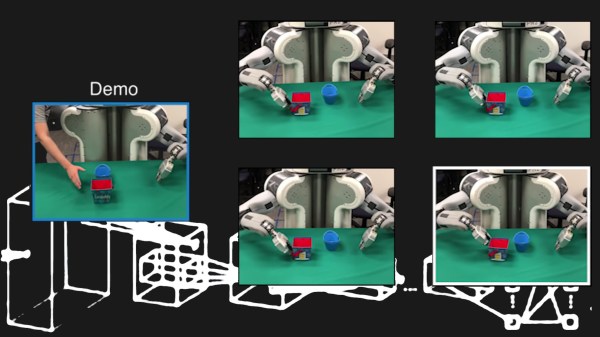

A Raspberry Pi 3 provides the brains, with an Intel Neural Compute Stick plugged in as an accelerator for neural network tasks. This hardware, combined with the OpenCV computer vision library, enables the tracked robot to identify objects and follow their position in the camera frame.

That a beer bottle was chosen is merely an amusing aside – the software can readily identify many different object categories. [Les] has also implemented a search feature, in which the robot will scan the room until a target bottle is identified. The required software and scripts are available on GitHub for your perusal.
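For a rough feel of how a search-and-track loop like this can hang together, here is a minimal sketch. It is not [Les]'s code: the `Detection` class, the `pick_target` and `steering_command` functions, the `"bottle"` label, and the thresholds are all hypothetical names and values chosen for illustration. It assumes some detector has already produced labelled bounding boxes for the current frame, and only decides which way the robot should move.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Detection:
    """One detector result (hypothetical structure, for illustration only)."""
    label: str         # class name reported by the detector
    confidence: float  # score between 0 and 1
    x_min: float       # left edge of the bounding box, in pixels
    x_max: float       # right edge of the bounding box, in pixels


def pick_target(detections: Sequence[Detection],
                target_label: str = "bottle",
                min_confidence: float = 0.5) -> Optional[Detection]:
    """Return the most confident detection of the target class, if any."""
    candidates = [d for d in detections
                  if d.label == target_label and d.confidence >= min_confidence]
    return max(candidates, key=lambda d: d.confidence, default=None)


def steering_command(detections: Sequence[Detection],
                     frame_width: float,
                     dead_zone: float = 0.1) -> str:
    """Map this frame's detections to a simple drive command.

    Returns "search" when no target is visible (keep scanning the room),
    otherwise "left", "right", or "forward" depending on where the target
    sits relative to the centre of the frame.
    """
    target = pick_target(detections)
    if target is None:
        return "search"

    # Horizontal offset of the box centre, normalised to the range -1..1.
    box_centre = (target.x_min + target.x_max) / 2
    offset = (box_centre - frame_width / 2) / (frame_width / 2)

    if offset < -dead_zone:
        return "left"
    if offset > dead_zone:
        return "right"
    return "forward"
```

Swapping the target label is all it would take to chase something other than a bottle in this sketch, which is the same flexibility the write-up describes. The dead zone is there so the robot doesn't twitch left and right when the target is already roughly centred.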

Over the past few years, we’ve seen an explosion in accelerator hardware for deep learning and neural network computation. This is, of course, particularly useful for robotics applications where a link to cloud services isn’t practical. We look forward to seeing further development in this field – particularly once the robots are able to open the fridge, identify the beer, and deliver it to the couch in one fell swoop. The future will be glorious!