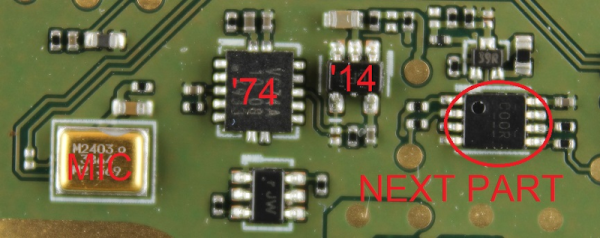

There’s a certain elite set of chips that fall into the “cold, dead hands” category, and they tend to be parts that have proven their worth over decades, not years. Chief among these is the ubiquitous 555 timer chip, which nearly 50 years after its release still finds its way into the strangest places. Add in other silicon stalwarts like the 741 op-amp and the LM386 audio amp, and you’ve got a Hall of Fame lineup for almost any project.

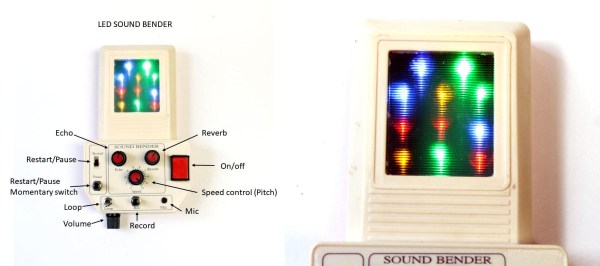

That’s exactly the complement of chips that powers this fun little dub siren. As [lonesoulsurfer] explains, dub sirens started out as actual sirens from police cars and the like that were used as part of musical performances. The ear-splitting versions were eventually replaced with sampled or synthesized siren effects for recording studio and DJ use, which leads us to the current project. The video below starts with a demo, and it’s hard to believe that the diversity of sounds this box produces comes from just a pair of 555s coupled by a 741 buffer. Five pots on the main PCB control the effects, while a second commercial reverb module (modified to support echo effects too) adds depth and presence. A built-in speaker and a nice-looking wood enclosure complete the build, which honestly sounds better than any 555-based synth has a right to.

Interested in more about the chips behind this build? We’ve talked about the 555 and how it came to be, taken a look inside the 741, and gotten a lesson in LM386 loyalty.
