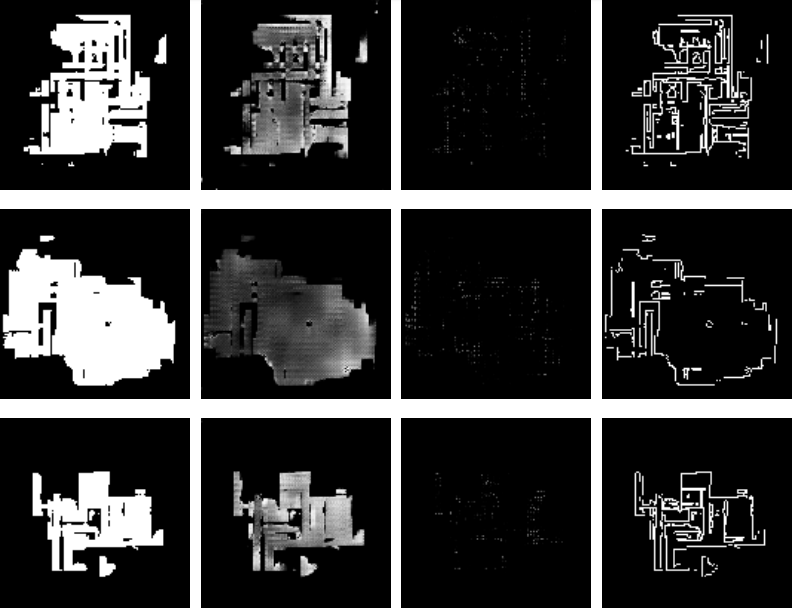

One of the difficulties in learning about neural networks is finding a problem that is complex enough to be instructive but not so complex as to impede learning. [ThomasNield] had an idea: Create a neural network to learn if you should put a light or dark font on a particular colored background. He has a great video explaining it all (see below) and code in Kotlin.

[Thomas] is very interested in optimization, so his approach is very much based on mathematics and algorithms of optimization. One thing that’s handy is that there is already an algorithm for making this determination. He found it on Stack Exchange, but we’re sure it’s in a textbook or paper somewhere. The existing algorithm makes the neural network really impractical, but it makes training easy since you can algorithmically develop a training set of data.

Once trained, the neural network works well. He wrote a small GUI and you can even select among various models.

Don’t let the Kotlin put you off. It is a derivative of Java and uses the same JVM. The code is very similar, other than it infers types and also adds functional program tools. However, the libraries and the principles employed will work with Java and, in many cases, the concepts will apply no matter what you are doing.

If you want to hardware accelerate your neural networks, there’s a stick for that. If you prefer C and you want something lean and mean, try TINN.

Continue reading “Jump Into AI With A Neural Network Of Your Own”